Archives & Museum Informatics

158 Lee Avenue

Toronto Ontario

M4E 2P3 Canada

ph: +1 416-691-2516

fx: +1 416-352-6025

info @ archimuse.com

www.archimuse.com

published: March 2004

Web Preservation Projects at Library of Congress

Abigail Grotke and Gina Jones, Library of Congress, USA

Abstract

The Library of Congress' mission is to make its resources available and useful to the Congress and the American people and to sustain and preserve a universal collection of knowledge and creativity for future generations.

An ever-increasing amount of the world's cultural and intellectual output is presently created in digital formats and does not exist in any physical form. Such materials are colloquially described as "born digital." This born digital realm includes open access materials on the World Wide Web.

The MINERVA Web Preservation Project was established to initiate a broad program to collect and preserve these primary source materials. A multidisciplinary team of Library staff representing cataloging, legal, public services, and technology services is studying methods to evaluate, select, collect, catalog, provide access to, and preserve these materials for future generations of researchers.

This paper will provide a report on the Library of Congress Web harvesting activities, describing experiences to date selecting, capturing, and providing access to topic-based collections, including U.S. Presidential Candidate Election 2000, September 11, 2001, the 2002 Olympics, and the 2002 Election.

Keywords: Web archiving, born digital, preservation, digital library, MINERVA

Introduction

Since the launch of the Library of Congress Web site in 1994, LC has been at the forefront of creating digital library content, most notably with its American Memory program, converting historical materials from analog to digital form and presenting them on the Web. But amid all this creation of content for and on the Web, there had been little discussion at the Library about how to save the growing number of Web sites being created around the world. Other national libraries had already begun to archive their own countries' Web resources, with different approaches: the National Library of Australia began selective collecting in 1996 with its PANDORA archive (http://pandora.nla.gov.au/index.html), and the Swedish Royal Library's Kulturarw3 project (http://www.kb.se/kw3/ENG/Default.htm) began bulk collecting of Swedish Web sites. Although the Internet itself was still in its infancy, the library and archival community had already begun to recognize the need to preserve these at-risk materials for researchers and future generations, for the frequency with which Web sites were created and disappeared was astonishing.

The time for the Library of Congress to act had come.

"An ever-increasing amount of primary source materials are being created in digital formats and distributed on the web, and do not exist in any other form. Future scholars will need them to understand the cultural, economic, political and scientific activities of today and, in particular, the changes that have been stimulated by computing and the Internet. ... [T]he National Research Council [has] emphasized the important role of the Library of Congress in collecting and preserving these digital materials. A Digital Strategy for the Library of Congress praised the Library's plans to address the challenges and urged the Library to move ahead rapidly" (Arms, January 2001).

In the summer of 2000, a team was formed with staff from across the Library of Congress (cataloging, legal, public services, and technology services) to study how to evaluate, select, collect, catalog, provide access to, and preserve electronic resources on the World Wide Web for future generations of researchers. The outcome of this study was two reports on the Web Preservation Project (an interim report dated January 15, 2001, and a final report dated September 3, 2001) written by consultant William Arms of Cornell University. Arms describes in detail the pilot project, nicknamed MINERVA (Mapping the Internet: Electronic Resources Virtual Archive). Using HTTrack mirroring software, the team selected a small number of sites and downloaded snapshots of them to the Library's servers. OCLC's Cooperative Online Resource Catalog service (CORC) was used to create catalog records for the sites, and the records were loaded into the Library's integrated library system (ILS) for access. User testing and quality studies were performed and reported in Arms's final report.

Fig. 1: MINERVA Web site (http://www.loc.gov/minerva)

About the same time, a parallel project was initiated by the Library of Congress to capture Web sites relating to the Fall 2000 presidential election, using the crawling services of the Internet Archive, a 501(c)(3) public nonprofit (see Partners below). It was a practical test case for a large-scale collection effort using the Internet Archive.

What Have We Learned?

Archiving the Web, or even portions of the Web, is no easy task, as the library community, including the Library of Congress, soon discovered. Many questions were raised during this period, and the MINERVA Web preservation project team continues to explore answers and options.

Which sites should be collected?

Based on recommendations stemming from the pilot and experiences to date, the Library has opted to perform selective collecting at this time, with a focus on event-based collections. Once an event is selected, a collection strategy for it is mapped out and categories for collection are defined. Recommending officers suggest sites relating to the event, and a technical team reviews sites to ensure that the crawler will grab the relevant content (see technical issues below).

The Library is currently drafting a formal collection policy statement for Web archiving, and is expanding activities in FY03 to include subject-based as well as event-based collecting. Examples include the recently captured 107th Congress sites, and possibly federal or state government Web sites, to complement a Mellon-funded project at the California Digital Library.

How often should snapshots be made?

The Library has tested a variety of capture frequencies, from a one-time capture of the 107th Congress sites to a once-every-hour capture on Election Day 2002. The frequency depends on how often a site's content changes and on its broad category (press, individual, government), and varies with the event being archived.
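A capture frequency of this kind can be expressed as a simple schedule: a start time, an end time, and a fixed interval between snapshots. The Python sketch below is illustrative only; the helper `capture_times` is hypothetical and is not part of any Library of Congress or Internet Archive tool. It enumerates daily captures over the Election 2000 crawl window and hourly captures over a single election day.

```python
from datetime import datetime, timedelta
from typing import Iterator


def capture_times(start: datetime, end: datetime, interval: timedelta) -> Iterator[datetime]:
    """Yield capture times from start through end (inclusive) at a fixed interval.

    Hypothetical helper for illustration only; a production crawl scheduler would
    also need retries, politeness delays, and per-site overrides.
    """
    if interval <= timedelta(0):
        raise ValueError("interval must be positive")
    current = start
    while current <= end:
        yield current
        current += interval


# Daily captures over the Election 2000 crawl window (August 1, 2000 to January 21, 2001).
daily = list(capture_times(datetime(2000, 8, 1), datetime(2001, 1, 21), timedelta(days=1)))

# Hourly captures over one day, as on Election Day 2002 (November 5, 2002).
hourly = list(capture_times(datetime(2002, 11, 5, 0), datetime(2002, 11, 5, 23), timedelta(hours=1)))

print(len(daily), len(hourly))  # 174 24
```

Using a generator keeps the schedule lazy, so a long crawl window at a short interval does not have to be materialized all at once.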

What constitutes a Web site? What will researchers expect when they visit a Web archive?

The MINERVA team continues to contemplate this basic question: the definition of a Web site. How deep does a site go? How much should we capture, or expect to capture, to replicate the experience of a Web site? How much will researchers want in order to make the archive useful to them? For now, we study the statistics of our crawls, improve quality where we can, and accept that there are limitations that must be anticipated, so that we are ready to alert users of the archive to them.

What technical challenges do we face? Are we getting what we want?

Archiving Web sites is not an exact science. The crawlers used for these activities have been superb tools for large-scale collection capture. However, their capabilities have limitations. Some examples we've discovered:

- Macromedia Flash™ sites are captured, but the Flash program itself cannot be altered, so its links are not redirected to archived copies. If a Flash program provides the site's initial navigation, it will send users to the live Web site, or to a 404 Not Found error if the site no longer exists.
- Sites that require log-ins, whether free or paid, will not be crawled any deeper than what the crawler can reach without logging in. Recent disappointing experiences with press sites, particularly in the Election 2002 collection, have caused us to rethink our approach to such sites.
- Because of the way crawlers work and the way dynamic Web sites are structured, content on dynamically generated Web pages is not captured.
- The crawler will generally not succeed in crawling a site that has dynamic menus, or menus and links created through script rewriting.

Related to the breadth and depth of Web sites, there are issues that we are just beginning to understand. We've recently been able to spend more time reviewing crawl statistics for the archives and performing time-consuming quality review of the sites we've collected. As we learn more about crawling technology, we'll be better able to refine our activities to get the most value out of each archived site.

How do we provide access to archived collections? What level of access do we provide?

It's one thing to collect Web sites; it's another to make them available to researchers. Cataloging, interface, and access issues must all be addressed.

Cataloging

The MINERVA prototype experimented with cataloging Web sites at the item level and at the collection level (for a group of sites), using OCLC's Cooperative Online Resource Catalog service (now a part of OCLC's Connexion service, http://www.oclc.org/connexion/). The Library will continue to provide collection-level records for inclusion in the ILS, and is planning to assign more in-depth descriptive cataloging at the Web site level for selected sites, based on the Library of Congress Metadata Object Description Schema (MODS) (http://www.loc.gov/standards/mods/), for the Olympics 2002, Election 2002, and selected portions of the September 11 Web Archive. Metadata includes:

- Name (Creator or issuing publisher)
- Abstract
- Capture date
- Genre
- Physical Description/Format
- Language
- Subject
- Access Conditions
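To illustrate what a site-level descriptive record covering these elements might look like, the sketch below builds a minimal MODS document with Python's standard `xml.etree.ElementTree`. It is a hedged sketch, not the Library's cataloging workflow: the mapping of capture date to `originInfo/dateCaptured` and of format to `physicalDescription/internetMediaType` is our assumption, the function `build_mods_record` is hypothetical, and every sample value is a placeholder rather than a real catalog record.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Optional

MODS_NS = "http://www.loc.gov/mods/v3"
ET.register_namespace("mods", MODS_NS)


def _add(parent: ET.Element, tag: str, text: Optional[str] = None, **attrs: str) -> ET.Element:
    """Append a MODS-namespaced child element with optional text and attributes."""
    child = ET.SubElement(parent, f"{{{MODS_NS}}}{tag}", attrs)
    if text is not None:
        child.text = text
    return child


def build_mods_record(creator: str, abstract: str, captured: str, genre: str,
                      media_type: str, language: str, subjects: Iterable[str],
                      access: str) -> str:
    """Return a minimal MODS XML string covering the descriptive elements listed above."""
    root = ET.Element(f"{{{MODS_NS}}}mods")
    name = _add(root, "name")
    _add(name, "namePart", creator)                               # Name
    _add(root, "abstract", abstract)                              # Abstract
    origin = _add(root, "originInfo")
    _add(origin, "dateCaptured", captured, encoding="iso8601")    # Capture date
    _add(root, "genre", genre)                                    # Genre
    physical = _add(root, "physicalDescription")
    _add(physical, "internetMediaType", media_type)               # Physical description/format
    lang = _add(root, "language")
    _add(lang, "languageTerm", language, type="code", authority="iso639-2b")  # Language
    for topic in subjects:                                        # Subject(s)
        subject = _add(root, "subject")
        _add(subject, "topic", topic)
    _add(root, "accessCondition", access)                         # Access conditions
    return ET.tostring(root, encoding="unicode")


# All values below are hypothetical placeholders.
mods_xml = build_mods_record(
    creator="Example for Senate Committee",
    abstract="Campaign Web site of a hypothetical Senate candidate.",
    captured="2002-11-05",
    genre="Web site",
    media_type="text/html",
    language="eng",
    subjects=["Elections", "Political campaigns"],
    access="Remote access permitted unless the site owner opts out.",
)
print(mods_xml)
```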

Additionally, machine-generated administrative and preservation metadata for each base URL in the collection will enable the Library to provide long-term preservation of the collection. These include:

- URL
- Internet Protocol (IP) address
- Owner or creator
- Date and time of collection
- File content type
- Archive length
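A per-URL administrative record with these fields might be represented as follows. This Python sketch is an assumption for illustration: the `CaptureRecord` class and `make_capture_record` helper are hypothetical, archive length is taken to mean the size in bytes of the stored object, and in practice such values would come from the crawler's own logs rather than being assembled by hand. The URL and IP address are placeholders (192.0.2.0/24 is a documentation-only range).

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class CaptureRecord:
    url: str
    ip_address: str
    owner: str             # owner or creator of the site, if known
    captured_at: datetime  # date and time of collection
    content_type: str      # MIME type reported by the server
    archive_length: int    # size in bytes of the stored object


def make_capture_record(url: str, ip_address: str, owner: str, content_type: str,
                        payload: bytes, captured_at: Optional[datetime] = None) -> CaptureRecord:
    """Assemble a record, deriving archive_length from the stored payload itself."""
    if captured_at is None:
        captured_at = datetime.now(timezone.utc)
    return CaptureRecord(url, ip_address, owner, captured_at, content_type, len(payload))


capture = make_capture_record(
    url="http://www.example.org/",
    ip_address="192.0.2.10",
    owner="Example Organization",
    content_type="text/html",
    payload=b"<html><body>Hello</body></html>",
    captured_at=datetime(2002, 11, 5, 14, 0, tzinfo=timezone.utc),
)
print(asdict(capture)["archive_length"])  # 31
```

Deriving the length from the payload, rather than trusting a server-reported header, means the recorded value always matches what was actually stored.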

Some of the cataloging continues to be performed in-house, while the Library has contracted with WebArchivist.org to perform cataloging work based on Library of Congress specifications. Cataloging of individual sites is a time-consuming effort, and automatic processes must be balanced against human cataloging to ensure a proper level of access for potentially massive amounts of data.

Access and Interface

The prototype was made available on campus at the Library of Congress, and records were included in the Library's catalog. In addition, the Election 2000 Web Archive and the September 11 Web Archive have been made available through the Internet Archive, with collection information available on the MINERVA Web site, including a link to the respective archive. WebArchivist.org created a model interface to the September 11 Web Archive (http://september11.archive.org/), which was initially released on October 11, 2001, and redesigned in September 2002. Further interface issues will be addressed with the release of candidate Web sites as part of the Election 2002 Web Archive in March 2003.

In the coming year, the Library of Congress will be working on the interface and display of these archived materials. The MINERVA Web preservation project team, with assistance from the Library's Information Technology office, has been exploring ways of indexing archived Web sites based on recommendations from the prototype reports, and has been discussing interface options for displaying these materials with a variety of Library experts. IT staff are working closely with the Internet Archive to ensure proper transfer of materials from the acquisitions agent to Library of Congress servers, and have assisted with technical issues related to this work.

What are the legal challenges?

The MINERVA pilot explored, with support from the Office of the General Counsel, copyright and other legal issues, and further discussions and recommendations have followed for each of the ensuing collections.

While preserving open access materials from the Web falls within the Library of Congress's mission "to collect and preserve the cultural and intellectual artifacts of today for the benefit of future generations" (Arms, January 2001), the Library does not explicitly have the right to do so under current copyright law.

The Copyright Office is working with the Library to make its requirements clear, with the goal of proposing to Congress an amendment to Section 407 of the Copyright Act to permit downloading of open access materials that are on the Internet. Arms's final report stressed the importance of the Library extending its view of copyright deposit to include Web materials; however, new regulations will be needed to implement this, such as a revised definition of "best edition" (Arms, September 2001).

The Library's legal counsel has determined that the Library of Congress has the right to acquire these materials for the Library's collections and the right to serve these materials to researchers who visit the Library. The Library's mission states, "The Congress has now recognized that, in an age in which information is increasingly communicated and stored in electronic form, the Library should provide remote access electronically to key materials." With remote access in mind, the Library has adopted an opt-in/opt-out policy that allows content providers to opt out of any remote presentation of Web archives. When a site is harvested, a notice is sent to the content provider informing it of our activities. An example from the Election 2002 Web Archive follows:

On behalf of the United States Library of Congress, the Internet Archive will be harvesting content from your website at regular intervals during the 2002 election cycle for inclusion in the Library's Election 2002 historic Internet collection. This collection is a pilot project of the Library in connection with its mandate from Congress to collect and preserve ephemeral digital materials for this and future generations. In March 2003, the Library will make this collection available to researchers onsite at Library facilities. The Library also wishes to make the collection available to offsite researchers by hosting the collection on the Library's public access website. The Library hopes that you share its vision of preserving Web materials about Election 2002 and permitting researchers from across the world to access them. If you do not wish to permit offsite access to your materials through the Library's website, please email the Library's MINERVA Project (Mapping the Internet Electronic Resources Virtual Archive) at: election2002@loc.gov at your earliest convenience.

We thank you in advance for your cooperation.

The Library of Congress

Once the collections are prepared for access, the wishes of content providers that opt out of remote access to their archived sites will be honored, and those sites will be available only to researchers on-site at the Library of Congress. Catalog records for these items may be viewed remotely, if technically feasible.
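The opt-out policy amounts to a simple access rule: researchers on-site at the Library may view every archived site, while remote users may view only sites whose providers have not opted out. The Python sketch below encodes that rule; the names `Location`, `can_view_archived_site`, and the `opted_out` set are hypothetical and do not correspond to any Library system, and the sample host names are placeholders.

```python
from enum import Enum
from typing import Set


class Location(Enum):
    ONSITE = "onsite"  # researcher at Library of Congress facilities
    REMOTE = "remote"  # researcher using the Library's public Web site


def can_view_archived_site(site: str, location: Location, opted_out: Set[str]) -> bool:
    """Return True if the archived copy of `site` may be shown to this researcher."""
    if location is Location.ONSITE:
        return True                  # on-site access is permitted for every archived site
    return site not in opted_out     # remote access honors provider opt-outs


opted_out = {"candidate-a.example.com"}
print(can_view_archived_site("candidate-a.example.com", Location.ONSITE, opted_out))  # True
print(can_view_archived_site("candidate-a.example.com", Location.REMOTE, opted_out))  # False
print(can_view_archived_site("candidate-b.example.com", Location.REMOTE, opted_out))  # True
```

Catalog records fall outside this check, since the policy allows them to be viewed remotely regardless of opt-out status.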

Partners

Internet Archive (http://www.archive.org)

The Internet Archive has been a major partner in the collection and development of the Library's Web archives to date. The Library has contracted with the Internet Archive to harvest sites for the three collections reviewed in detail later in this paper, as well as three additional collections currently underway. Founded in 1996, the Archive has been receiving data donations (over 100 terabytes to date) from Alexa Internet and others to build its digital library of Internet sites (http://archive.org/about/about.php).

The Internet Archive has used technology available through its partnership with Alexa Internet to harvest, manage, and provide access to the Library's Web archive data. As noted above, two collections (Election 2000 and September 11) are temporarily available for viewing at the Internet Archive Web site while production work continues at the Library of Congress to make them available from our own site.

Alexa Internet, in cooperation with the Internet Archive, designed the Wayback Machine, a three-dimensional index that allows browsing of Web documents over multiple time periods (http://www.archive.org). This tool was made available to users in October 2001 to search and navigate the Internet Archive's "Internet Library." The technology has subsequently been made available to the Library of Congress to help provide access to the Library's Web archive collections.
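The idea of an index spanning URLs and capture times can be illustrated with a small lookup structure: for each URL, keep a sorted list of capture timestamps, and answer a request for a given date with the nearest capture. This Python sketch illustrates the concept only; it is not a description of how the Wayback Machine is actually implemented, and the class `CaptureIndex` is hypothetical. Timestamps are assumed to be 14-digit `YYYYMMDDhhmmss` strings, which sort correctly as text.

```python
import bisect
from collections import defaultdict
from datetime import datetime
from typing import DefaultDict, List, Optional


def _parse(ts: str) -> datetime:
    """Parse a 14-digit YYYYMMDDhhmmss timestamp."""
    return datetime.strptime(ts, "%Y%m%d%H%M%S")


class CaptureIndex:
    """Map each URL to a sorted list of capture timestamps."""

    def __init__(self) -> None:
        self._captures: DefaultDict[str, List[str]] = defaultdict(list)

    def add(self, url: str, timestamp: str) -> None:
        bisect.insort(self._captures[url], timestamp)  # keep each URL's list sorted

    def nearest(self, url: str, timestamp: str) -> Optional[str]:
        """Return the capture of `url` closest in time to `timestamp`, or None if none exist."""
        times = self._captures.get(url)
        if not times:
            return None
        i = bisect.bisect_left(times, timestamp)
        candidates = times[max(i - 1, 0): i + 1]  # neighbors on either side of the insertion point
        target = _parse(timestamp)
        return min(candidates, key=lambda t: abs(_parse(t) - target))


index = CaptureIndex()
for ts in ["20010912120000", "20010915120000", "20011001120000"]:
    index.add("http://example.org/", ts)
print(index.nearest("http://example.org/", "20010914120000"))  # 20010915120000
```

Comparing parsed datetimes, rather than the raw digit strings as integers, is what makes "nearest" mean nearest in elapsed time.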

In terms of the crawler technology used to create the Library's Web archive, Compaq Computer, working with the Internet Archive, undertook the task of collecting and archiving sites for the Election 2000 Web Archive. The Internet Archive collected and archived Web sites for the Olympics 2002 Web Archive and the September 11th Web Archive, and has participated with the Library in its three most recent projects: Election 2002, September 11 Remembrance, and the 107th Congress.

WebArchivist.org: University of Washington and the State University of New York, Information Technology (SUNYIT)

WebArchivist.org's mission is "to develop systems for identifying, collecting, cataloguing and analyzing large-scale archives of Web objects" (http://www.webarchivist.org). This organization has partnered with the Library of Congress on its Web archiving projects currently underway, including the September 11th Web Archive and the Election 2002 Web Archive.

The Web Archives

Detailed information is provided below on three collections that the Library has produced to date, providing a basic analysis of each collection and the objects within them, including:

General Information: Covers the collection in a general sense by providing the objective, a brief analysis of the Web site collection composite, period and frequency of crawl, and any other pertinent collection information.

Detailed Collection Information: Provides a detailed sense of the Web site collection composite, the crawler's activities for the URLs in the collection, and the extent of the Library's quality review process during the crawl period.

Collection File Analysis: Provides, as best can be determined during this initial review period, the detailed collection composite.

Additional information about cataloging or interface design is provided below if available.

Note: For the following collections, staff was limited and could generally perform only site selection during crawl periods. No quality review was done on the collections during the crawl, nor was it possible to investigate and resolve the "not found" and redirect codes. Statistics were limited in these early days, and it is difficult to characterize these Web collections with any certainty for link rot (links that no longer work) or other "not found" analysis. A "good" object is any object returned with a successful HTTP code (200). Recently, more staff have been hired and statistics are improving, so we anticipate knowing more about future collections and being able to correct problems during the testing and collection period, rather than examining them after the fact.
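The distinction between "good" objects and redirect or error responses amounts to grouping crawl results by HTTP status class; the charts that follow report counts under 200, 300, 400, and 500, which appear to correspond to those classes. The Python sketch below shows the grouping. The input format (a plain list of integer status codes) is an assumption, since real crawler logs vary in layout, and the sample codes are made up.

```python
from collections import Counter
from typing import Iterable


def status_class(code: int) -> str:
    """Map an HTTP status code to its class label, e.g. 404 -> '4xx'."""
    if not 100 <= code <= 599:
        return "invalid"
    return f"{code // 100}xx"


def summarize(codes: Iterable[int]) -> Counter:
    """Count objects per status class."""
    return Counter(status_class(c) for c in codes)


sample = [200, 200, 404, 301, 302, 500, 200]  # hypothetical crawl results
summary = summarize(sample)
good = sum(1 for c in sample if c == 200)     # "good" objects as defined above
print(summary["2xx"], summary["3xx"], summary["4xx"], summary["5xx"], good)  # 3 2 1 1 3
```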

Election 2000

The Election 2000 Collection is important because it contributes to the historical record of the U.S. presidential election, capturing information that could otherwise have been lost. With the growing role of the Web as an influential medium, records of historical events such as the U.S. presidential election could be considered incomplete without materials that were "born digital" and never printed on paper. Because Internet content changes at a very rapid pace, especially on those sites related to an election, many important election sites have already disappeared from the Web. For the Election 2000 Collection, rapidly changing sites were archived daily, or even twice and three times in a day, in an attempt to capture the dynamic nature of Internet content. "This was the first presidential election in which the Web played an important role, and there would have been a gap in the historical record of this period without a collection such as this," said Winston Tabb, Associate Librarian for Library Services of the Library of Congress. (http://www.loc.gov/today/pr/2001/01-091.html)

General Information

The Election 2000 Web Archive, the first Web collection developed by the Library, consists of 800 gigabytes of archived digital materials and is a selective collection of nearly 800 sites archived daily between August 1, 2000, and January 21, 2001, covering the United States national election period.

The Library of Congress commissioned the Internet Archive to assist the Library in the harvesting of sites. Compaq Computer Corporation, using the Mercator crawler, captured and stored all the data. The Election 2000 Collection was made available to the public in June of 2001 and is accessible online at the Internet Archive at the following URL: http://web.archive.org/collections/e2k.html.

The Library has received the archive and is processing it for long-term preservation and access. Once processing and production of a Library interface for the collection is complete, the Election 2000 Web Archive will be made available to the public from the MINERVA Web site.

Collection Interface

While the Election 2000 Web Archive is hosted by the Internet Archive, it is searchable by date, URL, and by category via the Wayback Machine.

Detailed Collection Information

The 797 sites in the archive consist of the following:

| Category | Sites |
|---|---|
| Conservation District | 39 |
| Democratic Party | 86 |
| Government | 6 |
| Green Party | 11 |
| Humor & Criticism | 24 |
| League of Women Voters | 11 |
| Libertarian Party | 45 |
| News | 38 |
| Other Election 2000 Sites | 167 |
| Presidential and Party Candidate | 52 |
| Reform Party | 63 |
| Republican Party | 60 |
| U.S. House | 51 |
| U.S. Senate | 130 |
| Vote Trading | 14 |

Chart 1: General statistical overview of the Election 2000 Web Archive.

| Item | Value |
|---|---|
| URLs/Web sites | 797 |
| Crawl dates | August 1, 2000 to January 21, 2001 |
| Crawler | Internet Archive/Compaq Mercator |
| Crawl periodicity | Daily |
| HTTP Code 200 | 72,135,149 objects |
| HTTP Code 400 | 12,642,026 objects |
| HTTP Code 300 | 2,646,940 objects |
| HTTP Code 500 | 56,804 objects |

Chart 2: HTTP return codes for all objects in the collection.

See Appendix A for a complete listing of the Election 2000 Web Archive HTTP codes and a link to a description of codes.

Collection File Analysis

Upon completion of the five-month crawl, the Election 2000 Web Archive consisted of 72,135,149 valid (good) objects (HTTP return code 200). The Internet Archive performed a cursory pre-shipment de-duping, in which duplicate documents are deleted from the collection, and removed 59,429,760 duplicate objects. Even so, some duplicates remain: the Election 2000 Web Archive consists of 12,705,359 valid objects; however, a checksum analysis of the archive files indicates that of these 12,705,359 valid objects, only 9,972,695 are unique.
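Checksum-based de-duplication of the kind described here can be sketched in a few lines: each object's content is hashed, and any object whose digest has already been seen is treated as a duplicate. The Python below is an illustration, not the Internet Archive's or the Library's actual procedure; the choice of SHA-1 is our assumption (any collision-resistant digest would serve), the helper `dedupe` is hypothetical, and objects are held in memory for simplicity.

```python
import hashlib
from typing import Iterable, List, Tuple


def dedupe(objects: Iterable[Tuple[str, bytes]]) -> Tuple[List[Tuple[str, bytes]], int]:
    """Keep the first object seen for each content digest.

    `objects` yields (url, content) pairs. Returns the unique objects and the
    number of duplicates skipped. Identical content captured at a different URL,
    or at the same URL on a later day, counts as a duplicate.
    """
    seen = set()
    unique = []
    duplicates = 0
    for url, content in objects:
        digest = hashlib.sha1(content).hexdigest()
        if digest in seen:
            duplicates += 1
            continue
        seen.add(digest)
        unique.append((url, content))
    return unique, duplicates


captures = [
    ("http://example.org/", b"front page v1"),
    ("http://example.org/", b"front page v1"),  # unchanged next-day capture
    ("http://example.org/", b"front page v2"),
    ("http://example.org/logo.gif", b"GIF89a..."),
]
unique, dup_count = dedupe(captures)
print(len(unique), dup_count)  # 3 1
```

At archive scale, the set of digests would be kept in a persistent store rather than in memory, but the comparison logic is the same.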

Chart 3 provides a breakdown of the Election 2000 Web Archive file formats for HTTP code 200. Most of the archive consists of document-type files (HTML, Word, PDF, RTF) and image files, probably associated with the document files. File formats for this collection are as follows:

| File format | Count (12,705,359) | Unique (9,972,695) |
|---|---|---|
| HTML/TEXT/RTF | 11,134,391 (88%) | 9,246,786 (92%) |
| JAVASCRIPT/CSS | 1,215 (<1%) | 248 (<1%) |
| MS/WORD | 9,225 (<1%) | 2,897 (<1%) |
| | 74,365 (<1%) | 21,664 (<1%) |
| IMAGES | 739,228 (6%) | 690,233 (7%) |
| AUDIO | 29,045 (<1%) | 7,019 (<1%) |
| VIDEO | 5,031 (<1%) | 1,489 (<1%) |
| ZIP | 1,437 (<1%) | 294 (<1%) |
| FLASH | 0 (0%) | 0 (0%) |
| XML | 557 (<1%) | 105 (<1%) |
| OTHER | 7,268 (<1%) | 1,960 (<1%) |

Chart 3: Election 2000 Web Archive file formats for HTTP Code 200.
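The percentages in Chart 3 are each format's share of the column total. A short Python sketch of that calculation, using a few rows from the chart, follows; the helper `share_label` is hypothetical, and the presentation rule (report "<1%" for nonzero shares below one percent, otherwise round to a whole percent) is our assumption about how small categories are best shown.

```python
def share_label(count: int, total: int) -> str:
    """Format count/total as a whole-number percentage, using '<1%' for small nonzero shares."""
    if total <= 0:
        raise ValueError("total must be positive")
    if count == 0:
        return "0%"
    pct = 100 * count / total
    return "<1%" if pct < 1 else f"{round(pct)}%"


total = 12_705_359  # Election 2000 objects with HTTP code 200 (Chart 3)
rows = {"HTML/TEXT/RTF": 11_134_391, "IMAGES": 739_228, "AUDIO": 29_045, "FLASH": 0}
for fmt, count in rows.items():
    print(fmt, share_label(count, total))
# HTML/TEXT/RTF 88%
# IMAGES 6%
# AUDIO <1%
# FLASH 0%
```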

Cataloging and Access

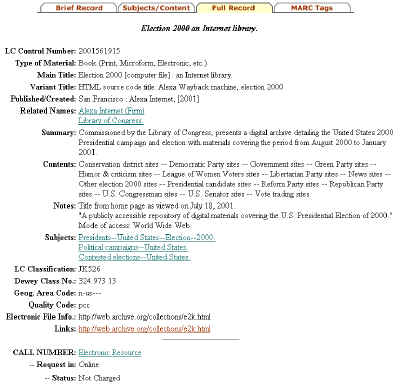

Election 2000 has a collection-level catalog record (Fig. 2). Currently the record points to the Internet Archive copy of the collection. Because of time constraints and higher priorities, there are no current plans to provide in-depth descriptive cataloging at the Web site level. Instead, the Library will provide full lists of Web sites/URLs to give access to the archive. Additionally, the archive may be fully indexed by a search engine when it is available from the Library's Web site.

Fig 2. Snapshot of the Election 2000 catalog record (full record).

September 11th

"It is the job of a library to collect and make available these materials so that future scholars, educators and researchers can not only know what the official organizations of the day were thinking and reporting about the attacks on America on Sept. 11, but can read the unofficial, 'online diaries' of those who lived through the experience and shared their points of view," said Winston Tabb, Associate Librarian for Library Services. "Such sites are very powerful primary source materials."

"The Internet is as important as the print media for documenting these events," said Diane Kresh, the Library's Director of Public Service Collections. "Why? Because the Internet is immediate, far-reaching, and reaches a variety of audiences. You have everything from self-styled experts to known experts commenting and giving their viewpoint." (http://www.loc.gov/today/pr/2001/01-150.html)

General Information

The MINERVA team had completed the Election 2000 Web Archive, and had begun discussions with WebArchivist.org and the Internet Archive about the potential for an Election 2002 collection. As preparations were underway, the tragic events of September 11, 2001, occurred, prompting Web creators around the world to respond by creating memorial sites, tribute pages and survivor registries. Corporations and non-profits solicited donations for charity. News sites from countless countries dedicated their resources to reporting the disaster and its aftermath, and government sites sought to inform and reassure the people. The Library of Congress and its partners scrambled to collect this content as it sprang up. Sites were selected by Recommending Officers at the Library of Congress, by the Internet Archive, by WebArchivist.org, and by the public. This archive consists of over 30,000 Web sites.

Access

On October 11, 2001, the September 11 Web Archive was released to the public.

The September 11 Web Archive is currently hosted by the Internet Archive at http://september11.archive.org/. WebArchivist.org developed and published for public access the user interface found on the september11.archive.org Web site (Fig. 3).

Fig. 3: Snapshot of the September 11 Web Archive, October 2001.

The Library has received the archive and is processing it for long-term preservation and access. Once processing and production of the Library's interface for the archive is complete, the September 11 Web Archive will be made available to the public from the Library's MINERVA Web site.

Collection Interface

While the collection is hosted by the Internet Archive, it is searchable by date, by URL, and by category via the Wayback Machine.

Detailed Collection Information

Chart 4 provides a general statistical overview of the September 11th Collection HTTP return codes for all objects in the archive.

| Item | Value |
|---|---|
| URLs | 30,000+ |
| Crawl dates | September 11, 2001 to December 6, 2001 |
| Crawler | Internet Archive/Alexa crawler |
| Crawl periodicity | Daily |
| HTTP Code 200 | 331,299,192 objects |
| HTTP Code 400 | 15,925,789 objects |
| HTTP Code 300 | 18,762,095 objects |
| HTTP Code 500 | 1,634,926 objects |

Chart 4: HTTP return codes for objects in the September 11 Web Archive.

See Appendix A for a complete listing of the HTTP codes and a link to a description of codes.

Collection File Analysis

At the end of the three-month crawl, the September 11th Web Archive consisted of 331,299,187 valid (good) objects (HTTP return code 200). Because of the collection's enormous size (5 terabytes of data), the Library's contractual requirements included a complete de-duping of the collection. According to a checksum analysis, after de-duping the Library's September 11th Collection consists of 55,224,374 valid unique objects, reducing its size from 5 terabytes of data to 1 terabyte.

Chart 5 provides a breakdown of the file formats for HTTP code 200. Most files are document-type files (HTML, Word, PDF, RTF); however, this archive has a greater percentage of graphic formats than the other Library Web archives. File formats are as follows:

| File format | Count (331,299,187) | Unique (55,224,374) |
|---|---|---|
| HTML/TEXT/RTF | 192,565,878 (60%) | 45,651,531 (82%) |
| JAVASCRIPT/CSS | 3,238,926 (1%) | 617,426 (1%) |
| MICROSOFT APPS | 1,028,754 (<1%) | 66,673 (<1%) |
| | 4,560,763 (1%) | 484,662 (<1%) |
| IMAGES | 126,829,266 (38%) | 7,883,425 (15%) |
| AUDIO | 877,372 (<1%) | 150,274 (<1%) |
| VIDEO | 208,492 (<1%) | 32,587 (<1%) |
| ZIP | 217,659 (<1%) | 29,616 (<1%) |
| FLASH | 245,936 (<1%) | 14,313 (<1%) |
| XML | 61,173 (<1%) | 5,901 (<1%) |
| OTHER | 1,462,492 (<1%) | 287,453 (<1%) |

Chart 5: September 11th Web Archive file formats for HTTP Code 200.

Cataloging

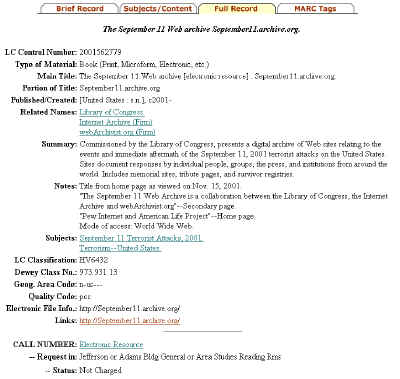

A collection-level bibliographic record (Fig. 4) currently points to the Internet Archive.

WebArchivist.org is working with the Library to create catalog records for 2,500 of the 30,000 Web sites. The remaining sites will be searchable using the Wayback Machine.

Fig. 4: Snapshot of the bibliographic record for the September 11 Web Archive.

Olympics 2002 Web Archive

General Information

The Olympics 2002 Web Archive presents a daily snapshot of 70 Web sites selected by the Recommending Officer for sports at the Library of Congress, captured generally during 1-2 A.M. Pacific Time by the Internet Archive. The 2002 Olympics were selected because they were held in the United States.

Detailed Collection Information

Chart 6 provides a general overview of the Olympics 2002 Web Archive. The sites in the collection consist of the Olympic 2002-related Web sites of the United States Olympic Committee, the official site for Salt Lake 2002, and the official Olympics Web site, plus 67 worldwide press Web sites.

The Library's contractual requirements did not include de-duping of the collection, because of its small size. The Olympics 2002 Web Archive is not currently available remotely or onsite at the Library of Congress. The Library has received the archive and is processing it for long-term preservation and access, and is working on interface and access issues.

| Item | Value |
|---|---|
| URLs | 70 |
| Crawl dates | February 7, 2002 to February 27, 2002 |
| Crawler | Internet Archive/Alexa crawler |
| Crawl periodicity | Daily |
| HTTP Code 200 | 18,445,502 objects |
| HTTP Code 400 | 420,591 objects |
| HTTP Code 300 | 2,157,116 objects |
| HTTP Code 500 | 4,680 objects |

Chart 6: Olympics 2002 Web Archive HTTP return codes.

See Appendix A for a complete listing of the HTTP codes and a link to a description of codes.

Collection File Analysis

Of the 18,445,502 valid objects returned for this three-week collection, there were 6,696,026 valid unique objects. Approximately 85% of the archive consists of document type information files (HTML, Word, PDF, RTF). File formats for this archive are as follows:

| File format | Count (18,445,502) | Unique (6,696,026) |
|---|---|---|
| HTML/TEXT/RTF | 15,281,190 (83%) | 5,679,313 (85%) |
| JAVASCRIPT/CSS | 386,171 (2%) | 279,792 (4%) |
| MICROSOFT APPS | 2,118 (<1%) | 729 (<1%) |
| | 28,099 (<1%) | 10,545 (<1%) |
| IMAGES | 2,666,032 (15%) | 690,233 (10%) |
| AUDIO | 50,579 (<1%) | 21,361 (<1%) |
| VIDEO | 10,773 (<1%) | 5,608 (<1%) |
| ZIP | 1,512 (<1%) | 759 (<1%) |
| FLASH | 5,046 (<1%) | 1,630 (<1%) |
| XML | 55 (<1%) | 52 (<1%) |
| OTHER | 11,694 (<1%) | 4,579 (<1%) |

Chart 7: Olympics Archive file formats for HTTP Code 200.
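The relationship between the Count and Unique columns of Chart 7 is simple arithmetic. This illustrative sketch transcribes three rows of the chart and computes the overall proportion of unique objects and each format's share of the unique total; the remaining rows are omitted for brevity, and the names used are hypothetical.

```python
# Three rows transcribed from Chart 7 (objects returned with HTTP 200):
# format -> (all captures, unique objects). Other rows omitted for brevity.
CHART_7_ROWS = {
    "HTML/TEXT/RTF": (15_281_190, 5_679_313),
    "JAVASCRIPT/CSS": (386_171, 279_792),
    "IMAGES": (2_666_032, 690_233),
}
TOTAL_CAPTURES = 18_445_502  # column total for "Count"
TOTAL_UNIQUE = 6_696_026     # column total for "Unique"


def unique_fraction() -> float:
    """Percentage of all HTTP 200 captures that were unique objects."""
    return 100.0 * TOTAL_UNIQUE / TOTAL_CAPTURES


def share_of_unique(fmt: str) -> float:
    """Percentage of all unique objects that belong to one file format."""
    _, unique = CHART_7_ROWS[fmt]
    return 100.0 * unique / TOTAL_UNIQUE


if __name__ == "__main__":
    print(f"unique overall: {unique_fraction():.1f}%")
    for fmt in CHART_7_ROWS:
        print(f"{fmt}: {share_of_unique(fmt):.1f}% of unique objects")
```

By this calculation, about 36% of the 18,445,502 captures were unique, so roughly 64% of successful captures repeated an object already in the archive. Rounded to whole numbers, the per-format shares reproduce the 85%, 4% and 10% shown in the Unique column.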

The Olympics 2002 Web Archive consists primarily of worldwide news and newspaper Web sites, which tend to carry online graphical advertisements that change at least daily; this may explain why the duplicate count for images was low.
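The paper does not state how unique objects were identified. One common approach, assumed here purely for illustration, treats two captures as duplicates only when their bytes are identical, compared via a content digest such as SHA-1. Under that assumption, an advertisement image that is replaced every day produces a new digest each day and is counted as a new unique object. The function name and sample data are hypothetical.

```python
import hashlib
from typing import Iterable, Tuple


def count_unique(payloads: Iterable[bytes]) -> Tuple[int, int]:
    """Return (total captures, unique captures).

    ASSUMPTION: two captures count as duplicates only if their bytes are
    identical, i.e. they produce the same SHA-1 digest.
    """
    seen = set()
    total = 0
    for body in payloads:
        total += 1
        seen.add(hashlib.sha1(body).hexdigest())
    return total, len(seen)


if __name__ == "__main__":
    # Three hypothetical daily crawls of one page: a logo that never changes
    # and an advertisement banner that is replaced every day.
    captures = [
        b"logo-v1", b"ad-day1",  # day 1
        b"logo-v1", b"ad-day2",  # day 2
        b"logo-v1", b"ad-day3",  # day 3
    ]
    print(count_unique(captures))  # (6, 4)
```

Exact-match digests are cheap to compute and store, but they cannot recognize near-duplicates: a banner that differs by a single pixel is still counted as unique.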

Cataloging

Cataloging is currently underway for the Olympics 2002 Web Archive. The Library will provide a collection-level record and is planning to assign more in-depth descriptive cataloging at the Web site level, based on the Library of Congress Metadata Object Description Schema (MODS) (http://www.loc.gov/standards/mods/).

Administrative and preservation metadata for each base URL in the collection is also being machine-generated.
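As an illustration of generating site-level MODS description automatically from crawl data, the sketch below builds a minimal record with Python's standard xml.etree.ElementTree module. The MODS elements used (titleInfo, typeOfResource, originInfo/dateCaptured, location/url) belong to the MODS schema, but the selection of fields, the function name site_record, and the sample values are hypothetical and do not represent the Library's actual records.

```python
import xml.etree.ElementTree as ET

MODS_NS = "http://www.loc.gov/mods/v3"
ET.register_namespace("mods", MODS_NS)  # serialize elements with a "mods:" prefix


def q(tag: str) -> str:
    """Qualify a MODS element name with the MODS namespace."""
    return f"{{{MODS_NS}}}{tag}"


def site_record(title: str, url: str, first_capture: str, last_capture: str) -> ET.Element:
    """Build a minimal, hypothetical site-level MODS record from crawl data.

    Dates are W3CDTF strings such as "2002-02-07".
    """
    mods = ET.Element(q("mods"))
    title_info = ET.SubElement(mods, q("titleInfo"))
    ET.SubElement(title_info, q("title")).text = title
    ET.SubElement(mods, q("typeOfResource")).text = "text"
    origin = ET.SubElement(mods, q("originInfo"))
    # First and last capture dates, expressed as the start and end of a range.
    ET.SubElement(origin, q("dateCaptured"), encoding="w3cdtf", point="start").text = first_capture
    ET.SubElement(origin, q("dateCaptured"), encoding="w3cdtf", point="end").text = last_capture
    location = ET.SubElement(mods, q("location"))
    ET.SubElement(location, q("url")).text = url
    return mods


if __name__ == "__main__":
    # Placeholder values for illustration only.
    record = site_record("Example news site", "http://www.example.com/", "2002-02-07", "2002-02-08")
    print(ET.tostring(record, encoding="unicode"))
```

Because every field comes from data the crawler already records (URL and capture dates), a record like this could be produced for each base URL without manual input, leaving catalogers to refine titles and subject description.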

Additional Collections In Production

During 2002, the Library commissioned the following crawls:

Election 2002: A Web archive of United States Senate and House, state gubernatorial, and city mayoral election contests. There are approximately 4,000 Web sites in the collection, including candidate, newspaper, advocacy, party, government, and other sites. The crawl began in August 2002 and ended on December 1, 2002. Cataloging, interface design, quality review, and completion of the copyright notification process are underway. The collection of candidate Web sites will be available remotely in March 2003, and the remaining materials by September 2003. Partners include WebArchivist.org, Internet Archive, and the Pew Internet & American Life Project.

September 11 Remembrance: A Web archive of approximately 2,000 Web sites with content related to the September 11 anniversary, crawled from 11 September 2002 to 18 September 2002. Quality review is currently being conducted. At this point, there is no timeframe for making this archive available remotely or onsite at the Library of Congress, although some integration with the existing September 11 Web Archive is anticipated.

The 107th Congress: A one-time crawl of the Web sites of the 107th Congress during the week of 15 December 2002. A quality review is currently underway. The MINERVA team is investigating cataloging and access to these materials.

Conclusions

This paper has provided an update on Web preservation projects at the Library of Congress to date. With over 35,000 sites archived since 2000, the Library of Congress has come a long way; much has been learned about the selection, harvesting, and cataloging of Web sites since William Arms and the MINERVA Web preservation team began their investigations. The future will bring new challenges and collaborations: a pilot with OCLC to test cataloging tools, testing of new standards (METS/MODS), partnerships with experts and others in the archival and library community, research and development, and long-term preservation issues. The Library of Congress recognizes the urgency of the potential loss of at-risk material, while understanding the need to take a careful look at the challenges (technical and otherwise) that we face.

Today, information technologies that are increasingly powerful and easy to use, especially like those that support the World Wide Web, have unleashed the production and distribution of digital information. Such information is penetrating and transforming nearly every aspect of our culture. If we are effectively to preserve for future generations the portion of this rapidly expanding corpus of information in digital form that represents our cultural record, we need to understand the costs of doing so and we need to commit ourselves technically, legally, economically and organizationally to the full dimensions of the task. Failure to look for trusted means and methods of digital preservation will certainly exact a stiff, long-term cultural penalty. (Task Force on Archiving of Digital Information, 1996)

References

Arms, W. Y. (2001) Web Preservation Project Final Report. Retrieved January 15, 2003, from http://www.loc.gov/minerva/

Arms, W. Y. (2001) Web Preservation Project Interim Report. Retrieved January 15, 2003, from http://www.loc.gov/minerva/

National Library of Australia. PANDORA Archive. Consulted January 10, 2003, from http://pandora.nla.gov.au/index.html

National Research Council. Committee on an Information Technology Strategy for the Library of Congress, Computer Science and Telecommunications Board, Commission on Physical Sciences, Mathematics, and Applications. (2000). LC21: A Digital Strategy for the Library of Congress. Washington, D.C.: National Academy Press. Consulted January 15, 2003, from http://www.nap.edu/books/0309071445/html/

Sweden. Royal Library. Kulturarw3. Consulted January 10, 2003, from http://www.kb.se/kw3/ENG/Default.htm

Task Force on Archiving of Digital Information. (1996). Preserving Digital Information: Report of the Task Force on Archiving of Digital Information / commissioned by the Commission on Preservation and Access and the Research Libraries Group, Inc. Consulted January 10, 2003, from http://www.rlg.org/ArchTF/tfadi.index.htm

United States. Library of Congress. (2001). Library of Congress and Alexa Internet Announce Election 2000 Collection. Consulted January 10, 2003, from http://www.loc.gov/today/pr/2001/01-091.html

United States. Library of Congress. (2002). Metadata Object Description Schema (MODS) Official Web Site. Updated December 18, 2002. Consulted January 15, 2003, from http://www.loc.gov/standards/mods/

United States. Library of Congress. (2001). Library of Congress, Internet Archive, webarchivist.org and the Pew Internet & American Life Project Announce Sept. 11 Web Archive. Consulted January 10, 2003, from http://www.loc.gov/today/pr/2001/01-150.html