Nadia Arbach, Education Resources Manager, Moving Here, The National Archives; Martin Bazley, ICT4Learning; Nicky Boyd, Museum Learning and Evaluation Consultant, UK

http://www.movinghere.org.uk/schools/

Abstract

A user testing session for an on-line resource typically involves a user being observed (and possibly recorded) in a closed environment, guided by a tester through pre-set questions and assigned actions. The surroundings are not those in which the Web site would normally be used, and the user works alone. So what happens when a testing session is carried out in a classroom with a large number of people? Taking user testing into the user's environment, rather than bringing the user into closed surroundings, allows spatial, emotional and social factors to influence the way the Web site is used. Although the results may be more difficult to quantify, they may prove much more useful in understanding how the site will ultimately be used by its audiences. This paper examines user testing of on-line resources in a classroom setting, using as a case study the schools' e-learning resources developed for the Moving Here site (http://www.movinghere.org.uk). The Moving Here Schools evaluation programme involved teachers giving feedback about the site at various stages of development and then participating with their students in a classroom testing stage. In classroom testing sessions, a team of evaluation consultants observed classes working through the site with their teacher and found that this type of testing was more effective at highlighting changes that should be made to the site. The paper considers the expectations, challenges and opportunities associated with 'habitat testing' and suggests how museums can make this type of evaluation programme work in schools.

Keywords: e-learning, on-line learning, schools, research methods, evaluation, user studies

Introduction: Testing a Site in its 'Real Habitat'

Most on-line resource testing involves a potential user being observed in an environment chosen and closely controlled by an evaluator who guides the user through pre-set questions and assigned actions. Although this method of assessment can be useful in addressing top-level issues and can give insight into some of the design, navigation and content changes that may need to be made, it does not replicate the conditions under which the Web site would normally be used.

In this paper we contend that the primary aim of testing Web sites for use in schools should be to capture feedback not only on the usability, overall design, content and other aspects of the Web site itself, but also on the ways in which the Web site supports, or hinders, enjoyment and learning on the part of teachers and students in a real classroom environment. A range of factors, including group dynamics in the classroom, teachers' prior experience of using an electronic whiteboard and their background knowledge of the subject, can all have an impact on the overall experience. The feedback generated through 'real life testing', or 'habitat testing', is rich and highly informative for refining the site to improve the end-user experience, and it need not be expensive or overly time-consuming.

Our paper uses the Moving Here Schools site as a case study (http://www.movinghere.org.uk/schools). We will discuss the expectations, challenges and opportunities that arose during 25 in-class testing sessions undertaken as part of our evaluation programme. We also make suggestions about how this type of testing can enhance Web site evaluation programmes, not only with regard to gaining feedback on specific resources, but also as an awareness-raising experience for museum practitioners.

Fig 1: Teacher using Moving Here Schools with her class

The Moving Here Schools Site

The Moving Here site (http://www.movinghere.org.uk), launched in 2003, is the product of collaboration between 30 local, regional and national archives, museums and libraries across the UK, headed by the National Archives. The site explores, records and illustrates why people came to England over the last 200 years, and what their experiences were and continue to be. It holds a database of on-line versions of 200,000 original documents and images recording migration history, all free to access for personal and educational use. The documents include photographs, personal papers, government documents, maps, and images of art objects, as well as a collection of sound recordings and video clips, all accessible through a search facility. The site also includes a Migration Histories section focusing on four communities – Caribbean, Irish, Jewish and South Asian – as well as a gallery of selected images from the collection, a section about tracing family roots, and a Stories section allowing users to submit stories and photographs about their own experiences of migration to England. The site was funded by the BLF (Big Lottery Fund).

Fig 2: Home page of Moving Here Web site (http://www.movinghere.org.uk)

The Moving Here Schools site is a subsection of the greater Moving Here site, and was designed during a second phase (2005-07) of the Moving Here project. One aim of this phase is to ensure that stories of migration history are passed down to younger generations through schools. The Schools section therefore focuses on History, Citizenship and Geography for Key Stages 2 and 3 of the National Curriculum (ages 8 to 14), and includes four modules: The Victorians, Britain Since 1948, The Holocaust, and People and Places. Designed for use with an interactive whiteboard, the resources on Moving Here Schools include images and documents, audio and video clips, downloadable activity sheets, on-line interactive activities, a gallery of images, and links to stories of immigration experiences that have been collected by the Community Partnerships strand of the project. Funding for the Schools section is provided by HLF (Heritage Lottery Fund). The Schools site launches in March 2007 as a new section of the Moving Here site.

Fig 3: The Moving Here Schools home page (http://www.movinghere.org.uk/schools)

Moving Here Schools Evaluation Programme: A Two-Phase Approach

A comprehensive evaluation for Moving Here Schools was built into the project from the beginning and was allocated £20,000. This came to approximately 6.7% of the £300,000 budget allotted to the Schools portion of the project (or 1.7% of the total project budget of £1.2 million).
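The percentages above follow directly from the stated figures. As a quick check, here is a minimal Python sketch that uses only the amounts given in this paper; the constant and function names are illustrative and not part of any project tool.

```python
# Figures (in GBP) as reported for the Moving Here Schools evaluation.
EVALUATION_BUDGET = 20_000
SCHOOLS_BUDGET = 300_000
TOTAL_PROJECT_BUDGET = 1_200_000


def share(part: float, whole: float) -> float:
    """Return `part` as a percentage of `whole`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


schools_share = share(EVALUATION_BUDGET, SCHOOLS_BUDGET)
project_share = share(EVALUATION_BUDGET, TOTAL_PROJECT_BUDGET)
print(f"Evaluation share of Schools budget: {schools_share}%")        # 6.7%
print(f"Evaluation share of total project budget: {project_share}%")  # 1.7%
```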

The evaluation programme included two distinct phases: a period of preliminary testing sessions, during which teachers participated in conventional user testing, and a period of in-class testing, during which teachers used the Moving Here Schools site directly with their pupils in their own classrooms. In planning the evaluation process, the team felt that a combination of methodologies might produce more fruitful results than any one methodology alone. The same idea is suggested in Haley Goldman and Bendoly's (2003) study of heuristic evaluation used in tandem with other types of evaluation to test museum Web sites.

Six teachers from the London area formed the evaluation team, selected and partially supported by the LGFL (London Grid for Learning). Four of them participated in both the preliminary testing sessions and the in-class sessions.

Early User Testing of the Draft Site: Teachers Felt They Were Listened To

Two preliminary testing sessions were held at the National Archives in February and June 2006 to review the first and second drafts of the Schools site. The Education Resources Manager led the sessions and two other members of the Moving Here team participated, mainly recording information. The user environment (the ICT suite at the National Archives), as well as the methodology, was based on conventional user testing, bringing the testers into a closed environment and observing them as they interacted with the draft site. For each of the two observation sessions, the teachers spent a full day looking at the draft versions of the module they had been assigned and commenting on navigation, design, subject coverage, style, tone and other elements of the site, observed by three Moving Here team members. They also participated in a group discussion at the end of the day. Their feedback was written into reports and used to make improvements to the site.

These preliminary testing sessions proved invaluable to improving the quality and usability of the site. Between the February session and the June session, major changes were made as a direct result of teacher feedback, substantially improving the site. The main changes made were to reorganize the materials into shorter, blockier lessons rather than longer, linear lessons; to merge two related lessons into one; to shorten most lessons and lesson pages; and to redevelop some of the interactive activity specifications.

Fig 4: The Moving Here Schools Web site in development

In the June session teachers noted that their input had been followed up and commented favourably upon the fact that their feedback had been used to improve the site (one teacher said that even though she had been asked for her opinion about Web sites before, she had never seen her suggestions put into practice before this particular project). After the June session, changes were again made to the site, including design modifications, changes to interactive activities that had already been programmed, and a few more changes (including additions) to content.

Although this amount of testing could be considered sufficient, especially since it yielded such fruitful results, Moving Here also included in-class testing in its programme. This approach retains the advantages of 'ordinary' user testing but builds on them by taking account of the social dynamics and practical problems that influence the use of the site, so as to ensure that the site is usable in the classroom rather than merely in a controlled user testing environment. This addition to the evaluation programme proved even more useful than the conventional user testing.

The In-Class Testing Programme: Building on Teacher Involvement

Two evaluation specialists (Martin Bazley and Nicky Boyd) were hired as the evaluation team to carry out the in-class testing programme. In classroom testing sessions, teachers were observed, at their schools, using the Moving Here site with their students. The schools included two primary schools in the borough of Newham, London, a secondary school in Bethnal Green, London, and a secondary school in Peckham, London – all neighbourhoods with culturally diverse communities, a high proportion of immigrants and a large number of people whose first language is not English.

Four of the original six teachers were involved, and the evaluation team went into their classrooms 25 times between October and December 2006: five sessions per teacher, except for one teacher who did a double load, testing two modules instead of one for a total of 10 sessions. The teachers were paid £300 each for five sessions of in-class testing, working out at £60 per session. This closely follows the standard amount of between $50 and $100 US for one session suggested by Steve Krug in his seminal work on user testing, 'Don't Make Me Think!' (2000). The teacher who tested two modules received double payment, so the total spent on teachers was £1,500. The evaluation team was paid approximately £18,500 for the five sets of observations and session reports, plus a final report.
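The session and payment figures can be reconciled the same way. The sketch below assumes, as the text describes, four teachers each testing five sessions per module, with one teacher testing two modules; the ordering in the hypothetical `modules_per_teacher` list is arbitrary, and only the counts matter.

```python
# In-class testing load and payments, as described in the paper.
SESSIONS_PER_MODULE = 5
FEE_PER_MODULE = 300          # GBP per teacher for each set of five sessions
EVALUATOR_FEE = 18_500        # GBP, approximate, as reported
modules_per_teacher = [1, 1, 1, 2]  # hypothetical order; one teacher tested two modules

total_sessions = sum(m * SESSIONS_PER_MODULE for m in modules_per_teacher)
total_teacher_fees = sum(m * FEE_PER_MODULE for m in modules_per_teacher)
fee_per_session = FEE_PER_MODULE / SESSIONS_PER_MODULE
evaluation_total = total_teacher_fees + EVALUATOR_FEE

print(f"Sessions: {total_sessions}")               # 25
print(f"Teacher fees: £{total_teacher_fees:,}")    # £1,500
print(f"Fee per session: £{fee_per_session:.0f}")  # £60
print(f"Evaluation total: £{evaluation_total:,}")  # £20,000
```

The last line confirms that teacher fees plus the approximate evaluator fee account for the £20,000 evaluation allocation, and that five module sets (four teachers, one testing twice) yield 25 sessions.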

The evaluation team met with each of the participating teachers in advance to agree on which lessons to use for the in-classroom evaluation, to brief the teachers on what was required during the session and to agree on the procedure for arriving during the school day. In consultation with the Education Resources Manager, the evaluators produced an evaluation plan containing a set of questions to ask every teacher, to make sure each area and issue was covered, plus an in-class observation checklist. The questions covered learning outcomes, tone, length of lesson, order of pages, images, design, navigation, accessibility, activity sheet issues, issues with interactives, children's engagement, and other improvements that teachers thought might be useful.

The teachers submitted lesson plans with intended learning outcomes for each session according to the National Curriculum. Immediately after each session a written session report was sent to the Education Resources Manager, who used the findings in each report to implement changes to the site while the series of observations was still going on. In some cases, changes requested by a teacher were in place in time for the next observation.

The Site Was Ready – But Not Too Ready

At the beginning of the testing period the site was not in its finished form: most pages were not yet laid out properly and therefore looked somewhat incomplete, and some images and interactives had not yet been embedded or developed. From a testing point of view this is not ideal, since potential users cannot always envisage the completed Web site and may therefore react slightly differently (the overall attractiveness of a site is an important factor in engaging an audience, especially schoolchildren). At a project level, however, given that the aim of user testing is to improve the site by making changes based on real feedback, it would not make sense to test a finished Web site. In this case the balance was about right, and the additional benefit of being able to make changes on the fly after each session report made up for the site not being fully ready.

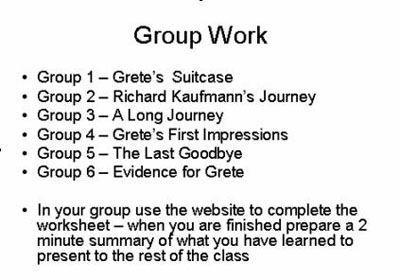

It was important for teachers to decide which units they were going to test, in order to fit in with the school curriculum, so that the testing could benefit their pupils and justify the use of their valuable teaching time. The evaluators felt that this helped the teachers feel more ownership of the process. In the same vein, teachers appreciated the 'ready-made' approach of the site, which is flexible enough to be adapted by teachers for particular situations (learning needs, ability level, time available, equipment available, whether the equipment is working, etc.). There was some discussion within the project team and with teachers about whether to offer detailed lesson plans with specific timings, lists of resources, detailed curriculum links and so on. A couple of teachers said they thought detailed lesson plans might be useful for inexperienced teachers, but everyone said such plans would need significant changes to suit their own situation anyway. The team therefore decided to avoid providing largely redundant text, and instead to offer a series of independent activities based on original sources, with suggested key questions and some worksheets.

Fig 5: A PowerPoint plan for one teacher's testing session

Teachers Rely on Their Whiteboards

The Moving Here Schools site was designed to be used with interactive whiteboards, and as such, these played a key role in every lesson. This was partly because the teachers prepared lessons using the Web site, but also because several of the teachers on the evaluation team were their school's ICT Coordinator, meaning that they were predisposed to use technology perhaps more often and more thoroughly than their colleagues might. All the teachers said they use the interactive whiteboard during almost every lesson.

The way teachers commonly deploy the Web site's resources on the whiteboard has important implications for the design of on-line resources for use in schools. In the case of Moving Here Schools, the whiteboard was used mainly at the beginning of the lesson to show a 'starter' image or activity, at the end of the lesson to show material reinforcing the main points covered, and to pull together responses in a 'plenary'.

Other technical tools were used in the classroom: one teacher used a digital slate that allowed her to write notes on her (standard) whiteboard from anywhere within the classroom. Another used a new resource that had just been added to her classroom: Activote technology, which is similar in nature to the 'voting' function on shows such as 'Who Wants to be a Millionaire?' The teacher using this technology found that it worked well with the whiteboard and added value to the material covered in the Moving Here Schools site.

Fig 6: Students sitting in front of the Whiteboard

Fig 7: Pupil using Activote technology

Laptops Can Be a Delight – Or Get in the Way

Where laptops were used, pupils seemed to enjoy being able to work individually through the interactive elements on their own laptops. However, practical barriers often arose relating to logging in, finding files on other drives, saving work, lack of relevant software or plug-ins, or other technical difficulties, all of which interfered with the learning. Where laptops are wirelessly connected to the Internet, some children get distracted, visiting irrelevant or inappropriate Web sites. One teacher said explicitly that he did not like using the laptops, but had been instructed to do so by his school's Senior Management Team in order to fulfil ICT requirements.

Fig 8: Pupils using laptops

In the 'Real Habitat', Background Noise, Social Dynamics and Environment Make a Big Difference

The Environment

Besides the elements that originated from the site and its contents, the environment had a significant impact on how the site was used. For example, various disturbances in the classroom (excess noise, students coming in late, interruptions at the door, etc.), as well as logistical issues (time taken to turn on laptops and log in, time taken to log in at the ICT suite, time taken to find the correct Web site and the page within it, difficulties with saving documents to students' folders and printing them out), all affected how well students worked with the Web site. In one case, a class of Year 7 students became overly excited when visiting the ICT suite for the first time with the teacher, could not concentrate on the activity because other classes were also using the suite, and was unable to access a video because the school's firewall blocked it. This resulted in a relatively uneven learning experience, but it was also instrumental in showing the evaluators which of the activities attempted during that class were the most engaging, and would therefore be most likely to hold students' attention during periods of high disturbance.

Fig 9: Students in ICT suite

Social Dynamics

Another important element that the evaluators were able to capture only in a classroom setting was how students worked with each other. The dynamic within each group contributed to how much the students learned. Although this affects a group's learning not only from a specific Web site but in all of its classroom work, it is important to note how it affected the testing. For example, some groups worked extremely well together on an activity sheet, but this may have been due not only to their intrinsic interest in the activity but also to external factors (threats of detention if they talked too much, the possibility of bad marks if they did not complete the activity, the incentive of a free lunch for the group that handed in the best activity sheet).

Interestingly, the evaluators' presence did not seem to distract students. Initially, the evaluators thought that their presence, even sitting discreetly at the back of the room, might cause students to react differently than they might normally have done. However, during the in-class testing sessions, evaluators found that their presence was either ignored or considered normal by the children. Reasons for this might be that students are accustomed to having more than one adult in the classroom at a time – teaching assistants might be a constant presence, other teachers might interrupt the class, and OFSTED inspectors (the Office for Standards in Education, the UK's official school inspection system) might visit classes to conduct inspections.

How In-Class Observation Scores Over Conventional User Testing

The in-class testing was useful in picking up elements that were not, and would not have been, flagged in the conventional user testing scenario. Even though the testers were the same teachers who had participated in the two preliminary user testing sessions, they did not pick up on some of the elements that needed to be fixed or changed until they were actually using the site in the classroom. It was only when practical implications became apparent that they noticed these items needed to be changed.

All of the following issues had been present during the conventional user testing sessions but had not been singled out as needing modification until the in-class testing sessions:

content: when they read the text out loud or asked students to read it, teachers realized that some of the text was pitched at too difficult or complex a level, even though it had seemed fine when read on screen

images: teachers realized that some of the images they had seen on lesson pages were not actually useful or pertinent to their teaching, and so should be removed or moved to different pages

activity sheets: activity sheets did not have spaces for students to put their names, which caused confusion when they were printing out their work – something teachers hadn't noticed when looking at the content of the sheets

interactive activities: although they took up a fairly large amount of screen space when they were being viewed by a single user on a single screen, interactive activities were too small for some children to see from the back of the class and needed to be expandable to full-screen size

navigation: the breadcrumb trail needed to go down one more level in order for teachers and students to immediately recognize where they were within the site

navigation: the previous/next buttons and the page numbers appeared only at the bottom of the screen, sometimes below the 'fold line', which made it difficult for users to know how to move from one page to the next

In fact, sometimes the issues that came up during classroom testing directly opposed what teachers had told us in initial user testing sessions. For example, during user testing teachers had said that the breadcrumb trails were easy to use and helped with navigation, but when they began using the site in the classroom, they found that this was not always the case. Teachers needed to try out the site with their pupils to see what really worked.

Engaging Pupils – What Worked Best?

Of the materials available on the Moving Here Schools site, the ones that engaged pupils most were images and interactives. Images inspired much discussion amongst the pupils; for example, one photograph of a young girl arriving at Harwich, Essex, after travelling on a Kindertransport train led a group of girls to discuss evacuation in detail and relate it to the children in the film The Chronicles of Narnia, which they had just seen at the cinema.

Interactives also worked well, and pupils became particularly engaged when interactives encouraged them to understand the real lives of people in certain situations. One activity in the Holocaust lesson prompted these comments:

"It's got lots of information – the thoughts of a Jew, it gives us a picture"

"It's taking you through the story – I like it"

"Tells you a lot of information in such a short piece of writing"

The teacher felt that "if you want them to read it you've got to [make it interactive] to encourage them to go through it".

It was also obvious that children enjoyed the activities that happened to relate to the cultural groups of their class demographic, and also the activities that had specific relevance to children. Teachers suggested ways of making some of the activities even more child-focused than they were already. For example, one teacher felt that a film of the factories in one of the Victorian lessons would be much more engaging if the clips focused specifically on the children in the factory.

Fig 10: An example of one of the interactive activities in the Moving Here Schools site

Addressing the Skeptics

'This classroom user testing is all very well, but...'

The following questions and answers address issues that may be of concern to those who have not conducted 'real habitat' testing before.

How can you see everything in a class of 30 children – don't you miss things?

With one-to-one observation you might get 'everything' – but that's quite simply because there's less happening – or, rather, different factors are important. The team's observations identified points for improvement that will have a major effect on successful use of the Web site in a classroom, which would simply not have arisen in one-to-one testing.

You didn't measure the learning impact, so how do you know it will improve learning?

This was a point the team considered early on in the development of the evaluation strategy. It is notoriously difficult to assess the learning outcomes arising from a given learning resource or situation, even with a useful framework such as Inspiring Learning for All (http://www.inspiringlearning.org.uk). One commonly agreed success factor, however, is the level of engagement of students (and the teacher). The team decided to monitor the degree of engagement while certain elements of the site were being used (taking into account any extraneous or other contributing factors in a way that is only possible while actually sitting with the class). At the end of each class the evaluators also asked each teacher to comment about the extent to which they felt the learning outcomes had been achieved (the team knew what these were for each lesson as the teacher had previously sent us the lesson plan). As anticipated, there was a strong correlation between these two measures, and each yielded very useful feedback.

The other justification for this approach is that it allows observers to see quite easily whether something is working or not, and it brings to light a different set of usability issues: ones that can only be picked up by observing in a real classroom context.

Doesn't using a specific class with particular needs skew the results?

In other words, if you are observing in a specific class with, say, low ability or poor levels of English, equipment not working, behaviour issues, and so on, how can you be sure your results are as reliable as those obtained in a 'neutral' environment?

First, there is no such thing as a neutral environment, and any test will be subjective, not least because of the particular interests and abilities of the subjects themselves. Secondly, and more importantly, the overall aim of this testing is to ensure the Web site works well in classrooms, and this means seeing the effect that factors like those mentioned above have on the way the Web site is used. Although ideally one would test in more than one classroom (as in this project), just one session in one classroom, however unique the setting might be, reveals more about the required changes than one session in a neutral environment, because the social dynamics and educational imperatives are simply not there to be observed in neutral surroundings.

How can I do this myself if I don't have an educational background?

It is true that deciding which aspects are important, and how to interpret them during observation, is best done based on knowledge of the way things work in schools. However, if the only affordable way of conducting user testing is by doing it yourself without an educational background, it is still worth doing, not just because of the rich feedback that will inform site improvements, but also for the insight it will offer you into the school environment. This sort of interaction also sometimes leads to future project ideas, closer relationships with local schools, etc. Indeed, it can be a good idea to sit in on one observation session to see how it works.

Can't my Web developer do the testing for us?

If you are using an external Web developer, it is probably best to avoid getting them to do the user testing, as there is an inherent conflict of interest leading to a likelihood of minimising the changes required, and also because they are likely to focus more on the technical aspects of the site than on its effect on the teacher and pupils themselves. For the same reason, it would be preferable to get an external or unrelated evaluator for the project rather than visit a classroom yourself if you are the producer or writer of the content. It is always difficult to take criticism and a neutral party will not have any issues surrounding ownership of the material.

There are, of course, exceptions to this generalisation. On this project, the Education Resources Manager attended five of the 25 classroom testing sessions – always accompanied by one of the other evaluators – and was able to suspend personal ownership of the site materials in order to acknowledge the teachers' and pupils' comments. In a similar project by LINE Communications Group to build learning resources about China, the producer and writer of a pilot project site attended eight classroom sessions herself as evaluator, even though she had prepared most of the material. When interviewed, she stated that her belief in the iterative process made it possible for her to suspend her own feelings about the site material in order for the users' comments to be received (Howe, 2007).

I don't have the time or budget to do this!

It's true that it can cost money to conduct user testing in a classroom – but then again, it need cost no more than conventional user testing. In conventional user testing, an acceptably budget-conscious way of conducting the testing is to have (preferably neutral) staff administer it, hand-writing the notes or using in-house recording equipment to record the user's experience, and to give the tester a small token of appreciation such as a gift voucher. In an in-class testing scenario, one person could attend a one-hour class session in a school, giving the teacher the same small token payment and taking notes or using recording equipment (taking care not to breach rules about the recording of children) to make notes of the issues uncovered.

The Moving Here Schools evaluation programme was built into the project plan, yet still used only 6.7% of the total spending on the Schools site. The team would recommend spending between 5 and 10% of your total project budget on user testing, ideally a combination of conventional and 'real habitat' testing, and planning it into your project from the start. Once the cost of not conducting effective user testing is taken into account, user testing is usually worth every penny. If you can only afford one test, do one. Krug makes the point best when he says 'Testing one user is 100 percent better than testing none. Testing always works. Even the wrong test with the wrong user will show you things you can do to improve your site' (2000).
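To turn the 5 to 10% recommendation into a concrete figure when planning a project, a small helper such as the hypothetical `recommended_testing_budget` below could be used. It is only a sketch, not a costing model: it applies the authors' percentage range to whatever budget is passed in.

```python
def recommended_testing_budget(project_budget: float,
                               low_pct: int = 5,
                               high_pct: int = 10) -> tuple[float, float]:
    """Return (minimum, maximum) user-testing spend for a project budget.

    The default 5-10% range is the authors' recommendation; the function
    itself is a hypothetical planning helper.
    """
    if project_budget < 0:
        raise ValueError("project_budget must be non-negative")
    if not 0 <= low_pct <= high_pct <= 100:
        raise ValueError("expected 0 <= low_pct <= high_pct <= 100")
    # Integer percentages keep the arithmetic to a single rounded division.
    return project_budget * low_pct / 100, project_budget * high_pct / 100


# Example: the Moving Here Schools budget of £300,000.
low, high = recommended_testing_budget(300_000)
print(f"Recommended user-testing spend: £{low:,.0f} to £{high:,.0f}")
print("Moving Here's £20,000 within range:", low <= 20_000 <= high)
```

Applied to the £300,000 Schools budget, the range is £15,000 to £30,000, and the £20,000 actually spent on evaluation falls inside it.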

So what's the best way to go about it?

Unlike conventional user testing, in which users come in for one session and may not be acquainted with the testers at all, it is important to build relationships for in-class testing. For the Moving Here team, the key was to take the time to get to know the teachers before going into their classrooms. The trust that was built during the preliminary testing period – and especially the trust that was earned when teachers understood that the team was taking their suggestions into account – was one of the most important elements leading to successful in-class testing.

Although teachers are continually being observed and assessed, it is very important to reassure teacher-testers that you are looking at how they use the Web site in order to make improvements, not observing the quality of their teaching skills. The more relaxed the teacher is with you, the better the evaluations will be.

When you are planning in-class testing, it is important to decide in advance what aspects of the site are changeable (within realistic cost and time parameters) and what aspects can actually be usefully measured. When conducting observations, it is simply not possible to record everything, even with video recording, as every observation is necessarily selective and therefore subjective. Deciding what key points to draw from a particular observation – and acting on them as soon as possible – reduces the possibility of misinterpretation of a situation or issue at a later date.

Conclusions

The team felt that it was important for those involved in museum Web sites to engage with the personal experience of pupils and teachers as far as possible when developing resources for them. This approach greatly assisted in making the Moving Here Schools site usable and engaging for its audience. Whilst the improvements suggested during conventional testing were useful because they pointed out what teachers were expecting from the site, the changes made as a result of the in-class testing were even more effective because they ensured that the site is as usable as possible in real classroom situations.

As a result of the testing sessions, all of the teacher-testers said they would be much more likely to use the site again. Our conclusion is that where possible, it is best to evaluate a site in the situation it is designed for. We believe that for a site designed for schools, the most effective observations will be made in a classroom situation.

Acknowledgements

The Moving Here team would like to thank the teachers and students who allowed us to visit their classrooms and observe them using the Moving Here Schools site.

References

Haley Goldman, K. and L. Bendoly (2003). Investigating Heuristic Evaluation: A Case Study. In D. Bearman and J. Trant (eds), Museums and the Web 2003: Proceedings. Toronto: Archives & Museum Informatics, 2003. Last updated March 8, 2003, consulted January 26, 2007. http://www.archimuse.com/mw2003/papers/haley/haley.html

Howe, P. (2007). Personal interview conducted by Nadia Arbach. London: 16 January 2007.

Krug, S. (2000). Don't Make Me Think!: A Common Sense Approach to Web Usability. Berkeley, California: Circle.com Library, New Riders Publishing.

Cite as:

Arbach, N. et al., Bringing User Testing Into the Classroom: The Moving Here In-Class Evaluation Programme, in J. Trant and D. Bearman (eds.). Museums and the Web 2007: Proceedings, Toronto: Archives & Museum Informatics, published March 1, 2007 Consulted http://www.archimuse.com/mw2007/papers/arbach/arbach.html

Editorial Note