Franco Tecchia, Emanuele Ruffaldi, Antonio Frisoli, Massimo Bergamasco, Scuola Superiore S.Anna; Marcello Carrozzino, Institute for Advanced Studies, Italy

Abstract

The deployment over the Web of virtual expositions of accurately rendered, high-quality 3D models is today a common practice in many cultural heritage and arts initiatives. In the specific case of virtual museums, where the nature of the content opens possibilities that go beyond those of a single, localised physical exhibition, making the project's collection of digital models available on the Web becomes almost mandatory. Although desirable, presenting interactive content over the Web is not a simple task: much of the current generation of 3D Web technology, being tailor-made mainly for graphics-only applications, often imposes severe limitations on user interaction.

As a result of these technological limitations, the virtual exhibition often falls short of what the user expects of it, and very little has been explored so far about the potential benefits that the sense of touch could bring to the interaction paradigm. We report here the results obtained by combining high-performance 3D graphics with haptic interaction in several museum installations, and present a new generation of Web technology that makes it possible to deploy this type of content on the Web.

Keywords: virtual environments, haptic interaction, 3D models, Web 3D, force-feedback

Introduction

Virtual Environment (VE) technology was originally developed within the field of Computer Graphics research, but today it has become a fully independent research topic. VEs are computer-generated, simulated environments, with which the human operator can interact through different sensory modalities.

Real-life applications for VEs are emerging at an increasing pace, although still in very specialised contexts. Some fields, such as the Industrial and Medical sectors and the Entertainment and Gaming industries, have been particularly receptive to VE concepts and techniques, but VE technologies are rapidly gaining traction in Art and Cultural Heritage as well: not only for the conservation and security of artifacts or for communication and education, but also for the creation of new forms of art and new paradigms of artwork creation through interaction.

Interacting with VEs requires setting up an interface layer between the human user and the Virtual Environment.

This interface layer can be subdivided into distinct channels, and VEs commonly provide feedback on the visual, acoustical, haptic and inertial channels, although other senses, like the olfactory channel, are currently under investigation (Chen 2006).

The Museum of Pure Form

One of the most recent and interesting VE applications developed for the arts is the Museum of Pure Form (Bergamasco 1999), a system aiming to explore new paradigms of interaction with sculptural pieces of art.

Funded by the European Commission under the 5th Framework Programme "Digital Heritage and Cultural Content" key action area (IST 2000-29580), the Museum of Pure Form (MPF) starts from a basic constraint of traditional museums: visitors may only observe the exhibited statues because, for security reasons, they are not allowed to touch them. Haptic perception (Frisoli 2005), on the other hand, is the most immediate way of interacting with sculptures, allowing the observer to perceive the concept of space that the artist impressed on the sculpture while shaping it. Perceiving sculpture by sight alone is a limitation that prevents the observer from fully appreciating the artistic value and intrinsic beauty of these works. Moreover, blind and visually impaired users are denied any enjoyment of them. Through Virtual Reality, the Museum of Pure Form offered a way to go beyond such limits by giving the haptic perception of artistic forms the same essential role it had for the artist who created them.

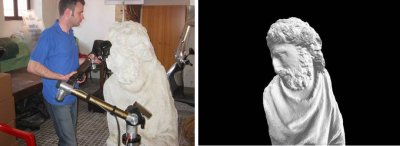

Fig 1: Examples of haptic interfaces used in the Pure Form project

The use of innovative technologies (Figure 1) allows users to perceive suitable tactile stimuli (Frisoli 2004) that simulate the contact of the hand with the digital copy of a real statue. In addition, the realism of the virtual simulation is increased by integrating stereoscopic visualization of the digital models, giving users the realistic feeling of touching the surface they see in the space beneath their own hands.

Several museums were involved in the project. The Galician Centre for Contemporary Arts (CGAC) of Santiago de Compostela (Spain), the Museo dell'Opera del Duomo (OPAE) in Pisa (Italy) and the National Museum (NM) of Fine Arts of Stockholm (Sweden) actively participated by hosting and organizing public temporary exhibitions. Other associate museums, including the Conservation Centre at National Museums Liverpool (UK) and the Petrie Museum of Egyptian Archaeology, London (UK), contributed to enriching the digital collection of works of art.

A selected set of sculptures from the collections of the partner museums was digitally acquired to create a database of artwork copies, which forms the core of a new Web-based computer network among partner museums and other European cultural institutions. The Virtual Repository of sculptures includes works from the Centro Galego de Arte Contemporanea (Santiago de Compostela, Spain), the Opera Primaziale of Pisa (Italy), the National Museum of Fine Arts of Stockholm (Sweden) and the Petrie Museum of Egyptian Archaeology of London (UK). Several sculptures, belonging to different historical periods, were digitized through laser scanning (Figure 2), a procedure that acquires geometric data for a very large set of points on the sculpture's surface.

Fig 2: Sculptures acquisition using 3D laser scanning

The point "clouds" obtained in the acquisition phase undergo a processing stage (Desbrun 2002; Debevec 1998) to obtain a graphical 3D reproduction of the real work of art, which is then post-processed to produce the final polygonal mesh (Figure 1 - right). The geometric complexity of the meshes is then reduced to make the 3D models suitable for real-time haptic and graphical rendering algorithms.
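The paper does not say which simplification algorithm was used to reduce the meshes. As an illustration only, the following Python sketch shows one simple and widely used technique, uniform-grid vertex clustering: all vertices falling in the same grid cell are merged into their average position, and triangles that collapse are discarded. The function name `cluster_decimate` and its `cell_size` parameter are hypothetical; production pipelines typically prefer error-driven methods (such as quadric edge collapse) that preserve detail better.

```python
from collections import defaultdict


def cluster_decimate(vertices, triangles, cell_size):
    """Simplify an indexed triangle mesh by uniform-grid vertex clustering.

    vertices:  list of (x, y, z) tuples
    triangles: list of (i, j, k) vertex-index triples
    cell_size: edge length of the cubic grid cells (larger = coarser mesh)
    """
    # Map every vertex to the integer coordinates of the grid cell containing it.
    cell_of = [tuple(int(c // cell_size) for c in v) for v in vertices]

    # Accumulate the sum of positions and the vertex count per occupied cell.
    sums = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for (x, y, z), cell in zip(vertices, cell_of):
        acc = sums[cell]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1

    # Emit one representative vertex (the cell average) per occupied cell.
    cell_index = {}
    new_vertices = []
    for cell, (sx, sy, sz, n) in sums.items():
        cell_index[cell] = len(new_vertices)
        new_vertices.append((sx / n, sy / n, sz / n))

    # Remap triangles, dropping degenerate ones (two corners in the same cell)
    # and duplicates produced by the merge.
    seen = set()
    new_triangles = []
    for i, j, k in triangles:
        a = cell_index[cell_of[i]]
        b = cell_index[cell_of[j]]
        c = cell_index[cell_of[k]]
        if a == b or b == c or a == c:
            continue
        key = frozenset((a, b, c))
        if key in seen:
            continue
        seen.add(key)
        new_triangles.append((a, b, c))
    return new_vertices, new_triangles
```

Increasing `cell_size` trades geometric detail for a smaller mesh; the appropriate value would depend on the update rates required by the haptic and graphical renderers.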

Technology Demonstration

The concepts of the Pure Form project were presented in two ways. First, a temporary physical installation was shown to the public at the sites of the participating museums, where users were able to interact with virtual sculptures by means of large haptic interfaces and stereoscopic visualization.

Second, a project Web site using advanced 3D technology was created (hosted at http://www.pureform.org). There, all the digitized sculptures from the various museums are accessible in a single, unified virtual exposition. A distinctive feature of the Pure Form Web site is that haptic interaction, initially possible only at the physical museum installations, has recently been added to the Web exposition as well.

Installations at the Museums

In the case of the physical expositions, two purpose-built haptic interfaces were validated during the project (Loscos 2004) and installed in temporary exhibitions at the partner museums, where visitors, immersed in the Virtual Environment, could explore the digital shapes both visually and haptically. In these museum exhibitions the Virtual Environment simulation is displayed on a passive stereoscopic back-projection screen with circular polarization (Figure 3). The projection screen is mounted on a frame and the haptic interface is placed in front of it. Because of the haptic interaction, the installation is designed for a single user, but the size of the screen also allows an external audience wearing polarized glasses to watch the virtual tour of the museum.

Fig 3: An example of Pure Form physical installation

The Museum of Pure Form has had a number of deployments in cultural heritage institutions across Europe, and more than 1600 users have explored the potential of haptic interfaces for engagement with artworks.

The Project Web Site

Together with a conventional description of the project, the Web site of the Museum of Pure Form also offers interactive 3D exploration in the section "The Virtual Gallery" (Figure 4 - left), through an ActiveX Control for browsers based on the XVR technology (described in the next section). Here users can access a reduced-complexity version of the same application hosted in the museum installations, in which the 3D models have a slightly lower level of detail to keep data size and bandwidth requirements within reasonable limits. The earlier version of the Web site limited interaction to choosing the sculpture to be explored from a list of icons, with restricted free camera movement by means of mouse and keyboard. Another limitation, compared with the museum installations, is the lack of stereoscopic display.

Fig 4: The Pure Form Web site supports haptic interaction

The Virtual Gallery section of the Web site has recently been updated with advanced interaction functionality based on haptic feedback (Figure 4 - right). It has therefore gone from being a simple demonstrator of the project's visual achievements to becoming an integral part of the project technology itself. In fact, it can be considered a distributed installation that enables users scattered around the world to experience the added value of such a museum, which is undoubtedly the haptic exploration of digital sculptures.

Innovative 3D Technology

Both the physical installation and the Web site of the Pure Form project are based on a software technology for Virtual Environments called XVR (Carrozzino 2005).

Composed of a set of high performance modules, XVR is a software technology for the rapid prototyping of Web-based VR applications. It integrates in a single framework a flexible and powerful 3D scene-graph, support for 3D Audio and sound effects, user input handling, real-time physics, and network communication support.

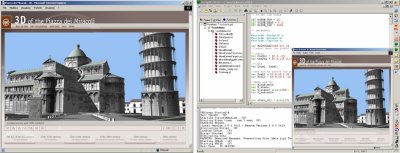

Fig 5: Web3D development using the XVR technology

Originally created for the development of Web-enabled virtual reality applications, XVR has evolved in recent years into an all-round technology (Figure 5) for interactive applications. Besides Web 3D content management, XVR now supports a wide range of VR devices (such as trackers, 3D mice, motion capture devices, stereo projection systems and HMDs) and uses a state-of-the-art graphics engine for real-time visualization of complex three-dimensional models, making it suitable even for advanced off-line VR installations. XVR applications are developed using a dedicated scripting language whose constructs and commands are targeted to VR, allowing developers to handle 3D animation, positional sound effects, audio and video streaming and 6DOF user interaction. Currently XVR is distributed as free software. More information on this technology can be found at http://wiki.vrmedia.it.

Run-Time Execution Of XVR Contents

In its current form XVR is an ActiveX component running on the various Windows platforms, and can be embedded in several container applications, including the Web browser Internet Explorer.

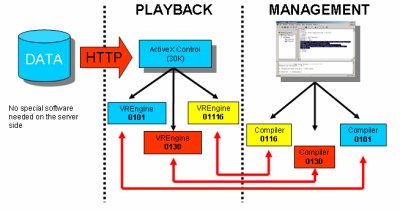

XVR (Figure 6) is divided into two main modules: the ActiveX Control module (about 30 KB), which hosts the most basic components of the technology, such as the version check and the plug-in interfaces, and the XVR Virtual Machine (VM) module (currently around 550 KB), which contains the core of the technology: the 3D graphics engine, the multimedia engine and all the software modules managing the other built-in XVR features.

Fig 6: XVR functional scheme

Depending on the application environment, it is also possible to load additional modules that offer advanced functionality, such as support for VR devices like trackers and gloves. These modules are kept separate so that Web applications, which usually do not need such advanced features, do not incur additional download time. Like many other VMs, the XVR-VM contains a set of bytecode instructions, a set of registers, a stack and an area for storing methods. The XVR Scripting Language (S3D) is used to specify the behaviour of the application, providing the basic language functionality and the VR-related methods, available as functions or classes. The script is then compiled into bytecode, which is processed and executed by the XVR-VM.
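The paper does not document the XVR-VM's actual instruction set. The toy Python sketch below only illustrates the general execution model described above, a virtual machine that decodes a bytecode stream and operates on a stack and a register file. The opcodes (`PUSH`, `ADD`, `MUL`, `STORE`, `LOAD`, `HALT`) and their encoding are invented for illustration and are not those of XVR; the method storage area is omitted.

```python
from enum import IntEnum


class Op(IntEnum):
    """Hypothetical opcodes for a toy stack machine."""
    PUSH = 0   # push the following operand onto the stack
    ADD = 1    # pop two values, push their sum
    MUL = 2    # pop two values, push their product
    STORE = 3  # pop a value into register[operand]
    LOAD = 4   # push register[operand]
    HALT = 5   # stop and return the machine state


def run(bytecode, num_registers=4):
    """Execute bytecode and return the final (stack, registers)."""
    stack = []
    registers = [0] * num_registers
    pc = 0  # program counter
    while True:
        op = Op(bytecode[pc])  # decode; raises ValueError on an unknown opcode
        pc += 1
        if op is Op.PUSH:
            stack.append(bytecode[pc])
            pc += 1
        elif op is Op.ADD:
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op is Op.MUL:
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        elif op is Op.STORE:
            registers[bytecode[pc]] = stack.pop()
            pc += 1
        elif op is Op.LOAD:
            stack.append(registers[bytecode[pc]])
            pc += 1
        elif op is Op.HALT:
            return stack, registers


# What a compiler might emit for a script statement like "r0 = (2 + 3) * 4".
program = [Op.PUSH, 2, Op.PUSH, 3, Op.ADD, Op.PUSH, 4, Op.MUL,
           Op.STORE, 0, Op.LOAD, 0, Op.HALT]

if __name__ == "__main__":
    print(run(program))  # prints ([20], [20, 0, 0, 0])
```

Compiling the script ahead of time means the client only has to decode compact opcodes at run time rather than parse source text.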

The download mechanism initiated by accessing a Web page hosting an XVR application is fairly typical: after the version check and an optional VM update, the application bytecode is downloaded, followed by the first batch of data files related to the application, such as 3D models, textures and sounds. Immediately after this initial download, all the necessary initializations are performed and the application starts executing its various loops.
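The start-up sequence just described can be sketched as an ordered list of steps. This is a hedged Python illustration, not XVR's loader: the function names, the `download` callback and the file names (`vm-<version>`, `application.bytecode`) are hypothetical, and versions are assumed to be dotted integers.

```python
from typing import Callable, List, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted version string such as "1.10.2" into (1, 10, 2)."""
    return tuple(int(part) for part in text.split("."))


def start_application(installed_vm: str, required_vm: str,
                      download: Callable[[str], bytes],
                      initial_files: List[str]) -> List[str]:
    """Simulate the start-up sequence of a Web-hosted application.

    Returns a log of the actions taken, in order.
    """
    log = []
    # 1. Version check; update the VM only if the installed one is older.
    #    Numeric tuples compare correctly ("1.10" is newer than "1.9"),
    #    whereas plain string comparison would get this wrong.
    if parse_version(installed_vm) < parse_version(required_vm):
        download(f"vm-{required_vm}")
        log.append(f"updated VM to {required_vm}")
    # 2. Fetch the compiled application bytecode (hypothetical file name).
    download("application.bytecode")
    log.append("downloaded bytecode")
    # 3. Fetch the first batch of data files: models, textures, sounds, ...
    for name in initial_files:
        download(name)
        log.append(f"downloaded {name}")
    # 4. Run the initialisations, then enter the application loops.
    log.append("initialised")
    log.append("running")
    return log
```

Further data files could be fetched later, after the application is running, so only the first batch delays start-up.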

Enabling Haptics on the Web

Haptic interfaces have existed for more than a decade, but only recently has a substantial decrease in price allowed these devices to spread widely, and today advances in hardware and software technologies have opened new and exciting opportunities for the creation of haptic-enabled multimodal systems.

By default, XVR does not support the control of haptic devices; this capability is added by an optional plug-in named HapticWeb (Ruffaldi 2006).

In the context of XVR, HapticWeb provides the ability to program and control a variety of force-feedback devices, ranging from commercial devices such as the SensAble PHANToM (Massie 1994) to research-level devices such as the GRAB interface (Avizzano 2003).

Support for multiple haptic devices is in fact one of the fundamental requirements for the adoption of a haptic application framework. HapticWeb supports a variety of haptic devices and identifies each of them by a unique ‘device URL’, which specifies the type of device, its identifier when several are present, and optional parameters expressed in the query part of the URL. Finally, if no haptic device is attached, a virtual interaction device can be simulated using a common mouse.
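The paper does not give the exact device URL syntax. The Python sketch below assumes a hypothetical layout `type://id?key=value`, in which the scheme names the device type, the host part its index and the query its options, and shows how such URLs could be parsed with the standard `urllib.parse` module and how the mouse fallback could be chosen. The names `phantom`, `grab`, `mouse`, `rate` and `mode`, as well as `DeviceSpec` and `select_device`, are illustrative only and are not HapticWeb identifiers.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Set
from urllib.parse import parse_qsl, urlsplit


@dataclass
class DeviceSpec:
    device_type: str                  # which kind of device
    device_id: int = 0                # which unit, when several are attached
    params: Dict[str, str] = field(default_factory=dict)  # optional settings


def parse_device_url(url: str) -> DeviceSpec:
    """Split a device URL of the (hypothetical) form type://id?key=value&..."""
    parts = urlsplit(url)
    if not parts.scheme:
        raise ValueError(f"no device type in {url!r}")
    device_id = int(parts.netloc) if parts.netloc else 0
    return DeviceSpec(parts.scheme, device_id, dict(parse_qsl(parts.query)))


def select_device(urls: Iterable[str], attached_types: Set[str]) -> DeviceSpec:
    """Return the first requested device that is attached, else a mouse-simulated one."""
    for url in urls:
        spec = parse_device_url(url)
        if spec.device_type in attached_types:
            return spec
    return DeviceSpec("mouse")
```

For example, `parse_device_url("phantom://1?rate=1000")` yields a spec with type `phantom`, index 1 and `{"rate": "1000"}`. Encoding devices as URLs lets an application list its preferred devices as plain strings and degrade gracefully to the mouse.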

The force-feedback effects that HapticWeb offers to the developer fall into two groups: generic ‘force fields’ and ‘constraints’. The first group includes simple effects such as springs, virtual planes, friction, or a constant force associated with a certain bounding volume.
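To make the force-field effects concrete, here is a minimal Python sketch of how each could be computed from the position `p` and velocity `v` of the haptic point of contact. This is not HapticWeb code: the function names and stiffness values are hypothetical, positions are assumed to be in metres and forces in newtons, the plane is modelled as a penalty spring, and "friction" is approximated by simple viscous damping.

```python
from typing import Tuple

Vec = Tuple[float, float, float]
ZERO: Vec = (0.0, 0.0, 0.0)


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def spring(p: Vec, anchor: Vec, k: float) -> Vec:
    """Hooke's-law spring pulling the contact point p towards anchor."""
    return scale(sub(p, anchor), -k)


def virtual_plane(p: Vec, origin: Vec, normal: Vec, k: float) -> Vec:
    """Penalty force pushing p back out of a plane (unit normal towards free space)."""
    depth = dot(sub(p, origin), normal)  # negative once p has penetrated
    return scale(normal, -k * depth) if depth < 0 else ZERO


def viscous_friction(v: Vec, b: float) -> Vec:
    """Velocity-proportional damping, a simple stand-in for friction."""
    return scale(v, -b)


def constant_in_box(p: Vec, box_min: Vec, box_max: Vec, force: Vec) -> Vec:
    """Constant force applied only while p lies inside an axis-aligned box."""
    inside = all(lo <= c <= hi for c, lo, hi in zip(p, box_min, box_max))
    return force if inside else ZERO


def total_force(p: Vec, v: Vec) -> Vec:
    """Example scene: the individual effects simply add up (values illustrative)."""
    f = spring(p, (0.0, 0.0, 0.0), k=50.0)
    f = add(f, virtual_plane(p, (0.0, -0.05, 0.0), (0.0, 1.0, 0.0), k=800.0))
    f = add(f, viscous_friction(v, b=2.0))
    f = add(f, constant_in_box(p, (-0.1, -0.1, -0.1), (0.1, 0.1, 0.1), (0.0, 0.0, -1.0)))
    return f
```

Because each effect is a pure function of the device state, a servo loop can evaluate and sum them at every update.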

Constraints can be described using a set of points, lines and triangles that specify attraction geometry for the haptic point of contact.
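The paper does not describe how the attraction towards constraint geometry is computed. The Python sketch below assumes one plausible scheme: find the nearest constraint primitive, and if it lies within a capture radius, apply a spring force pulling the contact point onto it. Only points and line segments are handled; triangles would follow the same pattern with a closest-point-on-triangle routine. The function names, `k` and `capture_radius` are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]
ZERO: Vec = (0.0, 0.0, 0.0)


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def closest_on_segment(p: Vec, a: Vec, b: Vec) -> Vec:
    """Closest point to p on segment ab (line parameter clamped to [0, 1])."""
    ab = sub(b, a)
    denom = dot(ab, ab)
    if denom == 0.0:  # degenerate segment: a single point
        return a
    t = max(0.0, min(1.0, dot(sub(p, a), ab) / denom))
    return add(a, scale(ab, t))


def constraint_force(p: Vec, points: Sequence[Vec],
                     segments: Sequence[Tuple[Vec, Vec]],
                     k: float, capture_radius: float) -> Vec:
    """Spring force attracting p to the nearest constraint primitive."""
    candidates = list(points) + [closest_on_segment(p, a, b) for a, b in segments]
    if not candidates:
        return ZERO
    target = min(candidates, key=lambda q: math.dist(p, q))
    if math.dist(p, target) > capture_radius:
        return ZERO  # too far away: the constraint does not engage
    return scale(sub(target, p), k)
```

The capture radius keeps a constraint from tugging at the user's hand from across the workspace; inside it, the contact point feels "snapped" to the geometry.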

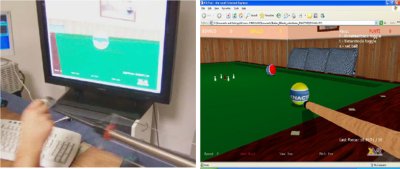

The use of HapticWeb is obviously not confined to the aims of the Pure Form project. Figure 7 shows Haptic Pool, a 3D Web application that lets the user play billiards using a haptic interface. Here the haptic device is used to impart force and direction to the ball, as well as to change the player’s point of view, with direct rendering of the forces.

Fig 7: Using HapticWeb to add force-feedback to a Web-based simulation of the pool game

Conclusions

This paper summarises our experience in using Virtual Reality, and notably haptic interaction, for the enjoyment of art and cultural heritage. As a tangible example, we presented the Museum of Pure Form, a virtual museum where users can interact with digital copies of real sculptures through both sight and touch. The MPF is currently hosted in permanent museum installations and also in a Web installation, accessible with a common browser by means of the XVR and HapticWeb technologies, which allow access to haptic-enabled 3D interactive content with a wide range of commercial and custom devices. We believe that haptics on the Web has great potential, as it enables new interaction paradigms that may be useful for the general audience and in particular for users with special needs.

Acknowledgments

The Museum of Pure Form has been realized in the context of an RTD project funded by the European Commission, under the 5th Framework Programme (IST 2000-29580), involving a consortium composed of PERCRO Scuola Superiore Sant'Anna (Italy), University College of London (UK), Uppsala University (Sweden), 3DScanners (UK), Pont-Tech (Italy), CGAC (Spain) and Opera Primaziale Pisana (Italy).

References

Avizzano, C., T. Gutierrez, S. Casado, B. Gallagher, M. Magennis, J. Wood, K. Gladstone, H. Graupp, J.A. Munoz, E.F.C. Arias, F. Slevin (2003). “GRAB: Computer graphics access for blind people through a haptic and audio virtual environment”. Proceedings of Eurohaptics, 2003.

Bergamasco, M. (1999). “Le Musee del Formes Pures”. 8th IEEE International Workshop on Robot and Human Interaction RO-MAN, 1999.

Carrozzino, M., F. Tecchia, S. Bacinelli, M. Bergamasco (2005). “Lowering the Development Time of Multimodal Interactive Application: The Real-life Experience of the XVR project”. Proceedings of ACM SIGCHI International Conference on Advances in Computer Entertainment Technology ACE 2005.

Chen, Y. (2006). “Olfactory Display: Development and Application in Virtual Reality Therapy”. 16th International Conference on Artificial Reality and Telexistence - Workshops (ICAT'06), pp. 580-584.

Debevec, P. (1998). “Rendering Synthetic Objects into Real Scenes: Bridging Traditional and Image-based Graphics with Global Illumination and High Dynamic Range Photography”. Proc. SIGGRAPH 98, pp. 189-198.

Desbrun, M., M. Meyer, P. Alliez (2002). “Intrinsic Parameterizations of Surface Meshes”. Eurographics 2002 Conference Proceedings.

Frisoli, A., F. Barbagli, S.L. Wu, E. Ruffaldi, M. Bergamasco and J.K. Salisbury (2004). “Evaluation of multipoint contact interfaces in haptic perception of shapes”. Symposium of Multipoint Interaction IEEE ICRA 2004.

Frisoli, A., G. Jansson, M. Bergamasco, C. Loscos (2005). “Evaluation of the Pure-Form Haptic Displays Used for Exploration of Works of Art at Museums”. WorldHaptics Conference, Pisa, Italy.

Loscos, C., F. Tecchia, A. Frisoli, M. Carrozzino, H. Ritter Widenfeld, D. Swapp, and M. Bergamasco (2004). “The Museum of Pure Form: touching real statues in an immersive virtual museum”. Proceedings of VAST 2004, The Eurographics Association. The 5th International Symposium on Virtual Reality, Archeology and Culture.

Massie, T.H., and J.K. Salisbury (1994). “The PHANToM Haptic Interface: A Device for Probing Virtual Objects”. Annual Symposium on Haptic Interfaces for Virtual Reality, 1994.

Ruffaldi, E., A. Frisoli, C. Gottlieb, F. Tecchia and M. Bergamasco (2006). “A Haptic toolkit for the development of immersive and Web enabled games”. ACM Symposium on Virtual Reality Software and Technology (VRST), 2006.

Cite as:

Tecchia, F., et al., Multimodal Interaction For The Web, in J. Trant and D. Bearman (eds.). Museums and the Web 2007: Proceedings, Toronto: Archives & Museum Informatics, published March 1, 2007. Consulted http://www.archimuse.com/mw2007/papers/tecchia/tecchia.html

Editorial Note