Introduction

The Jewish Women’s Archive (JWA), founded in November 1995, grew out of the recognition that neither the historical record preserved in America’s Jewish archives nor that preserved in its women’s archives accurately or adequately represented the complexity of Jewish women’s lives and experiences over the three centuries in which they had been making history in the United States (JWA, 2006).

The Archive’s mission is to “uncover, chronicle, and transmit the rich history of American Jewish women.” That includes a commitment to documenting Jewish women from all walks of life, and to reaching out to the general public, as well as to scholars.

In the 13 years since its founding, the Jewish Women's Archive has conducted interviews with, and collected materials about, hundreds of American Jewish women. These oral histories represent a sustained effort to begin filling in gaps in our understanding by restoring women's voices and experiences to the historical record. They are one small part of a larger effort, undertaken by academic and community historians alike, to ensure that women are visible in the pages of history books, archival collections, and communal narratives.

As an on-line-only archive, we initially viewed these oral histories as raw materials from which clips would be presented on-line, or from which on-line exhibit texts would be distilled. As a very small organization, it never seemed cost-reasonable to expend precious resources worrying about the initial interviews, and the likely audience for the interviews, beyond podcast clips, seemed quite limited.

It is still not clear that there is a large audience for the original interviews, transcribed or not. What is clear is that some of the interviews need active preservation work. Cassettes die. MiniDiscs become unreadable.

We also feel that better tools for engaging with these interviews are near: tools for searching and accessing audio and video are on the horizon. If these materials are on the Web, then it is possible that the oral histories which we have so painstakingly gathered will find their audience. In the meantime, we needed to ensure that the recordings remained accessible and usable for the long term.

Preservation 101

There are many ways to physically preserve audio and video. The simplest, and the one that prevents further degradation to the media, is to digitize the recordings, and then to ensure that those digitizations are preserved. In addition to the digitization, museums must also ensure that the maximum useful metadata is captured and preserved: When/where was a recording made? On what medium? Who were the interviewer and interviewee? What subjects were discussed? What locations? What time periods? Who owns the recording? What rights and permissions have been secured to use the recording in new ways? Once the recording is digitized, the process - all preservation activity - needs to be documented. The digitized files are each assigned a unique signature (commonly, a “checksum”) which will be generated anew periodically and compared to the original to confirm that the recording has neither been altered, nor suffered “bit rot.” This data has to be attached to the recording and needs to be preserved along with it.
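The periodic checksum comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the tooling we actually used: the function names `file_checksum` and `verify_fixity` are hypothetical, and SHA-256 is assumed as the checksum algorithm (the text does not name one).

```python
import hashlib


def file_checksum(path, algorithm="sha256", chunk_size=1024 * 1024):
    """Return the hex digest of a file, read in chunks so large recordings fit in memory."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_fixity(path, recorded_checksum, algorithm="sha256"):
    """Recompute the checksum and compare it to the value stored at digitization time."""
    return file_checksum(path, algorithm) == recorded_checksum
```

In use, the value returned by `file_checksum` would be stored with the recording's metadata at digitization time, and `verify_fixity` would be run on a schedule; a `False` result signals alteration or bit rot and the need to restore from another copy.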

By the time preservation activities began, the Jewish Women’s Archive had accumulated 6TB of oral histories. In the 2007-2008 year we downloaded the entire collection of cassette tapes, MiniDiscs, VHS video and miniDV tapes to an internal RAID server and began capturing various types of metadata in a series of spreadsheets. Long-term, our goal was to move all of this data to a digital repository, and to provide variegated access to those assets (different interfaces depending on role, differentiating exhibits, etc.). In the meantime, we now had one set of digitizations, and their accompanying spreadsheets, on a relatively robust single RAID server.

As anyone who has been responsible for corporate backup knows, it is vital that all assets be backed up offsite, and in the best case, replicated to an entirely different geographic area. This helps ensure their recovery not just from bad disks, but also from a local fire, theft, or natural disaster. We had talked for some time about partnering with similar organizations in other parts of the country or the world. Together, we could back up each other’s data over the Internet (or simply by shipping drives back and forth). The problem, of course, is finding other institutions with IT departments sufficiently far-sighted and robust that they could support such an effort with us, and who agreed with us that this was a reasonable long-term preservation plan, and could sell the extra storage cost to their boards.

We considered other backup options. Tape backup can be used to get the data offsite easily. On the other hand, tape requires frequent testing (drives go bad and begin backing up bogus data, or cease to read older data). In addition, local tape collections don’t provide the geographic balance needed for curators to sleep easily at night. As data security people are fond of pointing out, one does not begin to feel secure about data until it is replicated in at least three geographically disparate regions such that the likelihood of catastrophic failure and inability to recover drops close to zero. To back up our archive to tape, for such quantities of data, would be prohibitively expensive and tedious, and we would still have all the disadvantages of tape to deal with. We concluded that tape is the wrong technology for preserving largely static data, and in today’s world, tape technology changes so quickly that it cannot be considered a comfortable long-term solution.

Many organizations use optical media instead of tape: CD-ROMs and DVDs. In the best circumstances, these devices can easily last 20-30 years (which may turn out to be longer than we have devices that can read the disks). Unfortunately, as we polled archives using optical media we discovered that, regardless of brand, quality, batch, or other predictor, some optical media would die, unpredictably, starting from the moment we tried to burn the backup (or before). This meant that those institutions using optical media had to rely on both LOCKSS (Lots Of Copies Keeps Stuff Safe) and frequent checks of each disk. We have no budget to check all of our off-site optical media every six months or so. This would be even more resource-intensive and nerve-wracking than using tape.

Enter “The Cloud”

The only current reasonably robust method of preservation and backup seems to be to have those geographically separated RAID servers or equivalent. Faced with this challenge, we considered a new alternative based on using Amazon Web Services (AWS). The service had been recommended to us over two years ago. Early users seemed happy. The service seemed reliable. I found myself running the usual small archive evaluation: we have no dedicated IT staff, no in-house expertise. Given the choice, I do not want to put my IT money into developing such local expertise - that is money that could be used directly on gathering Oral histories and processing them - things that nobody else can do for us. In such a situation, the question isn’t whether I feel that our data on a foreign system is totally secure, but whether it is more likely to be secure (both in the sense of being inaccessible to crackers, and in the sense of being there, unchanged, when we want it) on AWS or in our shop. Who is more likely to lose our data? The folks at AWS, or we ourselves?

The economics were especially interesting. In year one, we purchased our RAID server and installed a T1 line. As we began moving the data to AWS, our AWS charges grew slowly (the service meters both the bandwidth required to add data, and monthly storage charges). By the time most of the material is on AWS, we will be dealing with another year’s budget and the RAID server will have been paid for in the previous budget.

By loading our data slowly, we get to also take advantage of the fact that ISPs tend to want a minimum 1-year contract for services. By that math, the minimum speed (T1, in our case) that gets the job done in a little less than a year is perfect. There remains a risk that catastrophe will strike before the backup is done, but taking a year to add an offsite backup affordably is significantly more secure than not backing up offsite because it is unaffordable. As noted, we also take advantage of the likelihood that Amazon will do a much better, consistent-over-the-long-term job of ensuring that our data are secure than we are likely to do locally. They have full-time engineers to maintain their system and an SLA for uptime. We have an outsourced IT guy who comes in as needed, and who monitors systems from his office. Note that at this time, neither AWS nor we here at the office have a guarantee against data loss. All we have is the likelihood that two disparate, geographically separated systems won’t go bad in the same way at the same time. This is significantly better than one RAID array or closet of optical media in one location. [A joint project by Fedora Commons and the DSpace Foundation, DuraSpace, is currently looking at using the cloud to provide geographically-distanced replication and an SLA that covers data loss (Morris 2008).]
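The "a little less than a year" estimate can be checked with back-of-the-envelope arithmetic. The sketch below rests on assumptions that are not in the text above: that the full 6TB collection is sent over the T1, that 6TB means 6 x 10^12 bytes, and that the line runs continuously at its nominal 1.544 Mbit/s rate with no protocol overhead. Real throughput would be somewhat lower.

```python
T1_BITS_PER_SECOND = 1_544_000   # nominal T1 line rate (assumed fully available)
COLLECTION_BYTES = 6 * 10**12    # 6TB, taken as decimal terabytes (assumption)
SECONDS_PER_DAY = 86_400


def transfer_days(total_bytes, bits_per_second, utilization=1.0):
    """Days needed to move total_bytes over a link at the given utilization (0-1)."""
    seconds = (total_bytes * 8) / (bits_per_second * utilization)
    return seconds / SECONDS_PER_DAY


days = transfer_days(COLLECTION_BYTES, T1_BITS_PER_SECOND)
print(f"Estimated upload time: {days:.0f} days")
```

Under these assumptions the upload takes roughly 360 days, which is consistent with matching the transfer to a one-year ISP contract. Lowering `utilization` shows how quickly the schedule slips if the line is shared with everyday office traffic.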

Content Management, Digital Asset Management, and Digital Preservation

So far, we have talked about the crudest form of digital preservation - getting data off of old analog devices into digital form, and then making physical backups. In passing, I have also noted that there are several types of metadata - information about the information we are preserving - that we try to capture.

The software to help manage these assets is commonly called a Digital Asset Management System, or DAMS. Our starter DAMS consisted of lots of paper, Excel spreadsheets, and a RAID server. It worked quite well up to a point, always assuming that our Digital Archivist never left and took her memory with her. It didn’t scale particularly well. She spent a lot of her time tracking the spreadsheets, backing them up, double-checking one spreadsheet relative to the next, fixing typos, and finding mis-filed files.

We tested, and continue to test, open source DAMS solutions such as Archivists Toolkit and OpenCollection (some of whose developers are now working on CollectionSpace). I should further note that Digital Asset Management over time is Digital Preservation, and our concerns had more to do with rights management and digital preservation than many of the usual DAMS concerns: we don’t have digital content that we own or license, for instance. We have no reproduction issues. We do have assets that are private - accessible only to scholars. We also have Web site assets that were licensed for specific exhibit uses and whose usage rights we cannot extend to others. Not only do privacy and rights have to be tracked, but also any system we implement has to support enforcing those rights.

We did, at this point, have a new Content Management System (CMS), Drupal. But it is important to note the obvious: Content Management Systems are definitively not Digital Asset Management systems. For starters, nobody uploads master TIFF images to a CMS - they would never be displayed on the Web, and would simply chew up disk space to no purpose. It is actually common for DAMS to include CMS modules where all of the Web site content can be maintained for editing by editorial, marketing, and professional staff. Content Management is exactly what a CMS is good for.

Drupal

We had a somewhat more complex situation than is addressed by the average CMS. Starting about three years ago, we began to extract our Web assets from a decade’s worth of custom, proprietary systems and static HTML. It was clear to us that we did not want, again, to have our primary data siloed and inaccessible. Nor, once we had finished the migration, did we ever again want to have to maintain a panoply of separate databases ranging from obsolete Oracle 9/JSP pages to custom-built MySQL/PHP engines so obscure that we lack the time and expertise to consider bug fixes, as we move from ISP to ISP, or upgrade from server to server. We wanted to focus on the information, itself, and maintenance of the data, not the data infrastructure. We do not have the resources to maintain silos - that is money stolen from gathering new oral histories and creating new educational materials, exhibits, and features.

From our perspective, we are also not in the business of gathering information that can sit waiting to be found - in this regard, we are entirely an untraditional archive (except insofar as many traditional archives are changing in similar directions). We want these stories used in teaching, we want people engaged with this information, and we want it re-used in telling new stories. Further, this is an age when people can contribute to the archive directly. The same tools that enable engagement and re-use need to be enabled to receive archival materials from our public, so not only does our CMS need to manage editorial content, but it also needs to come with some tools that do generally appear in a DAMS, starting with the ability to accept content on-line.

This requirement refers not just to the usual Web 2.0 comment and tag features, or to social networking tools. We are already into our second “on-line collecting” project where people can contribute items to the archive themselves. As I discuss below, this provided a first incentive to customize the CMS with some of the same features expected in a DAMS - an ability to parse uploads and to extract basic technical metadata (filetype, checksum, etc.) plus the ability to store descriptive metadata (contributed by whom, described how, etc.).
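To make the distinction between technical and descriptive metadata concrete, here is a minimal Python sketch of what parsing an upload might produce. It is an illustration only: our customization lives in Drupal (PHP), and the `UploadRecord` fields and the `describe_upload` function are hypothetical names chosen for this example.

```python
import hashlib
import mimetypes
from dataclasses import dataclass
from pathlib import Path


@dataclass
class UploadRecord:
    # Technical metadata, derived from the file itself
    filename: str
    mime_type: str
    size_bytes: int
    sha256: str
    # Descriptive metadata, supplied by the contributor
    contributor: str
    description: str


def describe_upload(path, contributor, description):
    """Build a record for a contributed file (read whole; fine for small uploads)."""
    path = Path(path)
    data = path.read_bytes()
    mime_type, _encoding = mimetypes.guess_type(path.name)
    return UploadRecord(
        filename=path.name,
        mime_type=mime_type or "application/octet-stream",
        size_bytes=len(data),
        sha256=hashlib.sha256(data).hexdigest(),
        contributor=contributor,
        description=description,
    )
```

Note that `mimetypes.guess_type` relies on the file extension only; a production system would more likely inspect the file contents, as dedicated metadata extraction tools do.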

When we chose our CMS, we had a preference for open source software. In theory, and occasionally in practice, we are happy to work with proprietary commercial applications. In our office, people use Microsoft Office, rather than OpenOffice. They hate Google Apps. Since we can hire almost anybody and know that they understand MS Office well enough to work here, and since existing staff like it, we’re happy to go proprietary in this one instance. We also use commercial bug-tracking software, FogBugz, because that is the product that everyone liked enough to actually use. Having bug-tracking software that people actually use matters a lot. But, whenever all things are equal, and especially in the case of Web tools, our experience weights choices heavily in the direction of well-known open source applications.

There are several popular CMSs. When we selected Drupal, we felt that it was the most flexible among those that were sufficiently popular and sufficiently well-developed to be likely to continue to develop over time. We also wanted a CMS that relied primarily on MySQL and PHP, as it is relatively easy to find reasonably good and relatively inexpensive programmers who know both tools well. (Since these are “gateway” skills - the first language/database that many autodidactic programmers pick up - there is also a plethora of people with these skillsets on their resumes whom one would not wish to hire.)

Drupal is not the only good CMS, but it has been an excellent choice for us. We continue to be quite happy with the developer community, the tools, and the direction (Semantic Web tools coming in the next major release) the code is moving.

When we decided to work with Fedora (see below), it was also in the back of our minds that the University of Prince Edward Island (UPEI) had already developed a module for Drupal to enable it to talk to Fedora (Islandora 2008). As it turned out, UPEI is working with versions of Drupal and Fedora slightly out of sync with what we are doing. This is not bad at this stage of development. For the CMS side of DAM and Digital Preservation, that just means that ultimately objects will physically be stored and maintained in the Fedora repository, rather than in Drupal’s own environment. Drupal’s digital asset tools are no more advanced than one might expect in a CMS. We will not be sorry to make that transition when it comes.

In the meantime, we have two important goals, and are also developing an important “connector”.

First, we must import legacy Web code into Drupal. This is a relatively standard headache involving generations of dissimilar static HTML and old Web applications using a number of databases and templating codes. It takes time, and each month more of that content is served from Drupal.

Second, we are developing tools in Drupal that “think” the Fedora way. In particular, we have modified some Drupal modules so that editors and content maintainers can treat all media objects - video, audio, images, so far - as “digital stuff” without having to learn the HTML code, or to use different modules and procedures to handle each type of object. In Fedora terminology, we have created standard “disseminators” that can be activated automatically depending on the type of object and the context. This means, for instance, that an image will be displayed at a standard small size when a “thumbnail” is required; at a different size when part of a story; and even at a third, large size, when someone then clicks on the image, in the story.
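The idea of a disseminator selected by object type and context can be sketched as a simple dispatch table. The Python below is a hypothetical illustration, not our Drupal module code and not Fedora's API: the pixel widths, function names, and generated markup are all assumptions made for the example.

```python
from html import escape

# Hypothetical display widths, in pixels, for each context an image can appear in
IMAGE_WIDTHS = {"thumbnail": 100, "story": 400, "full": 1024}


def render_image(url, context):
    """Pick the image size from the context; unknown contexts fall back to story size."""
    width = IMAGE_WIDTHS.get(context, IMAGE_WIDTHS["story"])
    return f'<img src="{escape(url, quote=True)}" width="{width}">'


def render_audio(url, context):
    return f'<audio controls src="{escape(url, quote=True)}"></audio>'


def render_video(url, context):
    return f'<video controls src="{escape(url, quote=True)}"></video>'


# One entry per media type; editors never choose a renderer by hand
DISSEMINATORS = {"image": render_image, "audio": render_audio, "video": render_video}


def disseminate(media_type, url, context="story"):
    """Return display markup for a media object based on its type and context."""
    try:
        renderer = DISSEMINATORS[media_type]
    except KeyError:
        raise ValueError(f"no disseminator for media type {media_type!r}") from None
    return renderer(url, context)
```

An editor's view of this is a single call such as `disseminate("image", "photo.jpg", "thumbnail")`; supporting a new kind of object means adding one entry to the table rather than teaching staff a new procedure.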

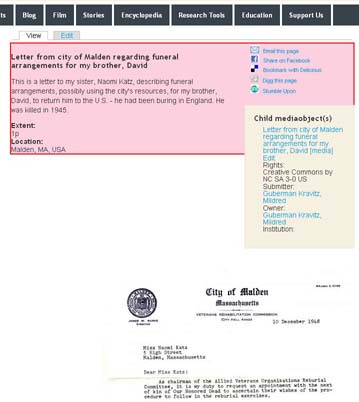

Fig 2: Admin Entryway to JWA’s WWII On-line Collecting Project, based in Drupal

The project uses the same Dublin Core-based metadata that we use in Fedora.

One of the features that makes Drupal so amenable to modeling different types of data is something called “Content Construction Kit” (CCK). This module provides the tools so that instead of having the fields relevant to a particular type of page defined for you, you define your content types according to the needs of the data. Less specialized Web site owners might create specialized content types good for loading restaurant reviews or contact information. In our case, we used CCK to model the same fields for digital objects as we used in Fedora. As is the case with our Fedora installation, as we become more experienced and as our metadata become more complex, it is relatively trivial to extend the CCK definition.
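As an illustration of what a shared, Dublin Core-based field set looks like once serialized, the Python sketch below turns a dictionary of fields into a Dublin Core XML record. The element names come from the Dublin Core element set; the `oai_dc` wrapper element is an assumption about the serialization, and the sample values are invented for the example rather than taken from our collection.

```python
import xml.etree.ElementTree as ET

DC_NS = "http://purl.org/dc/elements/1.1/"
OAI_DC_NS = "http://www.openarchives.org/OAI/2.0/oai_dc/"

ET.register_namespace("dc", DC_NS)
ET.register_namespace("oai_dc", OAI_DC_NS)


def dublin_core_xml(fields):
    """Serialize {element name: [values]} as a Dublin Core record (repeatable fields allowed)."""
    root = ET.Element(f"{{{OAI_DC_NS}}}dc")
    for name, values in fields.items():
        for value in values:
            element = ET.SubElement(root, f"{{{DC_NS}}}{name}")
            element.text = value
    return ET.tostring(root, encoding="unicode")


# Invented sample values, for illustration only
record = dublin_core_xml({
    "title": ["Oral history interview (sample)"],
    "creator": ["Sample Interviewer"],
    "type": ["Sound"],
    "rights": ["Access restricted to scholars"],
})
print(record)
```

Because every Dublin Core element is optional and repeatable, extending the model later is a matter of adding keys to the dictionary, which mirrors how a CCK content type is extended with new fields.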

Choosing Fedora, our Repository Framework and DAMS

We spent over a year exploring potential repository frameworks. Being situated just across the river from Tufts University (an original Fedora site) and MIT (home to DSpace) gave us a wealth of local expertise to draw on. We liked the instant usability of DSpace, but kept running into problems unique to JWA. Most of our assets are not simple objects such as images, text, or even simple audio and video. We have those assets, but they usually need to be archived and maintained in more complex fashion. An “oral history,” for instance, at JWA consists of several related elements. At a minimum, these include:

- Original audio (usually, a WAV wrapper at the highest resolution available from the source)

- Presentation audio (mp3)

- Original transcript (currently, Word docs)

- Presentation transcript (PDF)

- Metadata (various xml files, including technical metadata provided by the New Zealand Metadata Extraction Tool, NLNZ 2003)

Fig 4: Contents of a typical oral history

The issue here isn’t how to archive wav, doc, mp3, pdf, and xml files; rather, in this case, sanity dictates that we treat the group of files as one working object. Starting two years ago, we had surveyed the field of both open source and commercial systems. Last year we narrowed the choice down to DSpace and Fedora. Ultimately, the complexity of our content models and the perceived need for a rich set of relationships (RDF “triples”) led us to the flexibility and complexity of Fedora.
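Treating the group of files as one working object can be sketched as a small data structure. The Python below is a hypothetical model for illustration, not Fedora's content model syntax: the role names, the `hasPart` predicate, the identifier, and the file names are all assumptions made for the example.

```python
from dataclasses import dataclass, field

# The minimum elements of an oral history, as listed above
REQUIRED_ROLES = (
    "original_audio",
    "presentation_audio",
    "original_transcript",
    "presentation_transcript",
    "metadata",
)


@dataclass
class Datastream:
    role: str
    filename: str
    mime_type: str


@dataclass
class OralHistory:
    identifier: str
    datastreams: list = field(default_factory=list)

    def add(self, role, filename, mime_type):
        self.datastreams.append(Datastream(role, filename, mime_type))

    def missing_roles(self):
        """Roles still needed before the object is complete."""
        present = {stream.role for stream in self.datastreams}
        return [role for role in REQUIRED_ROLES if role not in present]

    def triples(self):
        """Subject-predicate-object statements tying each file to the object."""
        return [(self.identifier, "hasPart", s.filename) for s in self.datastreams]


interview = OralHistory("example:oh-0001")  # hypothetical identifier
interview.add("original_audio", "oh-0001.wav", "audio/x-wav")
interview.add("presentation_audio", "oh-0001.mp3", "audio/mpeg")
interview.add("original_transcript", "oh-0001.doc", "application/msword")
print(interview.missing_roles())
```

The point of the model is that completeness and relationships are properties of the oral history as a whole, not of any single file; in this example the object still lacks its presentation transcript and metadata.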

Intriguingly, Fedora fit our plans in another way. I mentioned earlier that it is increasingly true that if one starts with a repository, adding a CMS to manage Web content can be simple. After all, one of the main things a digital repository does is provide the ability to manage digital assets over time. In the case of Fedora (and to a lesser extent, DSpace), there is no useful built-in CMS.

We had already started migrating our Web assets into Drupal. When we heard that the University of Prince Edward Island was already at work on a Drupal/Fedora interface, we felt confident that this was a match made in open source heaven. To be sure, we surveyed the field and considered tools such as Fez and Muradora. At their current stages of development, they were not tools that we wanted to live with, and they are not tools that support traditional CMS roles - the ability to generate newsfeeds; use fancy templates to entice Web visitors to explore further; and to customize the look and feel of specific exhibits.

Setting the Stage: Backing up to ‘the Cloud’ in Agile Steps

So, we knew that we wanted to set up a Fedora Commons repository if we could find a few hundred thousand dollars in grant money or contributions. We were using Drupal as our CMS, and it was clear that ultimately we wanted all or most of the front end services to be handled by Drupal. Our Drupal development environment was a relatively traditional one with local “sandboxes” on people’s desktop machines, plus virtualized dev, qa, and production installations maintained on a couple of co-located servers at a traditional ISP. As recently as three or four years ago, we would have been paying rent on several physical servers, but with modern virtualization software, there was no reason not to keep adding “virtual” servers to the same box until there were load issues. The same development server could happily host “newsearch.dev.jwa.org,” “drupal.dev.jwa.org,” along with the mailing lists, bug-tracking software, database servers, etc. It was a very short leap from our virtualized servers at a traditional ISP to Amazon Web Services.

In the meantime, one last technology appeared, “Agile Development.” The problems we were trying to solve were common: with one on-staff developer, it was taking forever to go from “concept” to usable tools, and by the time we got there, priorities had changed. Agile development methods focus on short (< 6 weeks, typically) development cycles, with the intent that tested code with usable features is put into people’s hands quickly. Then, the next cycle of development is informed not only by what seemed important at the beginning of the project, but also by actual use of those initial tools and the shifts in priority and refinement that hands-on use provides. Use of these short cycles also meant that our developer could add functionality in several areas during the course of the year, instead of in only one or two key areas, making it easier for our Web site to develop based on how it was being used, or in near-real-time based on new ideas, developments, or examples from outside our site. Not only did we get faster development (albeit, in smaller chunks), but the ability to respond quickly helped get staff more involved in the growth and change of the Web site.

Staff took more ownership when they could see the changes they had requested take place almost in real-time. The methodology put our Web developer through some significant changes. Part of what makes him a great developer is the ability to take “as long as it takes” to solve problems well. Now we were telling him that every 4-6 weeks the development cycle would close down. He was free to tell us what he couldn’t get done in that time, but he had to deliver usable code at the end of each cycle. There was initial resistance, but I think that he is also enjoying the cycles now. He gets more variety, and he knows that he doesn’t have to solve everything now. He has to solve enough so that staff can get their hands on a new iteration. There will always be more iterations - new chances to rethink, refactor, improve, and move on.

A lightweight Fedora installation ‘in the cloud’

The pieces were in place. We were gaining more experience with Drupal by the day, and it gave every indication (and continues to give such indication as I write this paper) that it will make a delightful, flexible front-end to Fedora. We were starting to back up our data to AWS. We still needed Digital Asset Management in the here and now.

We began mooting the idea of a “simplest Fedora installation” to friends at The MediaShelf with whom we had consulted earlier about setting up the large Fedora installation. They were early proponents of Agile development to us, so it made sense to rethink what we needed.

Suppose we stripped out almost everything and focused on an admin-only interface? Suppose we focused on one content model: our oral histories that constituted the archive’s main digital asset? Could this be done for under $50k? Would it be enough so that the preservation work that we had begun last year by digitizing all of those tapes and other media could continue with tools more sophisticated than our growing panoply of spreadsheets? If this were possible, that would also mean that these assets were, in fact, on-line and accessible. If we had to, that would mean that we could now offer scholars access to interviews via the Web, even without the fancy DAMS of our dreams.

We quickly modeled a three-stage Agile development project and began work. We cut about $15k out of the budget by using AWS as the server host, obviating the need to purchase large servers and an extra RAID array upfront. We didn’t even have to budget colo fees for new servers.

We had met one of the principals of The MediaShelf in an earlier project, so we elected to save even more money by forgoing face-to-face meetings and using Skype instead. (Our preference, by the way, was not to use video in Skype meetings. While it is good to see the face of someone with whom you are talking, it is also convenient to be able to roam the room - or the office - without a camera noticing when one is or isn’t right in front of the computer.) Skype turned out to have several advantages over traditional conference calls, including the ability to conduct back-channel “chat” to pass URLs and carry on side-conversations, as necessary. It also comes with a nicely secure tool to pass things like SSH keys.

Development on AWS is simple, but it is also different from traditional development. Things fit together differently. In a traditional server environment, users purchase (or rent, via colocation) a server with a certain amount of speed, RAM, and hard disk space. This can be very frustrating because you are paying up front for speed and space that you estimate that you might need before it is time to migrate to the next generation server. If you guess wrong, it is expensive, and costs a lot of time to migrate. AWS obviates that. You start small, with what you need to develop, and you can add new capabilities - even new servers - in minutes, not days.

With AWS, the first difference is that you have absolutely no idea where your data are - there is no longer a specified physical server tied to your Web services. There is no cage at an ISP somewhere to visit. We used three principal services:

- S3: Simple Storage Service - this is the AWS equivalent of a network file server. Our original backup plan was to use a simple utility to pile everything here, and retrieve if/when necessary.

- EC2: Elastic Compute Cloud - click a button and add extra servers and bandwidth as needed. This is as close to metered server power as we get right now. Fedora is running on EC2.

- EBS: Elastic Block Store - persistent disk volumes for EC2 servers. Used with snapshots, this is the equivalent of “ghost” for your server setup: copies of the same EBS setup can be used to drive any number of servers, making provisioning those servers very fast. Even in the traditional dev/production environment, it becomes very inexpensive to modify a copy of the core setup: try it, and if it doesn’t work, remove it and start over with a new copy.

Fig 5: Control panel for Amazon Web Services

Working with The MediaShelf was a pleasure. There were more bugs than expected and the project was not completed in the originally projected two-month span. Even Agile development can be imperfectly predictable. At one point we discovered that Fedora 3 (F3) choked on large-ish files. There was a digression while The MediaShelf folks fixed F3, checked it in to Fedora Commons, and then resumed work on our installation. To us, the ability to work in this fashion (immediately fix the bugs and check them in to the main code repository vs. wait for the next release of a commercial application, which might or might not address our issues) is also one of the reasons we treasure open source development. The resulting application (just now in the final bugfix phase) has the double advantage that it has a well-constructed user interface (UI) and works exactly as our Digital Archivist specified.

Fig 6: Entryway to the JWA Digital Repository

The overall process involves using a standard secure protocol (SFTP) to move files, batched, to AWS to the “watch folder”. For audio files, we zip everything into one large file which is automatically ingested. Once the message is received that the files are ready for inspection and any manual metadata tuning, the Archivist goes in and adds/edits basic metadata. Our metadata schema is based on Dublin Core. Over time it will get more complex. At this stage, it is quite critical to get digital objects under management, and especially to have information about rights, permissions, etc., at our fingertips. The interfaces to Fedora were created using Ruby on Rails, and the code generalized from our project is available from the MediaShelf via RubyForge. It is called “RubyFedora/ActiveFedora”: http://projects.mediashelf.us/projects/show/active-fedora .
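The batch-and-watch-folder pattern described above can be sketched with the Python standard library. This is a local illustration under stated assumptions, not the Ruby on Rails code that runs our repository: the SFTP transfer step is omitted, and the function names `build_batch` and `ingest_watch_folder` and the directory layout are hypothetical.

```python
import zipfile
from pathlib import Path


def build_batch(files, batch_path):
    """Zip the files for one object into a single batch, ready to be sent to the watch folder."""
    with zipfile.ZipFile(batch_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for file_path in files:
            archive.write(file_path, arcname=Path(file_path).name)
    return Path(batch_path)


def ingest_watch_folder(watch_dir, repository_dir):
    """Unpack every intact batch in the watch folder; return the folders awaiting inspection."""
    ingested = []
    for batch in sorted(Path(watch_dir).glob("*.zip")):
        target = Path(repository_dir) / batch.stem
        with zipfile.ZipFile(batch) as archive:
            if archive.testzip() is not None:
                continue  # damaged in transit: leave the batch in place for a re-send
            target.mkdir(parents=True, exist_ok=True)
            archive.extractall(target)
        batch.unlink()  # remove the batch only after a successful unpack
        ingested.append(target)
    return ingested
```

In a running system, `ingest_watch_folder` would be called on a timer or by a file-system watcher, and the returned list would drive the "ready for inspection" message to the Archivist.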

Fig 7: Editing metadata using “ActiveFedora.”

What We Learned and Next Steps

First, we learned that we were right. It is possible to build a useful, lightweight repository based on Fedora Commons code for relatively little money. Because we did it using open source tools, the next group will be able to do the same for even less - conceivably, for anyone with our ideas of a good UI and our single content model, a useful repository can be had just by downloading and installing RubyFedora/ActiveFedora.

AWS has been such a comfortable development environment that we are likely to move our regular Web site, legacy Oracle, JSP, static HTML, Drupal, and more to AWS. We estimate that we will cut our monthly expenses even as we continue to add new services. When we started, AWS supported only Linux, and had some limits on what database services could be supported. That is still enough for us, but it should be noted that AWS now supports a wider variety of services, supports Windows OS, and even boasts its own database services. For now, we intend to stick with MySQL, and hope to migrate the Oracle data in the next two years.

As one aspect of the project, we have a new search engine, the open source Lucene/SOLR package, installed. It will take another project, however, before this is usefully customized for our Web site. Just as Fedora doesn’t do much useful “out of the box,” configuring a search engine so that it provides useful results to site visitors is non-trivial.

Without Drupal connected to Fedora we have our simple, inexpensive Digital Asset Management (DAM) and Digital Preservation services, but we have not yet put those assets in the hands of our archive’s users: students, educators, and researchers. For that purpose we are building on work done by a second partner, the University of Prince Edward Island (UPEI), which has developed a module tying Drupal to Fedora. This spring they are updating the module to work with current Fedora and Drupal releases. If all goes well, we will undergo the necessary Drupal upgrade (to version 6) as well.

Finally, to tie everything together, we are building a tool using the brand new OAI-ORE standard which will help audiences find items in our collection (or other collections), and build the Web equivalent of annotated multi-media slide shows. These collections are saved and shared using ORE, a simple, newsfeed-like file, making it possible for us to offer flexibility and service unique on the Web.

References

Amazon Web Services. (http://aws.amazon.com). Retrieved January 31, 2009.

Drupal.org | Community Plumbing. (http://drupal.org). Retrieved January 31, 2009.

Fedora Commons. (http://www.fedora-commons.org/). Retrieved January 31, 2009.

Islandora 2008. “Islandora Fedora-Drupal module” Blog post: Sep 26, 2008, University of Prince Edward Island Robertson Library. (http://vre.upei.ca/dev/islandora). Retrieved January 31, 2009.

Morris, Carol Minton. “DSpace Foundation and Fedora Commons Receive Grant from the Mellon Foundation for DuraSpace”. HatCheck newsletter, Nov 11, 2008. (http://expertvoices.nsdl.org/hatcheck/2008/11/11/dspace-foundation-and-fedora-commons-receive-grant-from-the-mellon-foundation-for-duraspace/). Retrieved February 25, 2009.

NLNZ 2003. National Library of New Zealand. “Metadata Extraction Tool”. (http://www.natlib.govt.nz/services/get-advice/digital-libraries/metadata-extraction-tool), created 2003. Retrieved January 31, 2009.

RubyFedora/ActiveFedora (the Ruby and Fedora code developed for this project). (http://projects.mediashelf.us/projects/show/active-fedora/). Retrieved January 31, 2009.

Zumwalt, Matt. “Preview of ActiveFedora DSL”. Blog post: Oct 6, 2008. The Media Shelf. (http://yourmediashelf.com/blog/2008/10/06/preview-of-activefedora-dsl/). Retrieved January 31, 2009.