Archives & Museum Informatics

158 Lee Avenue

Toronto, Ontario

M4E 2P3 Canada

info @ archimuse.com

www.archimuse.com


published: April, 2002

Networked Multi-sensory Experiences: Beyond Browsers on the Web and in the Museum

Fabian Wagmister and Jeff Burke, HyperMedia Studio, School of Theater, Film and Television, University of California, Los Angeles, USA

Abstract

The defining characteristic of the digital era is the potential that it brings for “real-time” interconnection between anything that can be measured, expressed, or controlled digitally. The World Wide Web stems from one type of digital interconnection: well-defined standards linking a “browser” with remote machines presenting information to be browsed. Yet digital technology enables more than just new approaches to presentation, browsing, and searching. It can create dynamic connections between different physical spaces and across sensory boundaries, and provide experiential interfaces for interaction that move beyond the mouse, keyboard, and screen. It can relate the physical space of the museum to the virtual space of the Web for both individual and group experiences.

Using our past media-rich installation and performance work as a reference point, this paper will present a vision of digital technology for the museum as a dynamic connection-making tool that defines new genres and enables new experiences of existing works. The authors’ recent works include the interactive media-rich installations Time&Time Again… (with Lynn Hershman, commissioned for the Wilhelm Lehmbruck Museum, Germany), Invocation & Interference (premiered at the Festival International d’Arts Multimédia Urbains, France), Behind the Bars (premiered at the Central American Film and Video Festival, Nicaragua), and …two, three, many Guevaras (commissioned for the Fowler Museum of Cultural History, Los Angeles), as well as the recent UCLA performance collaborations Fahrenheit 451, Macbett, and The Iliad Project.

Keywords: interactive, digital technology, digital media, performance, aesthetics

Introduction

Beyond browsing—beyond the “point and click” of mice, keyboards, and tablets—digital technology gives us the capability to make connections between people’s actions, media, and physical and virtual spaces. These connections can surround and provide context for art or create it. They encourage engagement beyond basic navigation of traditionally hermetic “delivery” structures for video, audio, text, and other media. Here, we describe in detail a few media-rich interactive installations and performances developed at the HyperMedia Studio, a digital media research unit in the UCLA School of Theater, Film and Television. A set of “core technologies” that enable this work is then listed briefly. With both the creative work and technology as a backdrop, we propose components of a “digital aesthetic” that unify this work and provide a research focus for our own experimentation. Along the way, we discuss the possible extensions of this approach to museum exhibit design.

Selected Media Installation and Performance Work

...two, three, many Guevaras (1997)

by Fabian Wagmister

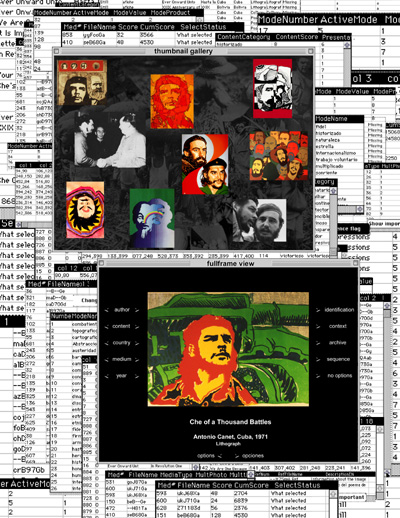

An exploratory database installation commissioned by the Fowler Museum of Cultural History, …two, three, many Guevaras undertakes the challenge of analyzing the message and relevance of Latin American revolutionary Ernesto “Che” Guevara through the artworks he inspired. This constellation includes paintings, engravings, murals, posters, sculptures, poems, songs, and every other imaginable form of artistic representation. These works, originating in over thirty-three countries, amount to thousands of constituent media units. Multimedia relational database technology and large touch-sensitive displays are used to present a media-rich exploratory experience guided by an aleatory interpretative engine.

The participants are able to navigate the many creative articulations about Che and explore the complex weave of interconnections among them. The aesthetic, conceptual, historical and contextual forces informing these art works are embedded into a complex interactive navigational structure through the implementation of an adaptive search process. Rather than using standard “hyperlinks” with a single destination, the piece presents a thumbnail selection of media elements drawn from the database at random, according to a probability distribution determined by a set of interpretative modes and relevance rankings. (See Figure 1.)

Fig. 1: Selected views from …two, three, many Guevaras.

These categories and weightings determine the probability that an image will appear as a thumbnail along with the one selected by the participant. This system is discussed in more detail in (Wagmister, 2000).

The participant’s choices affect the weightings of each media unit, so that navigation of the piece slowly blends the author’s original rankings with those generated by the participant’s connection-making choices. As a result, the media selections and combinations have been significantly different at the piece’s different showings; for example, in Los Angeles and Cuba. By involving the participants in a part of the creative process, the piece reflects back the viewing context in its own navigational structure.
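The selection scheme described above can be illustrated with a short sketch. It is not the installation's actual code: the function names, the linear blend, and the sample media units are assumptions made for illustration. It shows only the general idea of drawing thumbnails at random with probability proportional to a weight that mixes the author's ranking with a participant-derived one.

```python
import random

def blended_weight(author_rank, participant_rank, blend):
    """Mix the author's ranking with the participants' ranking.

    blend=0.0 uses only the author's ranking; blend=1.0 only the participants'.
    (A linear mix is an assumption; the paper does not give the formula.)
    """
    return (1.0 - blend) * author_rank + blend * participant_rank

def pick_thumbnails(weights, k, rng=random):
    """Draw up to k distinct media units, each with probability
    proportional to its weight among the units still in the pool."""
    pool = dict(weights)  # copy so the caller's weights are untouched
    chosen = []
    while pool and len(chosen) < k and sum(pool.values()) > 0:
        names = list(pool)
        pick = rng.choices(names, weights=[pool[n] for n in names], k=1)[0]
        chosen.append(pick)
        del pool[pick]
    return chosen

# Hypothetical media units: name -> (author rank, participant rank)
ranks = {"mural": (0.9, 0.2), "poster": (0.5, 0.5), "song": (0.1, 0.8)}
weights = {name: blended_weight(a, p, blend=0.25) for name, (a, p) in ranks.items()}
print(pick_thumbnails(weights, k=2))
```

Raising `blend` over time would let the participant-derived rankings gradually outweigh the author's, which is one way to read the slow blending described in the text.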

Time&Time Again… (1999)

by Lynn Hershman and Fabian Wagmister

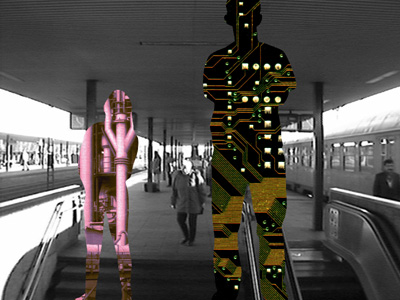

Time&Time Again… extends media navigation to a site-specific context with both Web- and body-based interfaces. A distributed interactive installation, the piece was commissioned by the Wilhelm Lehmbruck Museum in Duisburg, Germany, as part of its Connected Cities exhibit. It explores the complex relationships between our increasingly interlinked bodies and machines, and the resulting techno-cultural identity.

The installation places museum visitors and Internet viewers in a complex web of engineered interdependencies with each other and with the facilitating apparatus. At the museum, a large screen presents participants with live video images originating in train stations, coal mines, and steel mills. These locations are the nodes in a network of industrial connectivity in the Ruhr region of Germany, an infrastructure being challenged and superseded by the new network, as the industries that once defined the region become less and less cost-effective. As participants examine these projections, transfigured silhouettes of their own bodies are superimposed onto the external video signals, resulting in a composite image integrating the remote and museum elements. As each silhouette mirrors the movements of the corresponding participant, it does not reflect the details of the individual's body but instead functions as a window to a hidden scheme of technology. (See Figure 2.)

Fig. 2: Sample image from Time&Time Again… showing museum visitors’ silhouettes filled with images of technology and superimposed on remote video. This display is output to a large rear projection screen in the museum space.

By moving toward or away from the screen, participants control the perspective of technology presented within their bodies. As in …two, three, many Guevaras, a large database of media is used, though it has a fixed navigation scheme in which the probability of an image being chosen for a certain layer is uniformly distributed. At one end of the installation space, away from the screen, “macro” technologies such as aerial shots of transportation, communications, or industrial structures from the Ruhr region are selected; at the opposite end, closest to the screen, images show micro perspectives such as circuits, gears, and computer boards.
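As a rough sketch of this fixed navigation scheme, the mapping from position to image layer might look like the following. The layer names, the room depth parameter, and the equal-width distance bands are assumptions for illustration; the paper states only that position selects between macro and micro perspectives and that images within a layer are chosen with uniform probability.

```python
import random

def layer_for_distance(distance, room_depth, layers):
    """Map distance from the screen to one of the layers, which are
    ordered from closest ("micro") to farthest ("macro")."""
    fraction = min(1.0, max(0.0, distance / room_depth))
    index = min(len(layers) - 1, int(fraction * len(layers)))
    return layers[index]

def pick_image(distance, room_depth, images_by_layer, rng=random):
    """Choose an image for the participant's current position."""
    layers = list(images_by_layer)  # insertion order: closest layer first
    layer = layer_for_distance(distance, room_depth, layers)
    return rng.choice(images_by_layer[layer])  # uniform within the layer

# Hypothetical database contents, closest layer first
images = {
    "micro": ["circuit", "gears", "computer board"],
    "macro": ["aerial rail yard", "steel mill"],
}
print(pick_image(distance=1.0, room_depth=10.0, images_by_layer=images))
```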

A robotic, telematic doll is a synthetic witness and wired voyeur in the museum space. Simultaneously a human look-alike and a machine wannabe, its child-like form implies an alien perspective. From a corner of the installation space this “main character” of the piece observes participants’ interactions with the environment and speculates on the nature of the symbiosis between humans and technology. Cameras behind its eyes stream live to the Web where remote participants at once watch and become part of the feedback system of the piece. The doll facilitates Web and museum participants' awareness of the two interconnected spaces. In addition to remote viewing, visitors to the piece’s Web site can select which remote camera is displayed at the museum, control telematically the pan and zoom of the robotic doll's vision, and record video fragments into a navigable database history.

All of the dynamic media elements used in the environment are produced and combined on the fly and are not automatically recorded; each moment of the process is aleatory and ephemeral. The history database allows only Web viewers to choose to record, store, and characterize segments of the doll's vision stream, collectively creating the piece’s memory.

As suggested by Eco (1989), the piece is open: it is completed, both conceptually and experientially, by the simultaneous action of both Web and museum visitors. It is only through the museum participants' electronically transformed silhouettes and their movement that the Web users can view the deeper layers of technology. And it is only through Web participants' actions that the images from the remote camera sites are switched and made accessible to people at the museum installation.

Invocation and Interference (2000)

by Fabian Wagmister

The museum experience of Time&Time Again… is driven by a sensual connection between participants and media, while the reach of human communication through technology is addressed in the online experience of the piece. These themes are revisited in a different arena in Invocation and Interference (INx2), which was first shown at “Interferences: International Festival of Electronic Art” in Belfort, France. It begins with the idea that to communicate beyond bodily reach, prevailing over the limitations of time and space, remains a constant human desire. At the personal level this need gives rise to innumerable cultural practices that regularly overlap and collide, producing unexpected readings and relational interpretations. INx2 explores this phenomenon as experienced from a car traveling in the Argentine pampas. As one travels the immense distances of this region, two modes of very intimate communication collide in public articulation. On the one hand, the traveler encounters countless small religious shrines on the side of the road. These shrines, located in the middle of nowhere, represent promises, rememberings, gratitudes, requests to powers beyond the physical. Each shrine articulates a personal vision of popular faith and a transferring of the most intimate to the most public. At the same time, on the regional radio stations announcers regularly read personal messages destined to those who live and work in the countryside away from the reach of the telephone. These messages cover a broad set of communicational priorities, from the mundane to the tragic. For an anonymous and casual traveler, the intersection of these two communicational modes represents a significant interpretative experience.

Upon entering INx2, participants see a group of monitors of different sizes positioned on pedestals of different heights, as shown in Figure 3.

Fig. 3: The initial condition of Invocation and Interference.

From a distance, it is clear that they reveal sections of a composite video image: the landscape of the pampas as seen from a traveling car. The sound of wind and the tuning of a car radio searching for a station are heard. Upon moving towards a particular monitor to the point where it dominates the visual field, the viewer is presented with a video view of a road shrine from a static camera position. This footage is selected at random from a large database collection. The sound of radio messages plays from that monitor at low volume, forcing the viewers to get closer to hear clearly. As the viewers move closer there is a realization that bodies control a zoom effect over the video image, allowing more careful examination of the shrine. Ultimately as the sound becomes clear, the extreme level of zoom causes the images to become distorted. Each of the monitors functions independently, allowing multiple participants to navigate the piece simultaneously. Those monitors with no person nearby continue to show the passing landscape. From a distance, the group of monitors with participants in INx2 becomes a real-time dynamic collage of the superposition of a number of forces: the driving through the pampas, the shrines of faith, the radio station’s personal messages, and the interaction of the local participants.

The participants in INx2, by moving their bodies to explore the piece, create a sort of collective choreography with the video images for those watching from behind. David Saltz (1997) has argued that all computer-based participatory experiences are inherently performative. The role of the user’s presence in the system of an interactive piece takes on an element of performance as the participant does what is necessary to explore it. In another area of our research, we investigate the implications of digital technology for traditional performance work and for pieces like Hamletmachine, that are part exhibition, part installation, and part performance.

Hamletmachine (2000)

Installation by Jeff Burke, based on the play by Heiner Müller

In this piece, an original audio performance of German playwright Heiner Müller’s Hamletmachine is divided into fifteen segments using straightforward digital editing. In an installation space, first created for the Fusion 2000 conference in Los Angeles, every shard is played back simultaneously, its continual loop in time unaffected by the movements of its visitors or the state of any other sound fragment. Several bright lamps at one end of the space cast their light on a long strip of sensors at waist height on the opposing wall. A visitor, who is by action or inaction part of the performance, reveals any or all of these dialogue fragments by casting a shadow on the sensors along the wall, as seen in Figure 4.

Fig. 4: A visitor to Hamletmachine experiments with the relationship between his body, shadow, and the piece’s dialogue.

A simple relationship is constructed by a computer hidden from view: the less light on a sensor, the louder its dialogue fragment is played through the speakers in the space. The darkness of the shadow on a particular sensor controls the volume of its line at that moment without affecting the delivery itself; each sensor contains and reveals its segment of dialogue without quite allowing complete control. The different lengths of each loop ensure that the piece will almost never be the same twice.
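The relationship between shadow and volume can be sketched in a few lines. This is an illustration under stated assumptions, not the installation's software: the linear mapping, the calibration levels, and the function names are hypothetical. The paper specifies only that less light on a sensor means louder playback of its fragment.

```python
def shadow_to_volume(light, dark_level=0.0, lit_level=1.0):
    """Map a photocell reading to a volume in [0, 1]: less light, louder.

    dark_level and lit_level are assumed calibration readings for a fully
    shadowed and a fully lit sensor.
    """
    normalized = (light - dark_level) / (lit_level - dark_level)
    normalized = min(1.0, max(0.0, normalized))  # clamp noisy readings
    return 1.0 - normalized

def mix_levels(readings):
    """One volume per sensor; each sensor controls only its own fragment."""
    return [shadow_to_volume(r) for r in readings]

# Hypothetical readings: the middle sensor sits in deep shadow
print(mix_levels([1.0, 0.25, 0.0, 0.75, 1.0]))  # [0.0, 0.75, 1.0, 0.25, 0.0]
```

Because the mapping changes only the volume, each loop keeps playing at its own pace whether or not anyone is listening, which matches the description of delivery being unaffected by visitors.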

Müller’s play itself is Hamlet—the play, character, and the actor—ripped apart with German history and performed in pieces. This particular work further fragments it and presents the fragments simultaneously, with no one line or time privileged over another except by choice of the observer-participant. In some ways, it attempts what Jonathan Kalb describes about Robert Wilson’s production of the same piece:

The text, in other words, was simultaneously obliterated and preserved as a monument—like the images in it of Stalin, Mao, Lenin, Marx, and like Hamlet, the Hamlet Actor and his drama.

(Kalb 1998)

The text is both sheltered and shattered by the perfect preservation and repetition possible with digital technology, while its complementary capability for dynamic manipulation of media allows each experience to be a different collage of sound and meaning. Standing close to the strip of sensors and far from the lights, one observer-performer can only reveal a few shards of dialogue at a time, but the shadows are deep and therefore the volume of each segment is quite loud. A person standing closer to the light casts a wide shadow across many sensors, revealing all fifteen fragments at once—a cacophony as if the entire play is being performed simultaneously. In between the extremes, ducking below the sensors and extending their hands into the space, or working with another person, the observer-participants can explore many other variations of the same text.

Macbett (2001)

Directed by Adam Shive, Interactive Systems by Jeff Burke

Seeing an actor familiar with Hamletmachine work within the space to create a unique type of performance encouraged us to continue experimenting with “interactive” technology on stage, translating the experience of developing systems for media-rich installations to performance. The recent production of Eugene Ionesco’s Macbett at the UCLA Department of Theater was the department’s first performance to incorporate “interactive systems” that allowed lighting and sound to adapt automatically to performer position and movement. Macbett was produced in the process typical of large shows at UCLA. It was directed and designed by graduate students, advised by faculty, managed by department staff, with undergraduate students forming the cast and crew. Like other efforts at the HyperMedia Studio, it also involved the collaboration of students from computer science and electrical engineering, who helped to develop the technical systems concurrently with the production process (Burke, 2001).

In Macbett, we concentrated on the development of a toolset for defining relationships between performer action and media on stage, specifically the lighting and sound of the performance. The system worked in concert with the production’s normal crew and was not designed to replace them, but instead to augment the designers’ palettes with “adaptable” media components. A wireless performer tracking system was used to monitor a total of five performers and a few props used by the characters (see Figure 5).

Fig. 5: In Macbett, wireless position trackers were concealed on actors and embedded in props like this witch staff.

We developed a set of software tools that allowed large-scale theatrical lighting and sound design to adapt to actor position and movement. Six computers communicating over an ethernet network performed a variety of tasks, providing graphical interfaces, controlling lighting and sound, and “interpreting” position information to make inferences about how performers moved instead of just where they were.

Though a variety of performer-driven cues were created, the most interesting were those that did not just make complex sequences more responsive to performers, but actually showed promise of affecting the process of creating theater. For example, the primary agents of the supernatural in the play (the two witches, who also appear as Lady Macbett and her Lady-in-Waiting) were each to have their own control over the stage environment through their staffs. The first conjured thunder and lightning by raising the staff quickly in the air (the quicker and stronger the thrust, the more powerful the lightning strike), while the second witch swirled her staff to create ripples of darkness, color shifts, and the sound of whirling wind proportional to the speed of her staff. These relationships were activated at the beginning of each scene where the witches appeared and lay “dormant” until the proper action was taken, allowing the actresses to conjure them up at any point. These cues required the performers to be aware of their new capabilities on stage and to work with the director to explore how they could be most effectively used.
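A cue of this kind, dormant until a fast enough gesture occurs and then scaled by its speed, might be sketched as follows. The threshold values, units, and function names are assumptions for illustration and do not describe the production's actual software.

```python
def vertical_speed(z_prev, z_now, dt):
    """Estimate upward speed from two successive tracker height samples."""
    return (z_now - z_prev) / dt

def lightning_intensity(speed, threshold=1.0, full_speed=4.0):
    """Return 0.0 while the cue stays dormant; once the upward speed
    reaches the threshold, return an intensity that grows with speed
    and is capped at 1.0."""
    if speed < threshold:
        return 0.0
    return min(1.0, speed / full_speed)

# Hypothetical samples: the staff rises 0.5 m in 0.25 s
speed = vertical_speed(1.0, 1.5, 0.25)
print(speed, lightning_intensity(speed))  # 2.0 0.5
```

Slow movements leave the cue untouched, so the performer can carry the staff freely and trigger the effect only with a deliberate thrust.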

The Iliad Project (2002)

Performance architecture by Jeff Burke, Adam Shive, Jared Stein

Macbett was a traditional play that was quite dependent on theatrical convention. From our experience with this existing text and the traditional production process arose the desire to explore the simultaneous evolution of text, design, performance technique, and technology, rather than “attaching” or “designing in” technology to another production. This change in process brings us full circle back to the concepts and technologies found in the interactive installation work discussed initially and blends them into a performance experience. The Iliad Project aims to develop an architecture for an original performance work that draws its themes from Homer’s Iliad and its context from the city where it is performed.

This new work is being constructed as a process that the audience intersects and influences, not simply a single, repeated performance that uses new technology. It will merge an on-line exploration of the world of the piece with a combination of interactive galleries and performance spaces. Through careful integration of a database of audience information, sensing and image capture technology, and dynamically processed media, the piece will engage the audience-participants by modifying its own text and design elements based on the groups of people who visit the Web site and attend a particular performance.

The Iliad Project’s primary technological focus is audience interaction and implication through the dynamic customization of media. Where necessary, it will also incorporate dynamic control of the production environment based on performer action, as developed for Macbett.

Core Technologies

It is challenging to list all of the technologies that are redefining storytelling, the experience of artwork, and everyday life, though Stephen Wilson makes an amazing attempt in his new text (Wilson, 2002). Yet in our work we can find a set of “core technologies” that enable and inspire the creative projects listed above. Most of our technological interests run parallel with “ubiquitous” and “pervasive” computing research. This field includes the development of unobtrusive sensing technologies, wireless networks, and continued miniaturization of input/output interfaces. Mark Weiser’s seminal 1991 article “The Computer for the 21st Century” envisioned the home and office computing systems of the future that are becoming a reality today through this work (Weiser, 1991). An excellent survey of recent ubiquitous computing research can be found in (Abowd, 2000). Information on these technologies fills many books, journals, and Web sites. They are mentioned briefly here to provide a technological background for the creative work described above.

Instrumented objects and environments

Emerging sensing technologies enable new experiences for the museum, theme park, educational space, theater, and the home. Sensors provide the entry point into the loop of communication between humans and digital systems. They can measure touch, temperature, proximity, vibration, mechanical movement, and many other physical quantities. Their intrinsic capabilities, as well as our capability to process and interpret the data they provide, will shape our environments in the future. Individual devices are becoming smaller, cheaper, and more accurate; they are starting to shed their wires and could become as ubiquitous as dust (Kahn, 1999). Rapid, accurate localization (tracking) of a large number of wireless sensors appears to be feasible in the near future (Savvides, 2001). Simultaneously, our ability to use cameras and microphones as sources of information for digital systems is continually increasing, offering possibilities for real-time control through completely remote sensors (Favaro, 2000; Wren, 1997). As these technologies mature, the need for the keyboard, mouse, and tablet will fade; this will occur most rapidly in environments emphasizing content engagement over “productivity.” In our creative work, which deals with the former, we have used sensors ranging from the simple (photocells in Time&Time Again… and Hamletmachine) to the sophisticated (ultrasonic tracking in the Macbett positioning system).

In addition to new sensors still under development, the commercial sensing and factory automation industry manufactures a large variety of reliable, networked systems for acquisition of sensor data. We moved early on to using these types of systems for our “standard” sensing needs because of their reliability and the wide range of products available. The Tech Museum of Innovation in San Jose has used similar systems on a larger scale with much success (Ing, 1999).

Dynamic media control

In the works described above, sensing technologies are combined with digitally controlled media that include audio, video, and still images as well as lighting, motor control, and environmental effects. Digital control allows media to be the outlet for relationships connecting the physical world—as measured by sensors—with the digital world of databases, networks, and machine intelligence. The high bandwidth and large storage requirements of digital media are becoming less costly as the technology continues to develop, pushed along by the consumer entertainment market.

For now, our focus remains primarily on media that is not computer-generated: live and recorded video, audio, and still images. This focus reflects the strengths of our School of Theater, Film and Television and our desire for maximum engagement with minimum system complexity. (However, we have begun exploring 3D modeling for pre-production and sensor data visualization.) To implement flexible media delivery, we have used a number of media control technologies also found in museum systems. For example, multi-channel MPEG-2 hardware decoder cards have become our standard method of delivering many channels of high-quality, full-frame-rate, full-resolution video from a single workstation. For lighting control, we have developed custom software to control industry-standard DMX lighting systems using off-the-shelf controller hardware. For sound management, we have used Cycling ’74’s popular Max/MSP software package running on commodity Macintosh computers (Burke, 2001).

Databases

Digital technology’s capability for “real-time” connection-making is complemented by its ability to store and query massive amounts of information. In exhibitions, performances, and media installations, the database may be used as a repository for contextual and background information. This is familiar to anyone who has designed a large database-driven Web site. However, within an artwork or interactive experience, the database also can be considered conceptually as a work’s memory, leading artists and designers to develop interesting uses of databases without worrying at first about technological specifics. Databases can store historical events in a piece or exhibit (as with the Web history module in Time&Time Again…), remember a user’s media navigation to reflect the cultural context of the viewing public (…two, three, many Guevaras), or link the observer-participant’s experience across a variety of physical and virtual spaces (The Iliad Project). Coupled with natural language processing and other artificial intelligence techniques, databases provide a key component of interactive systems that move beyond simple “one-to-one” relationships between sensor inputs and media outputs.

Distributed glue

One of the unique qualities of the digital arena is the ease with which connections can be made between components, including sensors, media controllers, and databases. Because the components (or their controllers) share a common digital representation of information, they are ultimately separated only by conventions and protocols. When these can be bridged, digital technology allows artists to set up systems of relationships between the physical world (as it can be measured by technology), digitally controlled elements of the experience, and purely “virtual” components. Relationships might be direct (Macbett), adaptive (…two, three, many Guevaras), and/or emergent. New telecommunications networks even allow these relationships to exist almost transparently between geographically distributed components.

However, for many working with “interactive experiences” the difficulty lies in creating that initial bridge across conventions and protocols. Experimentation with connection-making is often limited by the software available and not the sensors for input or display technologies for output. Relationships between viewer-participant action and interactive work are enabled by software systems that connect or “glue together” different components of the interactive system. Our past works have used custom software developed in a variety of programming languages and authoring environments: Macromedia Director, Visual Basic, Max/MSP, and C/C++. Within the boundaries of each work, we have created flexible systems that allow experience parameters to be changed rapidly during the development and testing process. In Macbett, for example, a simple lighting control language was developed to allow authoring of “dynamic cues” by non-programmers. INx2 featured a configuration system to define different relationships between participant proximity and the media elements associated with each pedestal.

Though these were fairly flexible in their specific application, each system used a slightly different approach. To facilitate future works and encourage experimentation, we are developing a control system and associated scripting language based on our experience in creating interactive works. The two are designed to provide a consistent way for non-programmers to script interactive relationships across media boundaries, allowing databases to affect stage lighting, sensors to control video playback, participant proximity to vary sound playback, and so on. We believe the approach will have applications outside of performance, in single or multi-user interactive experiences. More information on this control system will be published in (Mendelowitz, 2002).

Once a digital bridge exists across protocols and conventions, another challenge arises: How can we develop more sophisticated relationships between these interconnected elements? Initial versions of this control system will support easily expressed relationships, but the architecture will allow the addition of fuzzy controllers, machine learning and adaptation capabilities (Marti, 1999; Not, 2000), and perhaps even experimentation with autonomous actors, as already developed by Sparacino (2000) and others.

Aesthetic framework

In cinema, there is the film stock, the moving and projected image, the camera lens, and the fixed relationship between audience and screen. Theater retains the ritual of performance: someone watches, someone performs. Despite all of the arguments about what is theatrical or cinematic, the materials of the art form define a domain of parameters for artists: the “material specificity” of the medium. Though tested, pushed, and broken repeatedly by the avant garde, this domain still provides a common ground for understanding and analysis of individual works and media forms. After the choice is made to make “a film,” there are unavoidable specifics of the medium, each with certain affordances: aspect ratio, grain, sprocket holes, a collection of still images moving quickly to generate the perception of motion, and so on.

Is there a digital analogy to material specificity, a framework from which to understand its uniqueness as an arena for creation? Certainly, “the digital” is, at its most basic, an abstract mathematical world. But modern engineering has generated digital devices, systems, theories, and approaches that create an arena with particular affordances which are defined by its material and virtual specifics. Our work has led us to suggest a digital aesthetic of context, presence, and process, enabled by digital technology’s capability to define relationships across modal and geographic boundaries. In light of the works and technology discussed above, we develop these ideas briefly and begin to extend our discussion beyond works in museums to museum experiences as a whole.

Context

[T]here is something critically useful about electronic art, even if it does not always recognize this itself. Electronic artists negotiate between the dead hand of traditional, institutionalized aesthetic discourses and the organic, emergent forms of social communication. Electronic art is an experimental laboratory, not so much for new technology as for new social relations of communication.

- McKenzie Wark (1995)

In interactive works and exhibit design, providing “context” usually implies delivering a breadth of navigable content supporting a work or collection. Digital technology is often used to provide a world of contextual information by combining the storage efficiency of databases with dynamic and/or adaptive navigation schemes. An attractive feature for informational exhibits (as well as artworks) is that it has become cost-effective, at least in terms of equipment, to provide engaging, personalized access to a large amount of content.

However, digital technology encourages the exploration of a second, more individual type of context. The digital presents the opportunity to author not just contextual content in the traditional sense, but contextual systems and interfaces that illustrate relationships between components. The author can create a system interconnecting actual components (or their metaphorical equivalents), and that system reflects, subverts, and comments upon relationships within the piece’s subject.

We have the opportunity to explore relationships with processes that, before the digital, were impossible to create because of a chasm of modality or geographic distance. Networks extend digital systems beyond the physical boundaries of the museum space, drawing in contextual information from virtual spaces (like the Web) and from different physical spaces through remote sensors. This moves beyond navigation of prebuilt contextual content to systems that place the museum, performance, or installation space squarely within the real world and its social and natural ecosystems.

For example, by the use of sensors, networks, and media control, the traffic on Santa Monica’s 405 freeway might affect the flow of media through an installation space at the J. Paul Getty Museum that overlooks it. In another place, the number of people in a public square, measured against its historical average, could control the volume of a video news broadcast in an interior space. In that space, a counter ticks off the difference like a stock quote. Would CNN’s coverage on September 11, 2001 exist in silence or blaring sound? How would this change if that video were also projected in the public space? If it were projected in a public space in another country? What relationships might be revealed by exploring the “memory” of such a system? By experiencing it in real time?
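A minimal sketch of the public-square scenario may make the relationship concrete. It assumes a simple linear mapping from crowd size to volume; the function names and the `max_ratio` parameter are hypothetical and chosen only for illustration, since the scenario itself does not prescribe how the measurement is turned into a control value.

```python
def crowd_to_volume(current_count, historical_average, max_ratio=2.0):
    """Map crowd size, relative to its historical average, onto a volume in [0.0, 1.0].

    Assumption: the mapping is linear, and a crowd of `max_ratio` times the
    historical average (or larger) plays the broadcast at full volume.
    """
    if historical_average <= 0:
        raise ValueError("historical_average must be positive")
    ratio = current_count / historical_average
    # Clamp so that an unusually large crowd cannot exceed full volume.
    return max(0.0, min(1.0, ratio / max_ratio))


def counter_delta(current_count, historical_average):
    """Difference shown on the counter that ticks like a stock quote."""
    return current_count - historical_average


if __name__ == "__main__":
    # Hypothetical readings: 120 people now, 100 on a typical day.
    print(crowd_to_volume(120, 100))
    print(counter_delta(120, 100))
```

Under these assumptions an empty square yields silence, a typical crowd plays the broadcast at half volume, and a crowd at twice the average or more plays it at full volume. A different curve, or a stored history of readings, would express a different relationship.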

In Time&Time Again…, real-time context is delivered by live cameras streaming into the museum space from throughout the local region, juxtaposed with similarly “live” actions of the on-line participants. In The Iliad Project, which might incorporate the two scenarios in the previous paragraph, cameras and sensors in the city where the piece is performed bring live data into the space, where it is recontextualized by the event’s narrative structure.

Presence

Even the most perfect reproduction of a work of art is lacking in one element: its presence in time and space, its unique existence at the place where it happens to be. This unique existence of the work of art determined the history to which it was subject throughout the time of its existence... The presence of the original is the prerequisite to the concept of authenticity... The authenticity of a thing is the essence of all that is transmissible from its beginning, ranging from its substantive duration to its testimony to the history which it has experienced.

- Walter Benjamin (1935)

Digital artists have often fought the perception that their media are somehow synonymous with “perfection” by creating complex or idiosyncratic systems that are difficult to reproduce. In some ways, they are seeking to develop systems with the “aura” that Benjamin (1935) suggests is lacking in mechanically reproduced art. We posit that a unique type of aura is perceived when an interactive system relies on the participant’s unique presence with the work in space and time. As someone exploring an experience, my body is implicated when it enables and influences the work itself. When my action, measured by sensors and affecting the environment or memory of a piece, is clearly important to that work in some way, my “performance” with the piece creates a unique sense of authenticity of experience. In stark contrast to the typical relationship between viewer and media, digital technology enables the user to influence, adapt, and explore what had previously been mechanically reproduced independent of their presence and action: the televisual and cinematic image, the media and mechanisms within a theatrical performance, the museum audio guide that plays the same audio even if the wearer is in front of the wrong work.

Moving beyond typical computer interfaces also extends today’s experience of the Web. A Web site, as customized or personalized as it can become, does not (for now) care about the user’s gaze or posture or presence in space. This isn’t an intrinsic quality of the Web, but of the interfaces that have become its typical mode of navigation: the mouse and keyboard. Ubiquitous computing pushes us towards alternate interfaces of gesture, spoken word, position, body language, and eye contact. Unlike the keyboard and the screen, these interfaces require the conversational attention of our bodies. As they change our interaction with the computer, these interfaces create difficulties for spaces and experiences built around traditional audience / art boundaries. They force us to interact with a performance, exhibit, or installation (perhaps now an art system instead of an object) as we would with other people, by making noise and moving around. What is exciting in the home and in educational spaces, experiential rather than observational participation, seems threatening to the traditional experience of museums, cinema, and theater.

For years, children’s and science museums have had an advantage over art museums in this regard because they construct hands-on exhibits that allow visitors to touch or manipulate objects. (Schwarzer, 2001)

In the rush to use digital technology for unique personal experiences, keeping the art museum quiet, perhaps we miss new possibilities for social interaction while experiencing art.

The wearable computer and wireless Personal Digital Assistant (PDA) are a focus of attention for many museums because they maintain a “noiseless,” personal experience, much like the audio guide, while providing many of the advantages of digital media. Such devices provide the annotated and enriched experiences described by Schiele (2001) and Spohrer (1999). Schwarzer (2001) points out that this technology can also be used to engage viewers in stories about the history and context of artworks. Yet no matter how dynamic the navigation between (or even inside) the stories, this does not necessarily escape a traditional observational model. The PDA ties up the hands, fixating the body on observing and manipulating its interface instead of the artwork. Without care, the aesthetic of presence may be lost in the aesthetics of point-and-click and handwriting recognition.

[Hypermedia] allows them to choose their own paths through the work. But it does not cast viewers as participants within the work itself simply by virtue of employing a hypermedia interface.

(Saltz, 1997)

Can we use sensing technologies in combination with wearable computers or PDAs to immediately cast the observer as participant in these stories or in the works themselves? Can we make their unique presence an important part of the experience?

Process

We have, therefore, seen that (1) ‘open’ works, insofar as they are in movement, are characterized by the invitation to make the work together with the author and that (2) on a wider level (as a subgenus in the species ‘work in movement’) there exist works, which though organically completed, are ‘open’ to a continuous generation of internal relations that the addressee must uncover and select in his act of perceiving the totality of incoming stimuli.

– Umberto Eco (1989)

Casting the observer as an actor / co-author / participant in a digitally mediated experience requires the author to accept willingly the “risk” that what is deemed important may be superseded by what the piece’s users bring in. It forces a different role for the author and curator. In addition to creating traditional “content,” the author must define relationships that connect the participant’s presence and context. With this comes an emphasis not on a final product or an intrinsically complete work, but on open processes and systems into which all of these components are integrated: action as discovered by sensors, media content created previously or recorded live, and navigation systems that adapt a narrative or thematic structure to a particular user.

Pieces like …two, three, many Guevaras and Time&Time Again... illustrate that the inclusion of users in the process of adaptation requires a different kind of authoring, but not a relinquishment of the author’s voice or theme. In Macbett, we “risked” giving direct influence over the design to the actor, breaking apart the power relationships already discarded in the poor theatre but difficult to remove in mediatized performance settings. In return, we discovered that rich media experiences are possible without requiring mechanical accuracy from the actors. In The Iliad Project, we take a further step, constructing the experience of the collective audience so that it depends on their individual responses and action. We cast the uninitiated in a role that is not quite performer, but more than passive audience member or extra. In installations, performances, and museum exhibits, digital technology can enable participation in a process, not just navigation of an existing, hermetic collection or exhibit.

Conclusion

To performance, installation, and exhibit design, digital technology brings the same creative challenges: context rather than isolation, presence instead of disembodiment, and process over product. Each element of the digital aesthetic suggested here is probably more difficult to implement than its alternative. But together they relate our impression of the unique nature of “the digital” that cuts across a wide range of technologies and systems. Surprisingly, we find digital technology, viewed in this light, encourages a tendency towards imperfection, unpredictability, and openness that, in return, can bring deeper audience engagement and explore new social experiences of art.

Acknowledgements

…two, three, many Guevaras by Fabian Wagmister in collaboration with David Kunzle, Roberto Chile, Daniel Deutsch, Fremez, and Dara Gelof. Time&Time Again… by Lynn Hershman and Fabian Wagmister. Team: Joel Schonbrunn, Lior Saar, Dara Gelof, Jeff Burke, Palle Henckel, Lisa Diener, Silke Albrecht. Invocation and Interference by Fabian Wagmister. Installation production by Dara Gelof and Jeff Burke. Hamletmachine installation by Jeff Burke based on text by Heiner Müller. Dialogue – Adam Shive, Meg Ferrell. Eugene Ionesco’s Macbett directed by Adam Shive. Scenery – Maiko Nezu; Costumes – Ivan Marquez; Lighting – David Miller; Sound – David Beaudry; Interactivity – Jeff Burke and the HyperMedia Studio; Dramaturg – Sergio Costola; Stage manager – Michelle Magaldi; Production manager – Jeff Wachtel. The Iliad Project – architecture by Jeff Burke, Adam Shive, Jared Stein. Advisors – Fabian Wagmister, Jose Luis Valenzuela, Edit Villareal; Developers – Eitan Mendelowitz, Joseph Kim, Patricia Lee; Web developers – Caroline Ekk, Laura Hernandez Andrade, Stephan Szpak-Fleet; Production coordinator – D.J. Gugenheim.

References

Abowd, G., Mynatt, D. (2000). Charting past, present, and future research in ubiquitous computing. ACM Trans. on Computer Human Interaction 7, 29-58.

Benjamin, W. (1968). The Work of Art in the Age of Mechanical Reproduction. In W. Benjamin & H. Arendt (Eds.) Illuminations. New York: Harcourt Brace Jovanovich, 217-251.

Burke, J. (2002). Dynamic performance spaces for theatre production. Theatre Design & Technology 38.

Eco, U. (1989). The Open Work. Cambridge, Massachusetts: Harvard University Press.

Favaro, P., Jin, H., Soatto, S. (2000). Beyond point features: Integrating photometry and geometry in space and time: A semi-direct approach to structure from motion. Tech. Report CSD-200034, UCLA Department of Computer Science.

Ing., D. S. L. (1999) Innovations in a technology museum. IEEE Micro 19:6, 44-52.

Kahn, J. M., Katz, R. H. and Pister, K. S. J. (1999). Mobile Networking for Smart Dust. ACM/IEEE Intl. Conf. on Mobile Computing and Networking (MobiCom 99), Seattle, WA, August 17-19.

Marti, P., Rizzo, A., Petroni, L., Tozzi, G., Diligenti, M. (1999). Adapting the museum: a non-intrusive user modeling approach. Proc. of the 7th Intl. Conf. on User Modeling, Banff, Canada, 20-24 June 1999. Wien, Austria: Springer, 311-313.

Mendelowitz, E., Burke, J. (2002). A distributed control system and scripting language for 'interactivity' in live performance. To be presented at the First International Workshop on Entertainment Computing, Makuhari, Japan.

Not, E. and Zancanaro, M. (2000). The MacroNode Approach: Mediating between adaptive and dynamic hypermedia. Proc. of Intl. Conf. on Adaptive Hypermedia and Adaptive Web-based Systems, Trento, Italy, 28-30 Aug. 2000. Berlin, Germany: Springer-Verlag, 167-178.

Saltz, D. Z. (1997). The Art of Interaction: Interactivity, Performativity, and Computers. J. Aesthetics and Art Criticism 55, 117-127.

Savvides, A., Han, C. and Srivastava, M. (2001). Dynamic fine-grained localization of ad-hoc networks of sensors. Proc. of 7th ACM/IEEE Intl. Conf. on Mobile Computing and Networks (MobiCom’01). Rome: ACM. 166-179.

Schiele, B., Jebara, T., and Oliver, N. (2001). Sensory-augmented computing: Wearing the museum’s guide. IEEE Micro 21:3, 44-52.

Schwarzer, M. (2001). Art & Gadgetry: The Future of the Museum Visit. Museum News July/August 2001. Accessed via http://www.cimi.org/whitesite/Handscape_Gadgets_Schwartze.htm.

Sparacino, F., Davenport, G. and Pentland, A. (2000). Media in performance: Interactive spaces for dance, theater, circus, and museum exhibits. IBM Systems Journal 39, 479-510.

Spohrer, J. (1999). Information in Places. IBM Systems Journal 38:4, 602-628.

Wagmister, F. (2000). Modular Visions: Referents, Context, and Strategies for Database Open Media Works. AI & Society 14, 230-242.

Wark, M. (1995). Suck on this, planet of noise! In S. Penny (Ed.), Critical Issues in Electronic Media. Albany, New York: State University of New York Press.

Weiser, M. (1991). The Computer for the 21st Century. Scientific American 265:3, 94-104.

Wilson, S. (2002). Information Arts. Cambridge, Massachusetts: MIT Press.

Wren, C., Azarbayejani, A., Darrell, T. and Pentland, A. (1997). Pfinder: Real-Time Tracking of the Human Body. IEEE Trans. on Pattern Analysis and Machine Intelligence 19, 780-785.