1. Introduction

The Canadian Heritage Information Network (CHIN) is a special operating agency of the Federal Government’s Department of Canadian Heritage. CHIN is a national centre of excellence that provides a visible face to Canada’s heritage through the world of networked information. CHIN’s mission is to promote the development, the preservation, and the presentation of Canadian digital cultural content. CHIN’s network contains over 1,200 museum members across Canada.

CHIN has two main public products through which cultural content and services are served. CHIN’s Knowledge Exchange is an on-line participative space for museum professionals. It offers a variety of services and tools, including communities of practice, collaborative tools, courses, case studies, tip sheets, interviews, Web casts, on-line working groups, and more.

The Virtual Museum of Canada (VMC) brings Canada’s rich and diverse heritage into Canadian homes and schools. The VMC gives member museums the ability to reach Canadians and an international audience via the Internet by featuring a large image repository of over 600,000 digital artefacts, over 200 virtual exhibits and games, access to learning resources and lesson plans, and a guide to Canadian museum services and events. With over 41 million visits from more than 170 countries since its launch in March 2001, the VMC is now established as one of the world’s premier heritage gateways.

While always looking to evolve and further advance our products for the public, CHIN also attempts to undertake initiatives which will benefit our membership. Many CHIN members are medium or small institutions which may not have the necessary resources to undertake certain activities. As such, capacity building, knowledge transfer and facilitation are key objectives of CHIN when undertaking any project.

1.1 Working with Images

The Canadian Heritage Information Network’s Virtual Museum of Canada (VMC) is currently being redesigned. Work on the evolution of the VMC began in late fall 2007 with a target to publicly launch the new product in fall 2008. One of the concept directions of the new VMC is to help users find the content they desire in as simple and quick a manner as possible. To address this goal, new and alternative browsing and searching technologies and methodologies were investigated for potential implementation within the new VMC. This led to a partnership between CHIN and the National Research Council of Canada (NRC), and the implementation of the NRC’s Nefertiti technology within the VMC’s Image Gallery.

The VMC’s Image Gallery provides users with access to over 600,000 images. Users will be able to browse and search images through the VMC’s navigation, search engine, and content featuring. With Nefertiti’s inclusion, users will be able to visually search, and refine searches, for images based on colour and shape, as well.

The use of this technology is relevant in a number of ways. The 600,000 images found within the VMC’s Image Gallery are contributed to Artefacts Canada by heritage institutions across Canada. With over 1,200 institutions contributing data, some inconsistencies in metadata are inevitable. These inconsistencies affect the findability of images, as search engines typically rely on metadata to retrieve them. Nefertiti operates on the physical appearance of an image, so its results do not depend on the validity or quality of the metadata. This provides an excellent alternative method of accessing content for VMC users.

Additionally, VMC user analysis has revealed a multitude of user types. Each user type has unique behaviour patterns. Providing the ability to visually retrieve content may appeal to certain specific user types. It also ensures that language is not a barrier.

The collections of any museum or heritage institution can be vast. The ability to find images and return result sets through colour and shape can be very relevant both as an internal system for professionals and as a public system on an institution’s Web site. By incorporating Nefertiti within the VMC’s Image Gallery, CHIN’s members get a first-hand and in-depth look at how such a technology operates and is used. CHIN members are also privy to research conclusions, statistics, reports and case studies.

1.2 Working with 3D

While many struggle to get up to speed with Web 2.0, there has already been a great deal of discussion about “Web 3D”. Currently, the VMC has some 3D content in the Inuit 3D Exhibition created by the Canadian Museum of Civilization in March 2001. The 3D technology used within this exhibition was provided by the NRC and was considered very progressive six years ago.

With the forthcoming launch of the new VMC, several technologies were investigated to enhance the way cultural content is presented to the public. Working with the NRC, the McCord Museum and the Canadian Space Agency (CSA), CHIN decided to undertake a 3D research project.

Artefacts from the McCord and the CSA are digitized to create 3D models. These models are then presented in a context through a narrative called Explore: From Yesterday to Tomorrow. The project comprises complementary virtual and physical components.

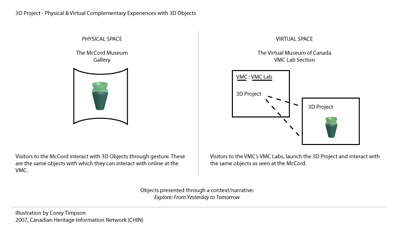

Figure 1: A visual outline of the 3D project concept

The physical component takes place at an installation within the McCord Museum. Users manipulate the onscreen 3D artefacts through gesture: hand gestures are motion-captured and translated into onscreen movements of the 3D objects.

The virtual component will be in the VMC Lab – a section of the new VMC which will present experimental research projects. Users will enjoy the same experience and content as in the physical space, and use the mouse for manipulating the scaled 3D objects.

There are multiple objectives for this project. It is expected that in the future, visits to virtual museums will be richer experiences through the use of 3D technologies that can mimic natural, in-person experiences. The new VMC will have a modest sampling of 3D content for its launch; it will provide invaluable data for future projects.

A case study will be conducted to document and analyze user interaction behaviour, technology performance, and the complementary use of the VMC on-line and in-house McCord components. The case study will be published on CHIN’s Knowledge Exchange (KX) which is available to all CHIN members.

The 3D artefacts will also be ready for inclusion as the first 3D content in Artefacts Canada – a repository of national cultural content containing over 3 million records and images from hundreds of museums across Canada.

2. General Considerations on Images

Pictures and images are of the utmost importance in virtual collections. They are (and will remain in the foreseeable future) the easiest, fastest and most economical means for creating virtual collections. Furthermore, most three-dimensional models are covered with textures. Textures constitute an important visual descriptor for viewers and convey essential historical, artistic and archaeological information.

Images are difficult to describe: they convey vast amounts of complex and ambiguous information. Ambiguity results when the three-dimensional world is projected as a two-dimensional image and when the illumination of this world is arbitrary and cannot be controlled. Because of this ambiguity and complexity, it is difficult to segment images and to understand them. For these reasons, we propose a statistical approach in which the overall composition of the image is described in an abstract manner.

2.1 Indexing and Retrieval of Images

We now describe our algorithm. The colour distribution of each image is described in terms of hue and saturation. This colour space imitates many characteristics of the human visual system. The hue corresponds to our intuition of colour (e.g. red, green or blue), while saturation corresponds to the strength of the colour (e.g. light red or deep red).
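As a minimal illustration of the hue and saturation description, the sketch below converts RGB values using the HSV model from the Python standard library. The choice of HSV (rather than another hue-saturation model) and the helper name `hue_saturation` are assumptions made for this example; the text above does not specify the exact colour transform.

```python
import colorsys

def hue_saturation(r, g, b):
    """Return (hue, saturation), each in [0, 1], for RGB channels in [0, 1].

    Assumption: the HSV model is used; the value (brightness) channel is dropped.
    """
    h, s, _value = colorsys.rgb_to_hsv(r, g, b)
    return h, s

# Deep red and light red share the same hue but differ in saturation.
deep_red = hue_saturation(1.0, 0.0, 0.0)
light_red = hue_saturation(1.0, 0.5, 0.5)
print(deep_red, light_red)
```

Both colours map to the same hue, and only the saturation distinguishes them, which matches the intuition given in the text.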

Next, a set of points is sampled from the image. A quasi-random sequence generates the points, and each point of this sequence becomes the centre of a structuring element. For each centre position, the pixels inside the corresponding structuring element are extracted, and their hue and saturation values are calculated. The statistical distribution of the colours within this window is characterized by a bidimensional histogram. This histogram is computed and accumulated for each point of the sequence: the running histogram is the sum of the histograms at the current position and at all previous positions. From this process, a compact descriptor or index is obtained.
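The indexing steps above can be sketched as follows. This is an illustrative sketch, not the Nefertiti implementation: the Halton sequence as the quasi-random generator, the square window, the bin count, the final normalization, and all function and parameter names (`halton`, `colour_index`, `n_points`, `half_window`, `bins`) are assumptions introduced for the example.

```python
import colorsys

def halton(index, base):
    """Return element `index` (1-based) of the van der Corput sequence in `base`."""
    result, fraction = 0.0, 1.0 / base
    while index > 0:
        index, digit = divmod(index, base)
        result += digit * fraction
        fraction /= base
    return result

def colour_index(image, n_points=64, half_window=2, bins=8):
    """Accumulate a hue-saturation histogram over quasi-randomly placed windows.

    `image` is a list of rows; each row is a list of (r, g, b) tuples in [0, 1].
    Returns a bins x bins histogram normalized to sum to 1 (an assumption).
    """
    height, width = len(image), len(image[0])
    hist = [[0.0] * bins for _ in range(bins)]
    for i in range(1, n_points + 1):
        # Quasi-random centre: Halton point (bases 2 and 3) scaled to pixels.
        cx = int(halton(i, 2) * width)
        cy = int(halton(i, 3) * height)
        # Square structuring element, clipped at the image border.
        for y in range(max(0, cy - half_window), min(height, cy + half_window + 1)):
            for x in range(max(0, cx - half_window), min(width, cx + half_window + 1)):
                h, s, _value = colorsys.rgb_to_hsv(*image[y][x])
                hue_bin = min(int(h * bins), bins - 1)
                sat_bin = min(int(s * bins), bins - 1)
                # Accumulate into the running histogram.
                hist[hue_bin][sat_bin] += 1.0
    total = sum(map(sum, hist))
    return [[count / total for count in row] for row in hist]

# Toy example: a uniformly red 16 x 16 image falls into a single bin.
red_image = [[(1.0, 0.0, 0.0)] * 16 for _ in range(16)]
index = colour_index(red_image)
```

Because the histogram is accumulated over many small windows rather than over fixed regions, the resulting index carries no information about where in the image a colour occurred.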

This index provides an abstract description of the image’s composition; in other words, of the local distribution of colours throughout the image. This index does not represent a global description of the image, nor is it based on a particular segmentation scheme. Instead, it characterizes the statistics of colour distribution within a small viewing area that is moved randomly over the image. Consequently, there are no formal relations between the different regions: the different components of a scene can be combined in various ways and still be identified as parts of the same scene. That is why the algorithm is robust against occlusion, composition, partial view and viewpoint. Nevertheless, this approach provides a good level of discrimination.

The maxim ‘an image is worth a thousand words’ illustrates the difficulty of describing an image by means of words alone. For that reason, our retrieval approach is based on the so-called ‘query by example’ or ‘query by prototype’ paradigm. To this end, we created a search engine that can handle such queries. In order to initiate a query, the user provides an image or prototype to the search engine. The prototype is indexed, and its index is compared, by means of a metric, with a database of pre-calculated indexes corresponding to the images of the virtual collection. The search engine finds the images most similar to the prototype and displays them to the user. Here, the user’s own sense of recognition guides the subsequent search: the user chooses the most meaningful image from the results provided by the search engine and repeats the query process from the chosen image. The process is repeated until convergence is achieved.
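The query-by-example loop can be sketched as a ranking of pre-calculated indexes by their distance to the index of the prototype. The text does not name the metric used, so the L1 (city-block) distance below is an assumption, as are the function names, the flat-list representation of an index, and the toy database entries.

```python
def l1_distance(index_a, index_b):
    """City-block distance between two indexes stored as flat lists of floats."""
    return sum(abs(a - b) for a, b in zip(index_a, index_b))

def query_by_example(prototype_index, database, top_k=5):
    """Return the names of the `top_k` database images closest to the prototype.

    `database` maps an image name to its pre-calculated index.
    """
    ranked = sorted(database, key=lambda name: l1_distance(prototype_index, database[name]))
    return ranked[:top_k]

# Hypothetical pre-calculated indexes (three-bin histograms for brevity).
database = {
    "amphora_front": [0.7, 0.2, 0.1],
    "amphora_side": [0.6, 0.3, 0.1],
    "figurine": [0.1, 0.2, 0.7],
}

# First query: the user supplies a prototype.
results = query_by_example([0.7, 0.2, 0.1], database, top_k=2)

# Refinement: the user picks one of the results and queries again from it.
refined = query_by_example(database[results[1]], database, top_k=2)
```

The refinement step mirrors the iterative process described above: each chosen result becomes the prototype of the next query until the user is satisfied.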

2.2 Application to the EROS Database

The C2RMF (Centre de recherche et de restauration des musées de France) has been a pioneer in applying new technologies in the field of cultural heritage. The C2RMF’s participation in the cultural heritage field began in 1989 and involved the high-quality digitization of images via a modified Thomson-Broadcast flatbed scanner developed for the NARCISSE project. There was subsequently direct digital imaging and panoramic viewing of objects, direct 3-D acquisition of the surface of paintings, and finally, multispectral colour reconstruction.

All these techniques give us an enormous amount of data and information to organize and exploit. The information is focused on scientific and technical data. This includes indexing vocabularies, study reports, restoration reports, digital data from quantitative analysis, spectra, graphs, chemical formulae, UV, infrared, raking light photography, and scanning electron microscopy images. As computation capability continues to grow at a steady rate, user requests for new features and usability improvements have grown correspondingly. The C2RMF has integrated these new advanced techniques and updated the EROS system.

The EROS system is organized in several parts: the storage back-end, the relational database, the image server, the middleware and the Web server. The following data are stored on 15 TB HP RAID hard disk racks managed by a file server:

- the metadata related to the works of art; the images; the reports; the analysis; the analytical reports; the restoration reports; the conservation surveys; the chemical, structural, isotopic and molecular quantitative and qualitative analytical results; and published papers;

- high definition digital images (photographic films taken with different techniques such as infra-red, X-ray and ultra-violet light, detailed cross-sections, electron microscopy views, graphs, spectra, multispectral images, panoramic views and 3-D models);

- feature vectors for 2-D and 3-D image content recognition for automatic classification and image category retrieval.

The EROS system is an Open Source project available under the GNU General Public License (GPL). It is based on powerful and industry-leading free software such as Linux, Apache, MySQL, PHP, and now Ruby and the Ruby on Rails framework. Web access is via a W3C standard-compliant client such as Mozilla Firefox.

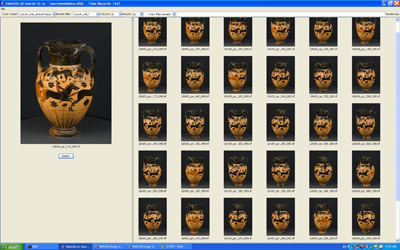

Figure 2 shows the retrieval of all the views related to a single Chalcidian amphora from a single view. This amphora, held at the Musée du Louvre (Paris, France), was made during the Archaic Greek Period (620-480 B.C.) and was found in the South of Italy. This figure is an important example as the database user might be unaware that multiple views of the same amphora are stored in the database.

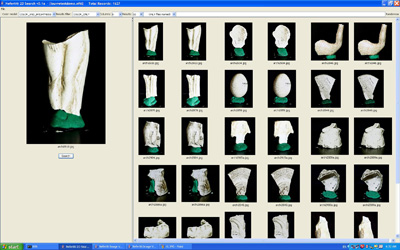

Our last example of pictorial interrogation is shown in Figure 3. This example is typical of situations in which one wants to retrieve a certain group of pictures that are very similar but that do not necessarily correspond to the same artefact. Such a group can be formed, for instance, from various figurines representative of a particular style and made from the same kind of material.

The reference image represents a broken Venus from which other sculptures of the same style are retrieved. The Venus belongs to the Gallo-Roman Period and was made between 40 B.C. and 300 A.D. All the figurines are made of ceramic and pottery. Currently, they can be found at the Musée du Chatillonnais (Chatillon-sur-Seine, France). Unless the database is very well documented, such a query is extremely difficult without a content-based system because the database user must have some knowledge both of the artefact per se and of the period. Once more, our results were validated with a textual query in the EROS Database.

Our results have shown the efficiency of our algorithms. In many situations, content-based retrieval has proven itself to be not only a complement to text-based retrieval, but also a sine qua non condition for efficient retrieval. In any case, synergy between text-based and content-based data should be exploited to the maximum.

At this stage, about 600,000 paintings have been indexed at low resolution and 14,000 at high resolution. The low-resolution calculations have been performed on a high-end laptop while the indexes for the high-resolution paintings have been calculated in Paris on the C2RMF server. The fact that 600,000 paintings can be indexed on a laptop shows how well optimised the algorithms are.

3. Demotride 3D Viewer

Demotride is a 3D scene viewer that allows visualization of, and interaction with, 3D objects and scenes on the computer screen. Designed to be user-friendly, Demotride respects visualization parameters defined by content developers. It works with content files based on the VRML/X3D international standards (International Organization for Standardization, 1997; 2005). These standards for 3D scene description have been chosen because they are open; widely known and used; well documented; well supported by 3D content development tools; and freely available on the Web. Furthermore, these standards allow anyone to create 3D content by simply using a text editor such as Notepad.
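To illustrate how little text a VRML scene requires, the sketch below builds a minimal VRML97 scene (a single coloured box) as a plain string. The function name `vrml_box_scene`, its parameters and the default colour are hypothetical; the same text could equally be typed by hand in a text editor.

```python
def vrml_box_scene(size=2.0, colour=(0.8, 0.1, 0.1)):
    """Return the text of a minimal VRML97 scene containing one coloured box."""
    r, g, b = colour
    return (
        "#VRML V2.0 utf8\n"  # mandatory VRML97 header line
        "Shape {\n"
        "  appearance Appearance {\n"
        f"    material Material {{ diffuseColor {r} {g} {b} }}\n"
        "  }\n"
        f"  geometry Box {{ size {size} {size} {size} }}\n"
        "}\n"
    )

scene = vrml_box_scene()
print(scene)
```

Saving the printed text in a file with the `.wrl` extension should give a scene that a VRML97-compliant viewer can open.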

Demotride also allows interaction with multimedia content, including text, images, sounds and animations.

Demotride's technology is used in research on human-computer interaction (HCI) for several projects. As an HCI testbed, it is used for rapid prototyping and evaluation of new 3D user interfaces.

Although Demotride's technology is generic and can be adapted to many uses, it could be particularly useful for scientific visualization and virtual tours and presentations, such as virtual museums.

Demotride is also freely available on the Web for personal and academic use, as well as for research and development. Since its first public release in 2004, new versions have been released regularly, each adding functionality and improving usability. The latest version offers unique features such as the ability to control key rendering and interaction parameters.

Demotride’s technology is used to conduct experiments in HCI (Lapointe et al, 2007; Savard and Lapointe, 2006) as well as to test 3D interaction techniques for specific content; for example, by combining the Inuit3D virtual museum (Corcoran et al, 2002) with a new joystick-based navigation interface, or by developing space-time interaction techniques for 3D content (El-Hakim et al, 2006).

This viewer has been designed from the start for usability on the Web – to maximize effectiveness, efficiency and user satisfaction for Web users who are interested in visualizing and interacting with virtual environments on the Web in a simple and easy manner.

To achieve these goals, we worked to keep the download size to a minimum (currently under 1 MB), which reduces download time and makes the technology accessible to all Internet users, including those using dial-up connections. Developed by the National Research Council of Canada, Demotride is currently available in French and English, the two official languages of Canada.

Demotride is evolving software: the aim of future releases will be to further increase its functionality and usability.

3.1 Application for Museums

Apart from the applications mentioned above, we recently used Demotride’s technology to demonstrate proof-of-concept gesture-based interaction with 3D content on a large display. Interactive gesture-based kiosks that display 3D content have considerable potential to attract the attention of museum visitors.

From a Web perspective, virtual visitors can also benefit from 3D content through the use of interactive 3D visualization technologies. There is, however, a need to better assess the capability of these technologies to improve visitors’ experience and satisfaction.

With that in mind, we are currently collaborating with the McCord Museum and the Canadian Space Agency to further improve the usability of interactive 3D visualization technologies on the Web. The idea is to develop a systematic evaluation method to measure the usability of these technologies for museum applications.

Such a method will allow the objective measurement of the usability of 3D viewers and as such, will allow us to improve these tools. These improvements will benefit both users and the overall development of the field, with all the socio-economic impacts expected for this new form of media.

Finally, CHIN is interested in using Demotride as a viewing technology for the creation of a 3D component within its VMC Lab on the new Virtual Museum of Canada site. In this way, we can offer a glimpse into the world of 2020, 2050, and beyond. The interactive capabilities of the viewer will allow visitors to see, experience, interact with and manipulate environments of the future.

4. Conclusion

Imagine a collections manager browsing through thousands of images, unconcerned with language or metadata, concerned only with the physical appearances of the images. Or imagine a 9-year-old browsing through images on a museum Web site, unconcerned with spelling and syntax. Imagine a museum visitor or research student, hundreds of kilometres away from a museum, rotating, zooming in, and playing with an ancient artefact. Both Nefertiti and Demotride (available on the Web at http://www.demotride.net) offer incredible potential to heritage institutions, both for professional operational purposes, and for greatly enriching the experience of their visitors.

This paper has briefly presented some technologies that the Canadian Heritage Information Network (CHIN) is using in collaboration with the National Research Council of Canada (NRC), the McCord Museum, and the Canadian Space Agency (CSA). By incorporating these research projects into its Web sites, CHIN allows its Web visitors to search and interact with digitized artefacts in new ways. At the same time, this gives CHIN the ability to collect valuable research data on new technology, user behaviours, and the relationship between complementary virtual and physical exhibitions, and to compile and share the results with its network of member museums.

Acknowledgements

The authors wish to acknowledge the core teams of both the VMC Nefertiti and the 3D Explore: From Yesterday to Tomorrow projects for their great work and collaboration from concept through implementation and study. Thanks also go to the McCord Museum and Nicole Vallières, and to the Canadian Space Agency and Marie-France Fortier, for partnering with the 3D project. The authors also wish to thank Scott Cantin for his work in editing this paper.

References

Corcoran, F., J. Demaine, M. Picard, L.-G. Dicaire, J. Taylor. "INUIT3D: An Interactive Virtual 3D Web Exhibition". Museums and the Web 2002: Proceedings. Ed. J. Trant and D. Bearman. Boston, Massachusetts, USA. April 17-20, 2002. Available: http://www.archimuse.com/mw2002/papers/corcoran/corcoran.html The Interactive Virtual 3D Web Exhibition is also available on the Web: http://www.civilization.ca/aborig/inuit3d/inuit3d.html

El-Hakim, S. F., G. MacDonald, J.-F. Lapointe, L. Gonzo, M. Jemtrud. "On the Digital Reconstruction and Interactive Presentation of Heritage Sites through Time". Proceedings of the 7th International Symposium on Virtual Reality, Archaeology and Intelligent Cultural Heritage (2006). October 30 – November 4, 2006, Hilton Nicosia, Cyprus, pp. 243-250.

ISO. ISO/IEC 14772-1:1997. Information technology - Computer graphics and image processing - The Virtual Reality Modeling Language (VRML) - Part 1: Functional specification and UTF-8 encoding. International Organization for Standardization (1997).

ISO. ISO/IEC 19776-2:2005(E). Information technology - Computer graphics, image processing and environmental data representation - Extensible 3D (X3D) encodings - Part 2: Classic VRML encoding. International Organization for Standardization (2005).

Lapointe, J.-F., and P. Savard. "Comparison of Viewpoint Orientation Techniques for Desktop Virtual Walkthroughs", Proceedings of the IEEE International Workshop on Haptic Audio Visual Environments and their Applications (HAVE 2007). Ottawa, Ontario. October 12-14, 2007. pp. 33-37.

Savard, P., and J.-F. Lapointe. "Conception d’un outil pour l’étude des techniques de déplacement en environnement virtuel". Actes de la 18e conférence francophone sur l’interaction humain-machine. IHM 2006, 18 au 21 avril 2006, Montréal, Québec, Canada, pp. 269-272.