Introduction

We present two completed exhibits as examples of immersive museum experiences. Navigable with a Wii controller, the immersive system inspires user interest and interactive learning in virtual, three-dimensional worlds. Immersive systems are uniquely adaptable to many types of museum content and provide the public something not accessible from home. The system is transportable and made from off-the-shelf components. It portrays 3D environments via five screens that wrap around the subject's position, delivering a kinesthetic response that ties vision to a bodily sense of space. Surround sound audio further builds spatial reference and ambience. Similar to a gaming experience, the virtual environment is intuitively designed, and thus highly attractive to viewers of all ages and not limited by one's physical mobility. The projects presented here are collaborative creations by artists and scientists at the Visualization Lab, Texas A&M University. They were exhibited at SIGGRAPH 2008, Los Angeles, and SIGGRAPH 2009, New Orleans. The exhibit Atta, 2008, maps tunnels and chambers of a vast leafcutting ant colony. An 8x8 meter Ground Penetrating Radar (GPR) scan was translated into a 3D model. Our team was the first to use GPR to map a living ant colony. The exhibit I'm not there, 2009, explores animal sensing by simulating a stroll through a natural landscape, seeing in ultraviolet frequencies, and hearing in ultrasonic and infrasonic ranges. Frequency scaling techniques were employed to translate audio signals to the range of human perception, and coloration was mapped to signals in the ultraviolet range. In the immersive exhibit, we observed viewers actively listening to an animal call and using the Wii controller to navigate toward it, in order to link the sound of the animal with its appearance. This is difficult to do in the wild.
The immersive system offers situations that teach by demonstration and delight, providing viewers an opportunity to experience phenomena heretofore impossible to imagine.

Project 1: I'm not there: extending the range of human senses to benefit wildlife corridors

How many experiences do we miss - either through inattention, or our own limitations - when walking through the woods, or diving with scuba mask and flippers? All around us, animals communicate and perceive with senses quite different from our own, having evolved from particular survival and reproductive needs. Just as mankind has employed technology to overcome limitations of physical strength, dexterity, and distance, so also can we imagine technologies that enable us to extend our senses by taking cues from birds, whales, and other animals. What motivates our study is a wish to encourage empathy with and curiosity about other species, the environment, and our place within it.

What results from the study of morphology and behavior of earth's creatures is often more questions. Such questions provide a rich ground for exploration, and can lead to inventions not yet imagined. This project uses scientific research as the basis for a system designed to increase human appreciation of the natural environment. Our ultimate ambition is to create an interactive, real-time, global, subscription-based "nature channel." The project would provide freedom to roam remote places with enhanced senses and, as a result, benefit wildlife corridors around the world.

Scope of the project

At the conference Special Interest Group on Computer Graphics and Interactive Techniques (SIGGRAPH) 2009, New Orleans, we presented an immersive experience that allows one to navigate a remote island environment, home to terrestrial and marine animals. The system offered different modes combining navigation, sight, and sound developed from scientific studies of selected animals. Our landscape, navigable with a Wii controller, provided sensing abilities that extend the range of human perception through simulating that of the subject species. One could see in the ultraviolet spectrum and hear ultrasound and infrasound animal and insect vocalizations.

Ultimately, we envision a real-time version of the project, with broad geographic scale and audience. Imagine a system of image and audio capture stations across the world sending high-definition views and sounds of the last unspoiled environments to your digital television. In cinematic terms, high-definition panoramas would form the establishing shots for your personal interactive wildlife movie. The system would then access close-up views through other lenses, modified to present ultraviolet or infrared images, and audio beyond human range.

Wireless capture stations placed in areas traversed by local fauna would transmit motion-activated images in real time, modified for enhanced senses. If such an approach became popular on a large scale, it could lead to greater awareness and support for protection of wildlife corridors so essential to the survival of threatened species. This technology would afford citizens the ability to both "be there" and "not be there" - allowing greater habitat and greater freedom of movement for animals. If the system were offered on a subscription basis, income could finance the purchase and maintenance of land to expand the extent of wildlife corridors.

SIGGRAPH 2009

Fig 1: Visitors to SIGGRAPH travel through a virtual landscape using a Wii controller

The elements of our prototype demonstration for SIGGRAPH were displayed on a 5-screen interactive immersive system. The immersive system portrays space from the subject's viewpoint, resulting in a kinesthetic response that ties vision to a bodily sense of space. Surround sound audio further builds spatial reference and ambience (LaFayette et al., 2009).

The terrain for our demonstration is an island that affords both terrestrial and marine environments. A particular island in the Pacific, several hundred miles southwest of Costa Rica, serves as a model. Cocos Island, near the Galapagos, is home to a number of species ideal for this study. 3D models of the island terrain and habitat were collected and prepared for the immersive system.

Representative fauna were distributed throughout the terrain. Content for the immersive system was developed through studies of scientific research on animal sensing. Audio content was derived from existing recordings of animal vocalizations. Color palettes for 3D models of terrain, flora, and fauna resulted from a study of current image-capture technology, so that reproduction of different light wavelengths was consistent with existing imaging techniques developed to represent these wavelengths.

To parallel eventual development of a networked, real-time system, our study on visual and aural translation and delivery was linked to technologies for real-time capture and dissemination. In the process, we also experimented with mapping senses: not only those in our own repertoire, but also those we are not capable of perceiving.

Mapping animal senses

A finch flies over a field of daisies decorated with multicolored patterns. At high speed, she flies through a grove of trees. If she were human, the branches would be going by so fast they'd blur, but she sees each distinctly. She perches on a branch and cocks an eye at the sky, where her ability to discern very slowly moving objects lets her track the sun sinking toward the horizon, assuring her intended path. A fluorescent porch light on a nearby house looks like a strobe light to her. Bright blue shapes below are the beaks of her chicks crying for food.

One of the fundamental questions we explored relates to how animal senses might be mapped to human experience. There are four categories of sensing to consider:

- senses shifted below or above human range, for example, infrared and ultraviolet vision, or infrasonic and ultrasonic hearing

- senses amplified in intensity when experienced by animals: heightened hearing, vision, touch, and smell

- senses mapped in unique ways by animals: sound as a locative sense, or smell to determine directionality

- senses not in our perceptual repertoire, for example, electric fields sensed by sharks, or chemical cues read by insects.

More research is needed to examine how various senses interact, combine, and are hierarchized in animals and humans, as well as how they are processed after stimuli are received. For example, it's possible to imagine sound translated from beyond the ear's frequency range, but what would it be like to sense electrical fields like a shark?

Examples of scientific research tied to this project - vision

Many birds have evolved a fourth visual receptor for enhanced perception of detail and color. The ultraviolet spectrum aids birds in sexual selection - certain species show a preference for intensely colored ultraviolet (UV) markings. Other birds' UV markings are less noticeable or absent, allowing camouflage from predators (Johnsgard, 1999). The UV reflectance of the cuckoo's eggs and that of the eggs of the birds whose nests they parasitize turn out to be closely matched (Lundberg, 2004).

Fig 2: Cuckoo and nest on immersive system

Using buttons on the Wii controller, the immersive system can toggle between normal sight and sound and ultraviolet-enhanced coloration with ultrasonic and infrasonic sound. Where one might see intensely colored birds in normal light, one can discern a camouflaged bird through ultraviolet cues.

Examples of scientific research tied to this project - hearing

Tiger moths can answer the ultrasonic sonar that bats use to locate prey with ultrasonic clicks of their own, warning bats of their bad taste. Other moth species, however, have been found to mimic the ultrasound of the toxic moths (Conner, 2008).

Fig 3: Virtual landscape with moth

Whales use infrasound to transmit calls indicating the location of food, such as krill concentrations, over vast areas of ocean. Whales in areas where food is sparse can thus conserve energy when seeking out food-rich areas (Lavoie, 1999).

Highlighting examples such as the use of ultrasound for predation or defense and infrasound for signaling location, the immersive system offers situations that teach by demonstration, providing viewers an opportunity to explore particular instances of enhanced senses.

The Immersive Visualization System

The immersive system used for these exhibits consists of five adjacent rectangular rear-projection screens arranged in a semi-circle around the viewer. For this system, each of these screens presents a 36" by 48" view into the virtual environment. The seam between adjacent screens is about one-eighth of an inch. Collectively these screens provide a 4-foot high, 12-foot wide, 180-degree semi-circular surrounding 'window' into the virtual space.

Each display screen is driven by its own graphics computer. These five computers are networked and share common data describing the virtual environment displayed. The images displayed from each computer are synchronized in both time and space to present a unified wide-angle surrounding viewing experience.
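One way to picture the spatial side of this synchronization is as a shared viewer heading plus a fixed yaw offset for each screen. The following is a minimal sketch under the assumption that the five screens split the 180-degree field evenly; the function names and the even split are illustrative and are not taken from the system's software.

```python
def screen_yaw_offsets(num_screens=5, total_fov_deg=180.0):
    """Yaw offset (degrees) of each screen's center from straight ahead.

    Assumes the screens divide the total field of view evenly.
    """
    per_screen = total_fov_deg / num_screens
    start = -total_fov_deg / 2.0 + per_screen / 2.0
    return [start + i * per_screen for i in range(num_screens)]


def screen_headings(viewer_heading_deg, offsets):
    """Heading each graphics computer would render for a shared viewer heading."""
    return [(viewer_heading_deg + offset) % 360.0 for offset in offsets]


offsets = screen_yaw_offsets()
headings = screen_headings(10.0, offsets)
```

In such a scheme, only the viewer's position and heading need to be shared over the network; each computer applies its own fixed offset locally.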

Viewer interaction with the displayed virtual environment is by means of a Nintendo Wii game controller. The Wii controller communicates with the immersive system using a Bluetooth wireless link. By manipulating the Wii controller, the viewer can move through the virtual environment and also change viewing direction. Buttons on the controller allow the viewer to select viewing options such as normal daylight or ultraviolet light or the visibility of specific elements in the environment. Controller buttons also enable selection of normal sounds or ultrasonic sounds.
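The button-driven viewing options can be thought of as a small set of toggles shared by all displays. The sketch below is hypothetical: the button names "A" and "B", the class, and the field names are assumptions made for illustration and do not reflect the actual controller mapping or any Wii library.

```python
from dataclasses import dataclass


@dataclass
class ViewerModes:
    """Viewing options selected by the visitor (illustrative field names)."""
    ultraviolet: bool = False      # False = normal daylight rendering
    extended_sound: bool = False   # False = normal sounds only


def handle_button(modes, button):
    """Toggle a mode for a pressed button; unknown buttons are ignored.

    The button-to-mode mapping here is an assumption, not the exhibit's.
    """
    if button == "A":
        modes.ultraviolet = not modes.ultraviolet
    elif button == "B":
        modes.extended_sound = not modes.extended_sound
    return modes


modes = ViewerModes()
for pressed in ["A", "B", "A"]:
    handle_button(modes, pressed)
```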

The immersive system used was developed to be relatively low cost, utilizing off-the-shelf components. It consists of five replicated modules plus a Nintendo Wii controller and a few interconnection components. Each module consists of a graphics computer, a rear-projection screen and its support, an XGA (1024x768 resolution) commodity image projector and its support stand, and an 18" by 24" mirror and its support stand. The mirror is used to 'fold' the projection path for the image. This minimizes the overall footprint of the system. The interconnection components consist of an Ethernet switch and various network, video, and power cables. Cost to replicate this system at today's prices would be $25,000-$30,000 depending on the specific components selected.

The immersive system supports a stereographic mode where each display has both a right eye image and a left eye image, giving a true sense of depth. The right eye and left eye images are separated based on color. Stereo viewing uses glasses with red and cyan color filters. Other schemes for presenting left and right eye images are possible; for example, using liquid crystal shutter glasses or polarizing filters. However, these approaches significantly increase system costs. A system using shutter glasses would cost about $45,000 because of the need for more capable projectors and additional hardware to very carefully synchronize images across the multiple screens.
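Color-based separation of this kind is commonly done by taking the red channel from one eye's image and the green and blue channels from the other. The sketch below shows that basic composition on plain lists of RGB tuples; assigning red to the left eye is a common convention assumed here, and the function names are illustrative rather than part of the system described.

```python
def anaglyph_pixel(left_rgb, right_rgb):
    """Combine one pixel: red from the left-eye image, green/blue from the right."""
    return (left_rgb[0], right_rgb[1], right_rgb[2])


def anaglyph_image(left, right):
    """Combine two equally sized images given as rows of (r, g, b) tuples."""
    if len(left) != len(right) or any(
        len(l_row) != len(r_row) for l_row, r_row in zip(left, right)
    ):
        raise ValueError("left and right images must have the same dimensions")
    return [
        [anaglyph_pixel(l_px, r_px) for l_px, r_px in zip(l_row, r_row)]
        for l_row, r_row in zip(left, right)
    ]


left_view = [[(200, 10, 20), (0, 0, 0)]]
right_view = [[(50, 60, 70), (255, 255, 255)]]
combined = anaglyph_image(left_view, right_view)
```

Because each eye receives only part of the color information, objects that are purely red or purely cyan vanish for one eye, which is why scene colors may need adjusting for this mode.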

Since this system is modular, it is relatively easy to disassemble and move to alternate locations. We have twice successfully moved this system to distant locations - once to Los Angeles and once to New Orleans. Our experience is that it takes about 8 hours to carefully take the system apart and load it into a van for transport. At the other end it takes about 6 hours to reassemble the system and make it operational again.

The basic modular system design concept is adaptable to other screen configurations and other display technologies. We have developed a seven-screen system similar to the one described above, but using large-panel LCD displays rather than rear-projection screens. We have also developed systems using trapezoidal shaped screens, forming dome-like immersive displays (Parke, 2002; Parke, 2005).

Using Sound in the Context of Visualization

The immersive system originally used for the Atta project, 2008, was extended for I'm not there, 2009, with the addition of surround sound. The sound system is similar to a 5.1 surround home theatre product, except the speakers are of higher fidelity than is typical, and they are arranged in a pentagonal shape to improve directionality. A single inexpensive computer is used as an audio server and acts in a coordinated fashion with the graphics servers to move sounds locked to their respective visual objects.

The addition of sound enhances the sense of immersion by completely surrounding the viewer and by providing ambient environmental sounds. In addition, the system helps the visitor find animals by placing their sounds properly in the sound stage, thereby giving both direction and distance cues. This is important because some of the animals are very small and in any case may be hidden by other objects in the scene.
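Direction and distance cues of this kind can be approximated by panning each sound between the two nearest speakers and attenuating it with distance. The sketch below assumes five speakers evenly spaced on a circle (72 degrees apart), constant-power panning, and a simple inverse-distance falloff; these choices and all names are illustrative assumptions, not a description of the exhibit's audio server.

```python
import math

NUM_SPEAKERS = 5  # assumed evenly spaced around the listener


def speaker_gains(source_azimuth_deg, distance, reference_distance=1.0):
    """Per-speaker gains for a source at a given azimuth and distance.

    Pans between the two adjacent speakers with constant power, then
    scales by reference_distance / distance (no boost inside the reference).
    """
    spacing = 360.0 / NUM_SPEAKERS
    azimuth = source_azimuth_deg % 360.0
    index = int(azimuth // spacing)
    fraction = azimuth / spacing - index      # 0 at lower speaker, 1 at upper
    lower = index % NUM_SPEAKERS
    upper = (index + 1) % NUM_SPEAKERS
    attenuation = reference_distance / max(distance, reference_distance)
    gains = [0.0] * NUM_SPEAKERS
    gains[lower] = math.cos(fraction * math.pi / 2.0) * attenuation
    gains[upper] = math.sin(fraction * math.pi / 2.0) * attenuation
    return gains


near = speaker_gains(36.0, 1.0)   # midway between speakers 0 and 1
far = speaker_gains(36.0, 4.0)    # same direction, four times farther
```

Constant-power panning keeps the perceived loudness roughly steady as a source moves between speakers, so only the distance term changes how loud an animal sounds.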

Most dramatically, the system allows for the simulation of the extended auditory range many of the animals have. All of the sounds used in I'm not there are derived from research and field study recordings of the actual animals being depicted. As a first step, the recordings are edited and processed to lower the apparent noise level. Next, for each animal, individual sounds are spliced together to create a loop of that animal's sounds, one to two minutes long. Because the sound loops are so long and each has a unique length, the visitor is not aware of sounds repeating. Finally, various techniques are used to simulate the extended ultra- and infrasound capabilities found in many of these animals. In some cases, we found field study recordings that had used special hardware to shift the natural extended frequencies into the range of human hearing. In other cases, recording studio effects were used to, for example, synthesize high-frequency signals to be added to the natural recording. Depending on the user-selected mode, either the natural or the treated loop is heard.
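One widely used way to bring ultrasound or infrasound into hearing range is time expansion or compression: playing a recording back slower or faster scales every frequency by the same factor. The sketch below illustrates only that arithmetic; it is not a claim about which technique the source recordings used, and the nominal 20 Hz to 20 kHz hearing range and the function names are assumptions for illustration.

```python
def scaled_frequency(original_hz, slowdown_factor):
    """Frequency heard when playback is slowed by slowdown_factor.

    A factor above 1 slows playback and lowers pitch; a factor below 1
    speeds playback up and raises pitch.
    """
    if slowdown_factor <= 0:
        raise ValueError("slowdown_factor must be positive")
    return original_hz / slowdown_factor


def is_audible(frequency_hz, low_hz=20.0, high_hz=20000.0):
    """True if the frequency lies in the nominal range of human hearing."""
    return low_hz <= frequency_hz <= high_hz


bat_call_hz = 50000.0                                  # ultrasonic example value
slowed_hz = scaled_frequency(bat_call_hz, 10.0)        # ten times slower
rumble_hz = 15.0                                       # infrasonic example value
sped_up_hz = scaled_frequency(rumble_hz, 0.125)        # eight times faster
```

The trade-off is duration: a call slowed tenfold also lasts ten times longer, which is one reason studio effects that shift pitch without changing length are sometimes preferred.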

Future visualization efforts using immersive systems can also be enhanced with the use of sound. This is sometimes called "sonification." In general, hearing offers unique features relative to vision. First, although we don't have so-called "X-ray vision," we do have a similar capability for hearing. We take for granted our ability to hear through closed doors or into a car engine in need of repair. Next, we can hear in all directions without having to physically move our heads. The natural response is to use hearing as a sort of "early warning" system and then to focus our visual attention on a given source for further analysis. Finally, when an environment has multiple related sound sources, the auditory system does a much better job of analyzing the ordering and timing relationships among events.

While natural sounds were used in I'm not there, sonification can also be used to present abstract data. For example, a simulated deep-water ocean exploration could assign different tones to temperature, pressure, salinity, and pollutants. Visitors could then monitor those gradients even as they are left free to look about.
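A simple form of such sonification maps each data value onto a pitch within a chosen range. The sketch below uses exponential interpolation so that equal steps in the data are heard as equal musical intervals; the 220 to 880 Hz range, the temperature limits, and the function name are hypothetical values chosen for illustration.

```python
def value_to_frequency(value, value_min, value_max,
                       freq_min_hz=220.0, freq_max_hz=880.0):
    """Map a data value to a tone frequency (Hz).

    Values outside [value_min, value_max] are clamped. Interpolation is
    exponential, so equal data steps give equal pitch intervals.
    """
    if value_max <= value_min:
        raise ValueError("value_max must be greater than value_min")
    t = (value - value_min) / (value_max - value_min)
    t = min(1.0, max(0.0, t))
    return freq_min_hz * (freq_max_hz / freq_min_hz) ** t


# Hypothetical water temperatures (degrees Celsius) along a simulated dive.
temperatures = [2.0, 8.0, 14.0, 20.0]
tones = [value_to_frequency(t, 2.0, 20.0) for t in temperatures]
```

Each additional variable, such as pressure or salinity, could be given its own frequency band or timbre so that several gradients remain distinguishable at once.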

Project 2: Atta texana: A View Underground

Fig 4: Atta colony volumetric model on immersive system at SIGGRAPH New Tech Demos, 2008

One of Texas's smallest natives is also one of its largest: ants. Myrmecologists refer to ant colonies as superorganisms. Atta texana, indigenous to Texas and Louisiana, harvests tree leaves to farm a fungus in vast, underground cavities that can spread over more than an acre of land and reach to great depths, with over a million ants in residence (Wade 1999; Hölldobler and Wilson, 1990). Excavated leafcutting ant nests have proven large enough to contain a 3-story house (Moser, 1963). Scientists believe the ants' symbiotic relationship with the fungus could lead to discoveries in medicine and sustainable agriculture (Foster 2002; Hölldobler and Wilson, 1990).

Previous attempts to model ant colonies have been undertaken by myrmecologist Walter Tschinkel, whose technique involves pouring casting material into the nest, digging it up and piecing it back together. Tschinkel has stated, however, that an Atta colony is so large this technique would be quite a challenge (McClintock, 2003; Tschinkel, 2008). Another means to map ant colonies involves using a backhoe to scrape away successive layers of soil and measuring the diameter of the holes. This results in a kind of abstract image composed of disconnected shapes. Tunnels collapse with this method and cannot be tracked (Moser, 2006).

If measurement is the goal, either approach could be used. But our goal was to gain a holistic view of this subterranean architecture using a method that would not disturb the colony. Ground Penetrating Radar (GPR) provided a means to map the Atta nest. With GPR, high-frequency radar pulses are sent from a surface antenna into the ground, where they are reflected from buried materials or from changes in sediment and soil. The elapsed time between when a pulse is transmitted and when its reflection is received is measured. The sender and receiver are moved along the surface, following transects of a grid (Conyers, 2004). Typical uses of GPR include mapping buried archaeological ruins, and locating unmarked graves, unknown caverns, earthquake faults, and lost pipes or power lines.
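The measured travel time can be converted to an approximate depth using the standard relation between radar velocity and the ground's relative permittivity: the pulse travels down and back, so depth is half the velocity times the two-way time. The sketch below shows this arithmetic only; the permittivity value is an illustrative assumption, not a property measured at the Atta site.

```python
import math

SPEED_OF_LIGHT_M_PER_NS = 0.299792458  # meters per nanosecond in vacuum


def wave_velocity(relative_permittivity):
    """Radar wave velocity (m/ns) in a low-loss material."""
    if relative_permittivity < 1.0:
        raise ValueError("relative permittivity must be at least 1")
    return SPEED_OF_LIGHT_M_PER_NS / math.sqrt(relative_permittivity)


def reflector_depth(two_way_time_ns, relative_permittivity):
    """Depth (m) of a reflector from the two-way travel time of a pulse."""
    return wave_velocity(relative_permittivity) * two_way_time_ns / 2.0


# Illustrative values only: a 20 ns echo in soil with permittivity 9.
depth_m = reflector_depth(20.0, 9.0)
```

Because permittivity varies with soil type and moisture, depths derived this way are estimates unless the velocity is calibrated on site.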

Geophysicist Carl Pierce worked with us to scan a portion of the site. It was a vast area, but only a small section of the entire nest. It took three days to cover the 8-meter grid in 10-centimeter slices. To create the surface model from the GPR data, categories of density values were grouped and translated into layers representing different densities, from void to solid rock. As a result, one could open a file in the modeling program and enable successive layers surrounding the voids to reveal tunnel structure and fungus caches. We were excited to see the 3D model of the Atta colony on screen, to zoom into it and travel through the tunnels and chambers. Scale changes were necessary for the viewer to be transformed into the size of an ant surrounded by the tunnel architecture. With the help of Lauren Simpson, graduate Visualization Sciences student and artist, a portion of the colony model was prepared for viewing on a large-scale, immersive visualization system designed by Professor Fred Parke in the Texas A&M University Visualization Lab (Parke 2002; 2005). Lauren carved away a portion of the model so that it could be textured, lit, and animated.
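The grouping of density values into layers can be sketched as a simple binning step over the scanned volume. In the example below, the normalized 0-to-1 scale, the thresholds, and the intermediate layer names are hypothetical; only the idea of categories running from void to solid rock comes from the description above.

```python
from bisect import bisect_right

# Hypothetical thresholds on a normalized 0..1 density scale.
LAYER_BOUNDS = [0.2, 0.5, 0.8]
LAYER_NAMES = ["void", "loose soil", "compact soil", "solid rock"]


def classify(density):
    """Return the layer name for one normalized density value."""
    return LAYER_NAMES[bisect_right(LAYER_BOUNDS, density)]


def split_into_layers(volume):
    """Group sample positions by layer.

    volume maps (x, y, z) grid positions to normalized density values;
    the result maps each layer name to the set of positions it contains.
    """
    layers = {name: set() for name in LAYER_NAMES}
    for position, density in volume.items():
        layers[classify(density)].add(position)
    return layers


sample_volume = {(0, 0, 0): 0.05, (1, 0, 0): 0.35, (2, 0, 0): 0.9}
layers = split_into_layers(sample_volume)
visible = layers["void"] | layers["loose soil"]   # layers currently enabled
```

Keeping each category as its own set of positions mirrors the ability to enable or disable layers independently when viewing the model.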

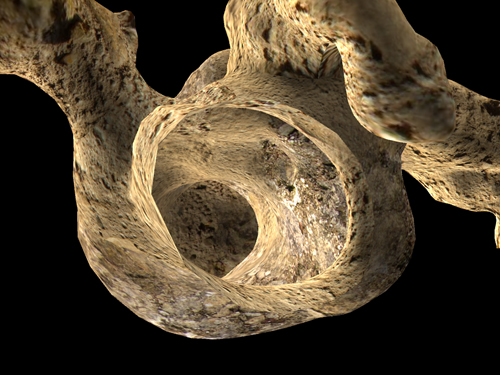

Fig 5: A portion of the ant tunnel prepared for immersive display

SIGGRAPH 2008

The immersive system provided a near-infinite ability to zoom out of and into the 3D ant colony. The tunnel fragment could appear like a tiny crumb floating in air, or one could move inside the nest to view details and tunnel architecture. The overall exterior and interior structure can thus be perceived in a seamless way (LaFayette and Parke, 2008).

Fig 6: Realistic 3D model of a tunnel fragment on the immersive system

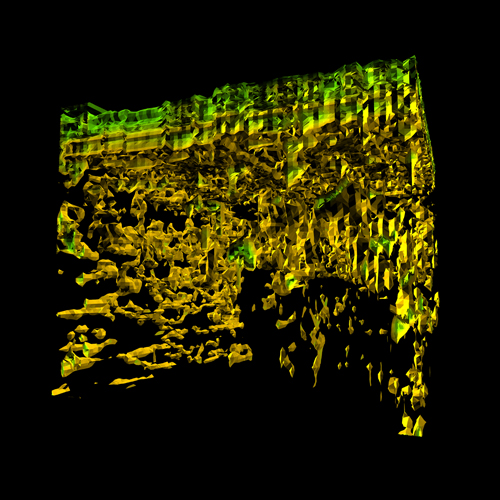

The data cube (representing the entire scan) appeared like a block of Swiss cheese. One could set it spinning, advance to the interior of the model, and back out. One could also enable and disable layers surrounding the voids, or view only voids, to reverse figure and ground: air was then represented as a solid object, the basis for the entire structure. The GPR translation process may be applicable to other projects with similar parameters.

Fig 7: Polygonal model of Atta texana colony from GPR scan

Calder-like in its coloration and form, the data cube seemed like a work of abstract art. Both cube and tunnel files were displayed using anaglyphic stereovision, augmenting a sense of immersion. Color corrections were needed to maintain enough red and cyan in each object, while retaining as much non-stereo color harmony as possible.

A Wii controller was used to interactively navigate the immersive scene. It provided a "home" state, a start and return point. Moving through the models with the Wii, one could enable and disable layers and stereovision, and control viewing orientation and location.

Conclusion

Projects such as these, the result of collaborative interactions between scientists and artists, show that immersive experiences for museums can be affordable, easy to deploy and use, interactive, and intuitive. Immersive displays provide young and old a kinesthetic experience not limited to the physically mobile. It is an experience that desktop or handheld devices cannot match. The system can accommodate content from any research area, can expand the range of offerings for the host institution, and can travel to be shared among several venues.

Acknowledgements

For the Atta project, 2004-2008, Carl J. Pierce, M.S., P.G., Geophysics, St. Lawrence University, directed GPR scanning and filtration and contributed related text. Frederic Parke, Ph.D., Department of Visualization, Texas A&M University, consulted on data translation and related text. Graduate assistant Tatsuya Nakamura, Software Developer, Starz Animation, Toronto, contributed programming, modeling, and related text. Lauren Simpson, MSVS candidate, contributed modeling and animation production. I'm grateful for advice from Maria Tchakerian, Research Assistant, and Bob Coulson, Professor of Entomology, Department of Entomology, Texas A&M University. The project was supported by the Texas A&M Vice President for Research, The Texas A&M Academy for the Visual and Performing Arts, and the Visualization Laboratory.

For the project I'm Not There, 2009, Frederic Parke extended the immersive system software to support enhanced sound and ultraviolet vision. Philip Galanter contributed sound design for ultrasonic and infrasonic content, and programming to allow spatial audio location relative to the Wii controller. Ann McNamara contributed 3D modeling for the marine scene. Contributing researchers include Dr. Michael Greenfield, Lesser Wax Moth, Université François Rabelais de Tours, France; Dr. Michael Smotherman, Mexican Free-tailed bat, Texas A&M Department of Biology; Dr. Thierry Lints, Zebra Finch, Texas A&M Department of Biology; Dr. Innes Cuthill, bird vision, School of Biological Sciences, University of Bristol; Dr. Peggy Hill, bird vocalization, University of Tulsa; Professor Julian Partridge, Ecology of Vision, School of Biological Sciences, University of Bristol; John Hildebrand, Scripps Institution of Oceanography.

The immersive system was developed under a grant from the Texas A&M Vice President for Research. Extensions to the immersive system software were partially funded by the National Science Foundation under MRI grant CNS-0521110. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

Data and documentation are freely available for noncommercial purposes. Contact PI, Carol LaFayette, Associate Professor, Department of Visualization, TAMU 3137, College Station TX 77843-3137. Email: lurleen@viz.tamu.edu. (c)2007 Texas A&M University

References

Conner, W.E. (2008). Tiger Moths and Woolly Bears: Behavior, Ecology, and Evolution of the Arctiidae, 186. New York: Oxford Press.

Conyers, L.B. (2004). Ground Penetrating Radar for Archaeologists. Lanham MD: AltaMira.

Foster, D. (2002). “Small Matters”. Smithsonian Magazine, May, 81.

Hölldobler, B. and E.O. Wilson (1990). The Ants. Cambridge: Belknap Press of Harvard University Press, 601, 598.

Johnsgard, P.A. (1999). “The Ultraviolet Birds of Nebraska”. Nebraska Bird Review Sept. 67:3, 103-105.

Lundberg, B. (2004). “True Colors”. Nature 8, April. 428.

LaFayette C.L., F.I. Parke, P. Galanter and A. McNamara (2009). I'm Not There: Extending the Range of Human Senses to Benefit Wildlife Corridors. SIGGRAPH: ACM 2009 International Conference on Computer Graphics and Interactive Techniques, ACM SIGGRAPH 2009 Art Gallery, New Orleans, Louisiana SESSION: Information Aesthetics Showcase, 23, 2009. http://www.siggraph.org/s2009/galleries_experiences/information_aesthetics/index.php

LaFayette, C.L., and F.I. Parke (2008). Atta texana: a view underground. ACM SIGGRAPH New Tech Demos, Los Angeles, 6. http://www.siggraph.org/s2008/attendees/newtech/23.php

Lavoie, D., and Y. Simard (1999). “The rich krill aggregation of the Saguenay-St. Lawrence Marine Park: hydroacoustic and geostatistical biomass estimates, structure, variability and significance for whales”. Can. J. Fish. Aquat. Sci. 56, 182-197.

McClintock, J. (2003). “The Secret Life of Ants”. Discover Magazine 24, 11.

Moser, J. C. (1963). “Contents and Structure of Atta texana Nest in Summer”. Annals of the Entomological Society of America 56, 3, 286-291.

Moser, J. C. (2006). “Complete Excavation and Mapping of a Texas Leafcutting Ant Nest”. Annals of the Entomological Society of America 99:5, 891-897.

Parke, F.I. (2005). “Lower Cost Spatially Immersive Visualization for Human Environments”. Landscape and Urban Planning, 73:2-3, 234-243.

Parke, F. I. (2002). Next Generation Immersive Visualization Environments. Proc. SIGraDi Conference, Caracas, Venezuela, November.

Tschinkel, W. (2008). Correspondence: "Actually, folks have made a cast of an Atta nest. You can see it in a German film called Ameisen, available on DVD (with an English version). The cast was of an Atta vollenweideri nest in Argentina. It took 10 tons of concrete and two months to excavate. Unfortunately, the cast couldn't be moved and was excavated in place on soil pillars. It is very impressive."

Wade, N. (1999). “For Leafcutter Ants, Farm Life Isn't So Simple”. New York Times, Aug, 8, F1.